Cloud Security

Learn how Striim Cloud uses best-in-class security features for networking, encryption, and secret storage.

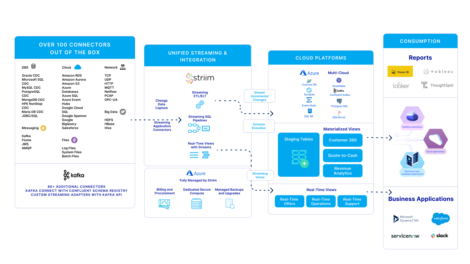

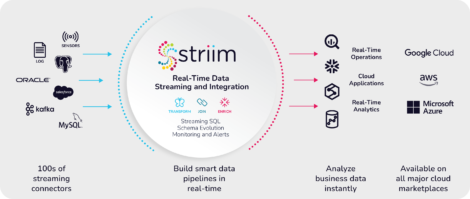
