Your data wasn’t meant to languish in siloed, on-prem databases. If you’re exploring cloud migration, you’re likely feeling the friction of legacy systems, the frustration of fragmented data, and the operational drag of inefficient workflows. The pressure is mounting from all sides: your organization needs real-time data for instant decision-making, regulatory complexity is growing, and the demand for clean, reliable, AI-ready data pipelines has never been higher.

That’s where modern cloud data management comes in. It’s not just about getting data into the cloud (although that is worthwhile for several reasons, from availability and scalability to a more flexible architecture). It’s about rethinking how you ingest, secure, and deliver that data so it can make an impact: powering instant decisions and artificial intelligence.

Time to get our heads in the clouds. This article aims to provide practical guidance for navigating this critical shift. We’ll explore what cloud data management means today, why a real-time approach is essential, and how you can implement a strategy that delivers immediate value while future-proofing your business for years to come.


What is Cloud Data Management?

Cloud data management is the practice of ingesting, storing, organizing, securing, and analyzing data within cloud infrastructure. That said, the definition is evolving. The focus of cloud data management is shifting heavily toward enabling real-time data accessibility to power immediate intelligence and AI-driven operations. Having data in the cloud isn’t enough; it must be continuously available, reliable, and ready for action.

This marks a significant departure from traditional data management, which was often preoccupied with storage efficiency and periodic, batch-based reporting. The new way prioritizes the continuous, real-time processing of data and its transformation from raw information into actionable, AI-ready insights. As data practitioners, it’s our job not just to archive data, but to activate it.

Core Components of Cloud Data Management

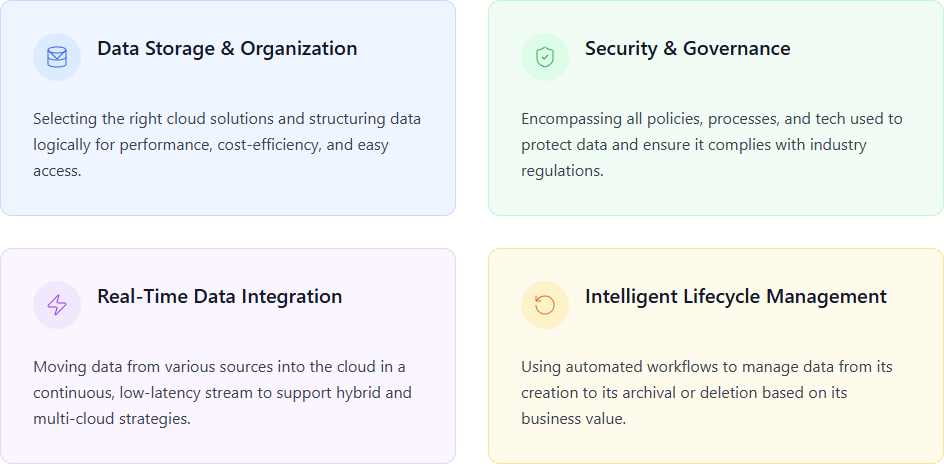

When it comes to the various elements of cloud data management, there’s a lot to unpack. Let’s review the core components of cloud solutions, and outline how they work together to enable agile, secure, and intelligent cloud data management.

Data Storage and Organization

What it is: This involves selecting the right cloud storage solutions (data lakes, data warehouses, or specialized databases) and structuring the data within them. It’s an opportunity to organize data logically for performance, cost-efficiency, and ease of access, rather than simply dumping it into a repository.

Why it’s important: A solid storage strategy prevents the organization from winding up with a “data swamp” where data is inaccessible and unusable. It ensures that analysts and data scientists can find and query data quickly, and that costs are managed effectively by matching the storage tier to the data’s usage patterns.

Security and Governance

What it is: Your security measures and governance strategy encompass all the policies, processes, and tech used to protect sensitive data and ensure it complies with regulations. It includes identity and access management, data encryption (both at rest and in motion), and detailed audit trails.

Why it’s important: In the cloud, the security perimeter is more fluid. Robust governance is non-negotiable for mitigating breach risks, meeting regulatory and industry requirements (such as GDPR, HIPAA, and SOC 2), and building trust with customers. It ensures that only the right people can access the right data at the right time.

Real-Time Data Integration and Migration

What it is: This is the practice of moving data from various sources (on-premises databases, SaaS applications, IoT devices) into the cloud in a continuous, low-latency stream. It also includes synchronizing data between different cloud environments to support hybrid and multi-cloud strategies.

Why it’s important: The world doesn’t work in batches. Real-time integration ensures that decision-making is based on the freshest data possible. For migrations, it enables zero-downtime transitions, allowing legacy and cloud systems to operate in parallel without disrupting operations.

Intelligent Data Lifecycle Management

What it is: This is where automated workflows manage data from its creation to its archival or deletion. It involves creating policies and cloud applications that automatically classify data, move it between hot and cold storage tiers based on its value and access frequency, and securely purge it when it’s no longer needed.

Why it’s important: Not all data is created equal. Intelligent lifecycle management optimizes storage costs by ensuring you aren’t paying premium prices for aging or low-priority data. It also reduces compliance risk by automating data retention and deletion policies, so you don’t accidentally hold onto sensitive data.
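To make the lifecycle idea concrete, here is a minimal sketch of the kind of rule an automated lifecycle policy might apply. The tier names (`hot`, `cold`, `archive`), the `DataObject` type, and the day thresholds are all hypothetical; in practice such policies are usually configured in a cloud provider’s storage lifecycle settings rather than hand-coded, and the right thresholds depend on your access patterns and retention obligations.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical thresholds, chosen only for illustration.
HOT_MAX_IDLE_DAYS = 30
COLD_MAX_IDLE_DAYS = 365
RETENTION_DAYS = 7 * 365


@dataclass
class DataObject:
    name: str
    created: date
    last_accessed: date


def lifecycle_action(obj: DataObject, today: date) -> str:
    """Return the tier (or action) this hypothetical policy assigns to obj."""
    age_days = (today - obj.created).days
    idle_days = (today - obj.last_accessed).days

    # Past its retention period: purge, regardless of recent access.
    if age_days > RETENTION_DAYS:
        return "delete"
    # Recently used data stays on fast, more expensive storage.
    if idle_days <= HOT_MAX_IDLE_DAYS:
        return "hot"
    # Occasionally used data moves to a cheaper tier.
    if idle_days <= COLD_MAX_IDLE_DAYS:
        return "cold"
    # Rarely touched data goes to the cheapest archival tier.
    return "archive"


today = date(2025, 1, 1)
orders = DataObject("orders_2024_12", date(2024, 12, 1), date(2024, 12, 30))
print(lifecycle_action(orders, today))  # hot
```

Checking retention before access recency is a deliberate choice in this sketch: data past its retention window is purged even if it was read recently, which is what lets lifecycle automation serve compliance as well as cost control.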

The Benefits of Effective Cloud Data Management

Managing data in the cloud has a range of benefits that extend beyond better infrastructure. The strategy has tangible business impact, from operational savings to enabling advanced analytics and AI use cases.

Unprecedented Scalability and Operational Agility

Cloud platforms provide near-limitless scalability, allowing you to handle massive data volumes without the need for upfront hardware investment. This elasticity means you can scale resources on demand — up during peak processing times and down during lulls. It also gives teams the agility to experiment, innovate, and respond to market changes faster than ever before.

Reduced Operational Costs

By moving from a capital expenditure (CapEx) model of buying and maintaining hardware to an operational expenditure (OpEx) model, organizations can significantly lower their total cost of ownership (TCO). Cloud data management reduces or eliminates the costs of hardware maintenance, data center real estate, and the staff needed to run them, freeing up capital and engineering resources for more strategic initiatives.

Business Continuity and Resilience

Leading cloud providers offer robust, built-in disaster recovery and high-availability features that are often too complex and expensive for most organizations to implement on-premises. By taking advantage of distributed data centers in multiple locations, as well as automated failover, cloud data management ensures that your data remains accessible and your operations can continue—even during localized outages or hardware failures.

Next-Gen Analytics, AI, and Machine Learning

Perhaps the most significant benefit is the ability to power the next generation of data applications. Cloud platforms provide access to powerful, managed services for AI and machine learning. Building a robust cloud data ecosystem ensures that these services are fed with a continuous stream of clean, reliable, and real-time data—the essential fuel for developing predictive models, generative AI applications, and sophisticated analytics.

Strategic Imperatives for Successful Cloud Data Management Implementation

Success in the cloud is predicated on aligning people, processes, and priorities to drive business outcomes. That’s why a strong cloud data management strategy requires careful planning and a clear focus on the following imperatives.

Align IT Operational Needs with C-Suite Strategic Objectives

Technical wins are satisfying, but they’re only meaningful if they translate into business value. The C-suite wants to know how a successful technical outcome speeds up time-to-market, grows revenue, or mitigates risk. The key is to create shared KPIs that bridge the gap between IT operations and business goals. For example, an IT goal of “99.99% data availability” becomes a business goal of “uninterrupted e-commerce operations during peak sales events.” Fostering this alignment through joint planning sessions and cross-functional governance committees ensures everyone is pulling in the same direction.
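Turning an availability KPI into a downtime budget is one way to make that translation explicit. A 99.99% target over a 365-day year leaves 0.01% of 525,600 minutes, roughly 52.6 minutes of downtime per year. The small helper below (a sketch; the function name is hypothetical) does the same arithmetic for any target:

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def allowed_downtime_minutes(availability: float, period_minutes: int = MINUTES_PER_YEAR) -> float:
    """Maximum downtime an availability target permits over the given period."""
    return (1 - availability) * period_minutes


for target in (0.999, 0.9999):
    print(f"{target:.2%} availability -> {allowed_downtime_minutes(target):.1f} minutes of downtime per year")
# 99.90% availability -> 525.6 minutes of downtime per year
# 99.99% availability -> 52.6 minutes of downtime per year
```

Framed this way, the business conversation becomes concrete: is under an hour of total interruption per year acceptable during peak sales events, or does the target need another nine?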

Plan for Real-Time Data Needs and Future Scalability

The days of relying solely on batched data are over. The world runs on immediate insights, and your infrastructure must be built to support continuous data ingestion and processing. This means moving beyond outdated systems that can’t keep pace. When auditing your data infrastructure, don’t just look for storage patterns and compliance gaps; actively identify opportunities to unlock value from real-time data streams. Future-proofing your architecture for real-time and AI will prepare you not just for the immediate future, but for five or ten years from now, when AI-native systems will be the norm.

Select the Right Ecosystem

Your choice of Cloud Service Provider (CSP) and specialized data platforms is critical. When evaluating options, look beyond basic features and consider key criteria like scalability, latency, and regulatory alignment. Crucially, you should prioritize platforms that excel at seamless, real-time data integration across a wide array of sources and destinations—from legacy databases and SaaS apps to modern cloud data warehouses. The right ecosystem should handle the complexity of your enterprise data, support hybrid and multi-cloud strategies, and minimize the need for extensive custom coding and brittle, point-to-point connections.

Establish Robust Governance and Continuous Compliance

Governance in the cloud must be dynamic and continuous. Adopt frameworks like COBIT or ITIL and extend them to real-time data flows, ensuring data quality, role-based access controls, and auditable trails for data in motion. Consider platforms with built-in security controls and features that simplify adherence to strict regulations and standards like HIPAA, SOC 2, and GDPR. This proactive approach to governance ensures that all your data, whether at rest or actively streaming, is secure and compliant by design.
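The pairing of role-based access control with an auditable trail can be sketched in a few lines. The roles, permission strings, and `AccessController` class below are hypothetical stand-ins for what an identity and access management service provides; the point is that every decision, including denials, is recorded.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role -> permission mapping, for illustration only.
ROLE_PERMISSIONS = {
    "analyst": {"read:sales"},
    "data_engineer": {"read:sales", "read:customers", "write:pipelines"},
    "auditor": {"read:audit_log"},
}


@dataclass
class AccessController:
    audit_log: list = field(default_factory=list)

    def check(self, user: str, role: str, permission: str) -> bool:
        """Allow the action only if the user's role grants it; log every decision."""
        allowed = permission in ROLE_PERMISSIONS.get(role, set())
        # Denials are logged too, so the trail shows attempted as well as granted access.
        self.audit_log.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "permission": permission,
            "allowed": allowed,
        })
        return allowed


ac = AccessController()
print(ac.check("maria", "analyst", "read:sales"))      # True
print(ac.check("maria", "analyst", "read:customers"))  # False
print(len(ac.audit_log))                               # 2
```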

Common Challenges in the Cloud Data Journey (and How to Overcome Them)

Even the best-laid (data) plans go awry. The path to mature cloud data management is paved with common pitfalls, but the right planning and strategic architectural choices will help you navigate them successfully. Let’s review the main challenges, and how to tackle them.

Data Silos

One big draw of the cloud is the promise of a unified data landscape, but it’s all too easy to recreate silos by adopting disparate, point-to-point solutions for different needs. The fix is to adopt a unified data integration platform that acts as a central fabric: the glue that connects your data sources and keeps data consistent and integrated across the organization.

How Striim helps: Striim serves as the integration backbone that unifies your data across the enterprise. With hundreds of connectors to both legacy and modern systems, Striim eliminates data silos by enabling continuous, real-time data movement from any source to any target—all through a single, streamlined platform.

Data Security, Compliance & Governance

Secure, compliant, well-governed data isn’t flashy, but it’s paramount to a successful cloud data strategy. Maintaining control over data that is constantly moving across different environments requires a “data governance-by-design” approach. Prioritize platforms with built-in features for data masking, role-based access, and detailed, auditable logs to ensure compliance is continuous, not an afterthought.

How Striim helps: Striim takes a proactive and intelligent approach to data protection. Sherlock, Striim’s sensitive data detection engine, scans source systems to identify and report on data that may contain regulated information such as PHI (Protected Health Information) or PII (Personally Identifiable Information). It provides a comprehensive inventory of all sources potentially holding sensitive data, giving organizations the visibility needed to manage risk effectively. Once sensitive data is identified, Sentinel, Striim’s AI-powered data security agent, can automatically mask, encrypt, or tag that data to ensure compliance with internal policies and external regulations—helping organizations protect sensitive information without disrupting real-time integration flows.
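The article doesn’t describe how Sherlock or Sentinel work internally, so the following is only a toy illustration of the general detect-then-protect pattern: a regex scan flags fields that look like email addresses or US Social Security numbers, and flagged values are replaced with deterministic keyed-hash tokens (HMAC-SHA256). The patterns, the key, and the `tok_` prefix are assumptions for illustration.

```python
import hashlib
import hmac
import re

# Simplified, hypothetical patterns; real detectors add validation and context.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical key; in practice it would come from a secrets manager.
TOKEN_KEY = b"replace-with-a-managed-secret"


def detect_pii(record: dict) -> dict:
    """Map each field name to the PII types found in its (string) value."""
    findings = {}
    for field_name, value in record.items():
        if not isinstance(value, str):
            continue
        found = [kind for kind, pattern in PII_PATTERNS.items() if pattern.search(value)]
        if found:
            findings[field_name] = found
    return findings


def tokenize(value: str) -> str:
    """Deterministic keyed hash: the same input always yields the same token."""
    digest = hmac.new(TOKEN_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]


def mask_record(record: dict) -> dict:
    """Return a copy of record with every flagged field replaced by a token."""
    flagged = detect_pii(record)
    return {name: tokenize(value) if name in flagged else value for name, value in record.items()}


row = {"id": 42, "email": "jane@example.com", "note": "SSN 123-45-6789 on file", "city": "Austin"}
print(detect_pii(row))  # {'email': ['email'], 'note': ['us_ssn']}
print(mask_record(row))
```

Deterministic tokens are a common design choice because masked columns can still be joined or counted. Plain regexes produce both false positives and misses, which is why production detectors go further.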

Striim is designed with enterprise-grade security and meets the highest industry standards. It is SOC 2 Type II certified, GDPR certified, HIPAA compliant, PII compliant, and a PCI DSS 4.0 Service Provider Level 1 certified platform. For encryption, Striim supports TLS 1.3 to secure data in transit and AES-256 to protect data at rest. Additionally, Striim enables secure, private connectivity through Azure Private Link, Google Private Service Connect, and AWS PrivateLink.
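For reference, this is what enforcing TLS 1.3 looks like on the client side of a connection, using Python’s standard `ssl` module. It is not Striim configuration, just an illustration of the protocol floor described above; the function names are hypothetical.

```python
import socket
import ssl


def make_tls13_client_context() -> ssl.SSLContext:
    """Client-side TLS context that refuses to negotiate anything below TLS 1.3."""
    # create_default_context() already enables certificate verification and
    # hostname checking; we only raise the minimum protocol version.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


def negotiated_version(host: str, port: int = 443) -> str:
    """Connect to host and report the protocol version actually negotiated."""
    ctx = make_tls13_client_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            return tls.version()  # "TLSv1.3" when the handshake succeeds
```

Calling `negotiated_version("example.com")` needs network access, and the host is only an example. If the server cannot negotiate TLS 1.3, the handshake raises `ssl.SSLError` instead of silently falling back to an older protocol.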

With these integrated capabilities, Striim not only ensures seamless and real-time data integration across diverse systems—it also delivers robust security, governance, and regulatory compliance at every stage of the data lifecycle.

Real-Time Synchronization & Processing

Many legacy tools and even some cloud-native solutions are still batch-oriented at their core. They cannot meet the sub-second latency demands of modern analytics and operations. Overcoming this requires streaming-native architecture, using technologies like Change Data Capture (CDC) to process data the instant it’s created.
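Log-based CDC reads inserts, updates, and deletes from the database’s transaction log instead of repeatedly querying tables, then replays them on the target in log order. The sketch below models that replay step. The in-memory `log` list stands in for a real log reader, and the `lsn` field (log sequence number) plays the role of the log position a CDC tool checkpoints so it can resume after a restart; all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ChangeEvent:
    lsn: int                    # position in the source transaction log
    op: str                     # "insert", "update", or "delete"
    key: int                    # primary key of the affected row
    row: Optional[dict] = None  # new row image (None for deletes)


class Replica:
    """Target table kept in sync by replaying change events in log order."""

    def __init__(self):
        self.rows = {}
        self.last_applied_lsn = 0  # checkpoint: where to resume after a restart

    def apply(self, event: ChangeEvent) -> None:
        # Skip events already applied, so re-reading the log after a crash is safe.
        if event.lsn <= self.last_applied_lsn:
            return
        if event.op in ("insert", "update"):
            self.rows[event.key] = event.row
        elif event.op == "delete":
            self.rows.pop(event.key, None)
        else:
            raise ValueError(f"unknown operation: {event.op}")
        self.last_applied_lsn = event.lsn


log = [
    ChangeEvent(101, "insert", 1, {"id": 1, "status": "new"}),
    ChangeEvent(102, "insert", 2, {"id": 2, "status": "new"}),
    ChangeEvent(103, "update", 1, {"id": 1, "status": "shipped"}),
    ChangeEvent(104, "delete", 2),
]

replica = Replica()
for event in log:
    replica.apply(event)
for event in log:  # replaying the same log again changes nothing
    replica.apply(event)

print(replica.rows)              # {1: {'id': 1, 'status': 'shipped'}}
print(replica.last_applied_lsn)  # 104
```

Tracking the last applied position is what makes replay idempotent: if the consumer restarts and re-reads part of the log, events it has already applied are skipped rather than applied twice.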

How Striim helps: Striim was purpose-built for real-time data movement. Striim’s customers benefit from a patented, in-memory integration and intelligence platform that leverages the most advanced log-based Change Data Capture (CDC) technologies in the industry. Designed to minimize impact on source systems, Striim can read from standbys or backups where possible, ensuring performance and availability are never compromised. With sub-second latency, your cloud data remains a continuously updated reflection of your source systems, enabling truly real-time insights and decision-making.

Scalability and Cost Control

The cloud’s pay-as-you-go model is a double-edged sword. While it offers incredible scalability, costs can spiral out of control if you’re not careful. Address this with intelligent data lifecycle policies, efficient in-flight data processing to reduce storage loads, and continuous monitoring of resource consumption.

How Striim helps: By processing and transforming data in flight, Striim enables you to filter out noise and deliver only high-value, analysis-ready data to the cloud—significantly reducing data volumes and lowering both cloud storage and compute costs. Built for enterprise resilience, Striim supports a highly available, multi-node cluster architecture that ensures fault tolerance and supports active-active configurations for mission-critical workloads. Striim’s platform is designed to scale effortlessly—horizontally, by adding more nodes to the cluster to support growing data demands or additional use cases, and vertically, by increasing infrastructure resources to handle larger workloads or more complex transformations. This flexible, real-time architecture ensures consistent performance, reliability, and cost efficiency at scale.
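In-flight filtering can be as simple as dropping events and columns before they are written to the target. The sketch below uses a Python generator so each event is handled as it streams past; the event shapes and the rule that heartbeat and debug events are noise are hypothetical.

```python
from typing import Iterable, Iterator


def filter_and_project(events: Iterable[dict], keep_fields: tuple) -> Iterator[dict]:
    """Drop low-value events and unneeded columns before they reach the target."""
    for event in events:
        # Hypothetical rule: heartbeat and debug events carry no analytical value.
        if event.get("type") in ("heartbeat", "debug"):
            continue
        yield {name: event[name] for name in keep_fields if name in event}


raw = [
    {"type": "order", "order_id": 1, "amount": 25.0, "user_agent": "Mozilla/5.0", "trace": "abc"},
    {"type": "heartbeat", "ts": 1},
    {"type": "debug", "msg": "cache miss"},
    {"type": "order", "order_id": 2, "amount": 40.0, "user_agent": "curl/8.0", "trace": "def"},
]

delivered = list(filter_and_project(raw, keep_fields=("order_id", "amount")))
print(delivered)  # [{'order_id': 1, 'amount': 25.0}, {'order_id': 2, 'amount': 40.0}]
print(f"{len(delivered)} of {len(raw)} events delivered")  # 2 of 4 events delivered
```

Both kinds of reduction compound: fewer rows and narrower rows mean less data stored and less data scanned by every downstream query.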

Data Quality and Observability

“Garbage in, garbage out” is a cliché, but it’s amplified in the cloud. Poor data quality can corrupt analytics and erode trust across the organization. The solution is to build observability into your pipelines from day one, with tools for in-flight data validation, schema drift detection, and end-to-end lineage tracking.

How Striim helps: Striim delivers robust, continuous data validation and real-time monitoring to ensure data integrity and operational reliability. With its built-in Data Validation Dashboard, users can easily compare source and target datasets in real time, helping to quickly identify and resolve data discrepancies. Striim also offers comprehensive pipeline monitoring through its Web UI, providing end-to-end visibility into every aspect of your data flows. This includes detailed metrics for sources, targets, CPU, memory, and more—allowing teams to fine-tune applications and infrastructure to consistently meet data quality SLAs.
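The dashboard itself isn’t shown here, but the underlying idea of source-to-target validation can be sketched generically: fingerprint each row, index by primary key, and compare the two sides. The `compare` function and the sample rows are hypothetical.

```python
import hashlib
import json


def row_fingerprint(row: dict) -> str:
    """Stable hash of a row; sort_keys makes column order irrelevant."""
    payload = json.dumps(row, sort_keys=True, default=str).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def compare(source_rows: list, target_rows: list, key: str = "id") -> dict:
    """Classify discrepancies between source and target by primary key."""
    src = {r[key]: row_fingerprint(r) for r in source_rows}
    tgt = {r[key]: row_fingerprint(r) for r in target_rows}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "unexpected_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


source = [{"id": 1, "qty": 5}, {"id": 2, "qty": 3}, {"id": 3, "qty": 7}]
target = [{"id": 1, "qty": 5}, {"id": 2, "qty": 4}, {"id": 4, "qty": 1}]
print(compare(source, target))
# {'missing_in_target': [3], 'unexpected_in_target': [4], 'mismatched': [2]}
```

Comparing fingerprints instead of full rows keeps the check cheap when the two sides live in different systems, since only short hashes need to cross the network.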

Schema Migration

Striim supports schema migration as part of its end-to-end pipeline capabilities. This feature allows for seamless movement of database schema objects—such as tables, fields, and data types—from source to target, enabling organizations to quickly replicate and modernize data environments in the cloud or across platforms without manual intervention.
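At its core, schema migration means reading column definitions from the source and emitting equivalent DDL for the target. The sketch below assumes an Oracle source and a BigQuery target purely as an example; the type mapping is deliberately incomplete (it ignores precision, scale, and many types), and `translate_table` is a hypothetical helper, not Striim’s implementation.

```python
# Illustrative, incomplete mapping from a few Oracle column types to BigQuery types.
TYPE_MAP = {
    "NUMBER": "NUMERIC",
    "VARCHAR2": "STRING",
    "DATE": "DATETIME",  # Oracle DATE also stores a time of day
    "TIMESTAMP": "DATETIME",
    "CLOB": "STRING",
}


def translate_table(table: str, columns: list) -> str:
    """Build target DDL from (column_name, source_type, nullable) tuples."""
    lines = []
    for name, source_type, nullable in columns:
        target_type = TYPE_MAP.get(source_type.upper())
        if target_type is None:
            # Fail loudly rather than guess: unmapped types need a human decision.
            raise ValueError(f"no mapping for {source_type} (column {name})")
        suffix = "" if nullable else " NOT NULL"
        lines.append(f"  {name} {target_type}{suffix}")
    return f"CREATE TABLE {table} (\n" + ",\n".join(lines) + "\n);"


ddl = translate_table("sales.orders", [
    ("order_id", "NUMBER", False),
    ("customer_email", "VARCHAR2", True),
    ("created_at", "DATE", False),
])
print(ddl)
# CREATE TABLE sales.orders (
#   order_id NUMERIC NOT NULL,
#   customer_email STRING,
#   created_at DATETIME NOT NULL
# );
```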

Schema Evolution

In dynamic environments where data structures are frequently updated, Striim offers robust support for schema evolution and drift. The platform automatically detects changes in source schemas—such as added or removed fields—and intelligently propagates those changes downstream, ensuring pipelines stay in sync and continue to operate without interruption. This eliminates the need for manual reconfiguration and reduces the risk of pipeline breakages due to structural changes in source systems.
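Detecting drift amounts to diffing the previous and current schema; deciding how to propagate it is a policy choice. The sketch below uses a hypothetical policy: new columns are added to the target, removed columns are retained so historical data is preserved, and type changes are escalated for review instead of applied automatically. It is not a description of Striim’s own drift handling.

```python
def diff_schema(old: dict, new: dict) -> dict:
    """Compare two {column: type} schemas and classify the drift."""
    added = {c: t for c, t in new.items() if c not in old}
    removed = sorted(c for c in old if c not in new)
    retyped = {c: (old[c], new[c]) for c in old.keys() & new.keys() if old[c] != new[c]}
    return {"added": added, "removed": removed, "retyped": retyped}


def evolve_target(target_schema: dict, drift: dict) -> dict:
    """Hypothetical policy: add new columns, keep removed ones, escalate type changes."""
    if drift["retyped"]:
        raise RuntimeError(f"type change needs review: {drift['retyped']}")
    evolved = dict(target_schema)
    evolved.update(drift["added"])
    return evolved


v1 = {"id": "INT", "email": "STRING", "fax": "STRING"}
v2 = {"id": "INT", "email": "STRING", "phone": "STRING"}
drift = diff_schema(v1, v2)
print(drift)  # {'added': {'phone': 'STRING'}, 'removed': ['fax'], 'retyped': {}}
print(evolve_target(v1, drift))
# {'id': 'INT', 'email': 'STRING', 'fax': 'STRING', 'phone': 'STRING'}
```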

Vendor Lock-In in Hybrid/Multi-Cloud Environments

A valid fear many data leaders share is over-reliance on a single cloud provider’s proprietary services. You can mitigate this risk by choosing platforms that are cloud-agnostic and built on open standards. A strong multi-cloud integration strategy ensures you can move data to and from any environment, with the flexibility to choose the best service for the job without being locked in.

How Striim helps: Striim is fully cloud-agnostic, empowering seamless, real-time data movement to, from, and across all major cloud platforms—AWS, Azure, Google Cloud—as well as on-premises environments. This flexibility enables you to architect a best-of-breed, hybrid or multi-cloud strategy without the constraints of vendor lock-in, so you can choose the right tools and infrastructure for each workload while maintaining complete control over your data.

Additionally, Striim offers flexible deployment options to fit your infrastructure strategy. You can self-manage Striim in your own data center or on any major cloud hyperscaler, including Google Cloud, Microsoft Azure, and AWS. For teams looking to reduce operational overhead, Striim also provides a fully managed SaaS offering available across all leading cloud platforms.

To get started, you can explore Striim with our free Developer Edition.

Emerging Trends Shaping the Future of Cloud Data Management

The world of cloud data is evolving. Even as you read this article, new technologies and tactics are likely emerging. You don’t have to stay on top of every hype-cycle, but it’s worth keeping an eye on the latest trends for how we manage, process, and govern data. Here are a few key developments data leaders should be monitoring.

AI-Driven Automation in Data Pipelines

Striim is at the forefront of AI-driven data infrastructure, aligning directly with the shift toward intelligent automation in data pipelines. Its built-in AI agents handle critical functions that reduce manual effort and enhance real-time decision-making. Sherlock AI and Sentinel AI classify and protect sensitive data in motion, strengthening data governance and security. Foreseer delivers real-time anomaly detection and forecasting to identify data quality issues before they impact downstream systems. Euclid enables semantic search and advanced data categorization using vector embeddings, enhancing analysis and discoverability.
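The article doesn’t say which models Foreseer uses. As a minimal, commonly used baseline, a rolling z-score flags a metric (here, a hypothetical rows-per-minute series from a pipeline) when it strays more than a set number of standard deviations from its recent history. The window size and threshold are illustrative assumptions.

```python
from collections import deque
from statistics import mean, stdev


def detect_anomalies(values, window: int = 5, threshold: float = 3.0):
    """Yield (index, value) for points far outside the trailing window's norm."""
    history = deque(maxlen=window)
    for i, value in enumerate(values):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            # Guard against a perfectly flat window (sigma == 0).
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                yield i, value
        history.append(value)


rows_per_minute = [1000, 1020, 990, 1010, 1005, 995, 120, 1000, 1015]
print(list(detect_anomalies(rows_per_minute)))  # [(6, 120)]
```

In this sketch the anomalous value still enters the window and inflates the standard deviation for the next few points; excluding flagged points from the history is a simple refinement.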

Complementing these capabilities, Striim CoPilot assists users in designing and troubleshooting data pipelines, improving efficiency and accelerating deployment. Together, these AI components enable autonomous optimization, proactive monitoring, and intelligent data management across the streaming data lifecycle.

Composable Architectures and Modular Data Services

Monolithic, one-size-fits-all data platforms are out; flexible, composable architectures are in. Composable approaches let organizations assemble their data stack from best-of-breed, interoperable services, enabling greater agility and letting teams swap components in and out as business needs change. Striim supports this modern approach with a mission-critical, highly available architecture, offering active-active failover in both self-managed and fully managed environments. It also scales both horizontally and vertically, ensuring performance and reliability as data volumes and workloads grow.
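Composability mostly comes down to coding against interfaces rather than concrete systems. In the sketch below, `run_pipeline` depends only on a `Sink` protocol, so the target can be swapped without touching the pipeline logic. `ListSink` and `PrintSink` are hypothetical stand-ins for real destinations.

```python
from typing import Callable, Iterable, Protocol


class Sink(Protocol):
    def write(self, records: Iterable[dict]) -> int: ...


class ListSink:
    """Stand-in for a warehouse target; keeps records in memory."""

    def __init__(self):
        self.records = []

    def write(self, records: Iterable[dict]) -> int:
        batch = list(records)
        self.records.extend(batch)
        return len(batch)


class PrintSink:
    """Stand-in for a different target; writes records to stdout."""

    def write(self, records: Iterable[dict]) -> int:
        count = 0
        for record in records:
            print(record)
            count += 1
        return count


def run_pipeline(source: Iterable[dict], transform: Callable[[dict], dict], sink: Sink) -> int:
    # The pipeline only knows the Sink interface, so targets are interchangeable.
    return sink.write(transform(r) for r in source)


def add_total(r: dict) -> dict:
    return {**r, "total": r["qty"] * r["price"]}


orders = [{"qty": 2, "price": 5.0}, {"qty": 1, "price": 9.5}]
warehouse = ListSink()
run_pipeline(orders, add_total, warehouse)
print(warehouse.records[0]["total"])  # 10.0
run_pipeline(orders, add_total, PrintSink())  # same pipeline, different target
```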

Privacy-Enhancing Technologies and Ethical Data Handling

Data privacy is increasingly front-of-mind for regulators and consumers alike. As a result, technologies that protect data while it’s being used will become standard. Techniques like differential privacy, federated learning, and homomorphic encryption will allow for powerful analysis without exposing sensitive raw data, making ethical data handling a core principle of data architecture moving forward.
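Differential privacy is the most concrete of the techniques above. For a counting query, the textbook Laplace mechanism adds noise with scale 1/ε, because adding or removing one person changes a count by at most 1. The sketch below implements that mechanism with the standard library; the dataset and the ε value are hypothetical, and production use would rely on a vetted library that also tracks the privacy budget.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponential draws with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon: float, rng=None) -> float:
    """Epsilon-differentially private count via the Laplace mechanism.

    A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    """
    if rng is None:
        rng = random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


patients = [{"age": a} for a in (34, 71, 68, 45, 80, 29, 66)]
noisy = private_count(patients, lambda p: p["age"] >= 65, epsilon=0.5, rng=random.Random(7))
print(round(noisy, 2))  # the true count is 4; the published value is perturbed
```

Smaller ε means more noise and stronger privacy; the analyst sees only the noisy count, never whether any individual record was included.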

At Striim, we take security seriously and are committed to protecting data through robust, industry-leading practices. All data is encrypted at rest using AES-256 and in transit using TLS, and strict access controls ensure that only authorized personnel can access sensitive information. Striim undergoes regular third-party audits, including SOC 2 Type II evaluations, to validate our security and confidentiality practices. We are certified for SOC 2 Type II, GDPR, HIPAA, PCI DSS 4.0 (Service Provider Level 1), and PII compliance.

Multi-Cloud Strategies and Unified Integration

Multi-cloud is already a reality for many, but the next phase is about seamless integration across clouds, not just coexistence. The trend is moving toward a unified control plane—a single platform that can manage and move data across different clouds (AWS, Azure, GCP) and on-premises systems without friction, providing a truly holistic view of the entire data landscape.

Striim is built for the multi-cloud future, enabling seamless data integration across diverse environments—not just coexistence. As organizations increasingly operate across AWS, Azure, GCP, and on-premises systems, Striim provides a unified control plane that simplifies real-time data movement and management across these platforms. By delivering continuous, low-latency streaming data pipelines, Striim empowers businesses with a holistic view of their entire data landscape, regardless of where their data resides. This frictionless integration ensures agility, consistency, and real-time insight across hybrid and multi-cloud architectures.

Real-Time Cloud Data Management Starts with Striim

As we’ve explored, effective cloud data management demands a multi-threaded approach—one that accounts for speed, intelligence, and reliability. It requires a real-time foundation to deliver on the promise of instant insights and AI-driven operations. This is where Striim provides a uniquely powerful cloud solution.

Built on a streaming-native architecture, Striim is designed from the ground up for low-latency, high-throughput data integration. With deep connectivity across legacy databases, enterprise applications, and modern cloud platforms like Google Cloud, AWS, and Azure, Striim bridges your entire data estate.

Our platform empowers you to process, enrich, and analyze data in-flight, ensuring that only clean, valuable, and AI-ready data lands in your cloud destinations. Combined with robust governance and end-to-end observability, Striim helps enterprises modernize faster, act on data sooner, and scale securely across the most complex hybrid cloud and multi-cloud environments.

Ready to activate your data? Explore the Striim platform or book a demo with one of our data experts today.