Confluent provides enterprise support and fully managed services for Apache Kafka – an open-source data streaming platform. Many enterprises use Confluent to standardize their deployment of Apache Kafka and deploy applications that leverage streaming data.

While Confluent is a well-known option for data streaming platforms, its complexity can pose significant challenges for businesses. Users often have to grapple with intricate, low-level Kafka elements such as topics, brokers, and partitions, which takes focus away from more strategic tasks.

If you’re in the market for more user-friendly or more cost-effective Confluent alternatives, the list below is designed with you in mind.

Top 5 Confluent Alternatives

1. Striim: An end-to-end streaming platform offering connectors, change data capture, high-speed streaming SQL, and support for both historical and real-time data, along with streaming visualizations.

When to consider Striim: If you have business use cases that require stringent data SLAs for uptime, freshness, and quality, Striim’s end-to-end product provides shorter implementation times and stronger data delivery guarantees. Learn how American Airlines went to production with global-scale aircraft operations in just 12 weeks with Striim.

2. AWS MSK: A fully managed Apache Kafka service that integrates directly with other AWS services. AWS MSK can provide significant cost savings compared to Confluent.

When to consider AWS MSK: If your company is heavily invested in Amazon Web Services (AWS) and is looking for a cost-effective solution for data streaming, AWS MSK is a popular choice.

3. Azure Event Hubs: Microsoft’s solution tailored for big data and stream analytics, providing a seamless experience for Azure users. Event Hubs can provide significant cost savings compared to Confluent and is an ideal solution for Azure customers.

When to consider Azure Event Hubs: If your company is heavily invested in Microsoft Azure, Azure Event Hubs provides a cost-effective solution. It also offers broad support for deployments across many regions.

4. Google Cloud Pub/Sub: Known for handling massive real-time streams with ease and for its robust scalability.

When to consider Google Cloud Pub/Sub: Companies with a large Google Cloud footprint will get significant value from Pub/Sub. It also provides flexibility for fan-in and fan-out topologies across geographic regions.

5. Redpanda: A source-available distributed messaging platform that excels in performance and low-latency operations.

When to consider Redpanda: If you want a high-performance, low-cost implementation that is wire-compatible with Apache Kafka, Redpanda is an excellent choice. Redpanda is implemented in C++ for finer control over the underlying compute and storage infrastructure.

Other popular Confluent alternatives:

- Aiven: Offers a fully hosted Apache Kafka service, delivering high performance and strong consistency guarantees.

- Tibco: Tibco’s offerings focus mainly on data integration, analytics, and event processing, aimed at integrating and analyzing data in real time.

- Strimzi: Strimzi provides Kubernetes operators for running Apache Kafka as a self-managed cluster on Kubernetes.

Frequently Asked Questions

What is Confluent?

Confluent is a software company founded by the creators of Apache Kafka. Apache Kafka is an open-source stream processing software platform that was originally developed by LinkedIn and donated to the Apache Software Foundation. Confluent provides a commercial distribution of Apache Kafka, as well as additional tools and services designed to enhance Kafka’s capabilities.

What is Apache Kafka?

Apache Kafka is an open-source stream-processing software platform. Originally developed by LinkedIn and later donated to the Apache Software Foundation, it’s designed to handle real-time data feeds and is commonly used as a message broker or for building real-time data pipelines.

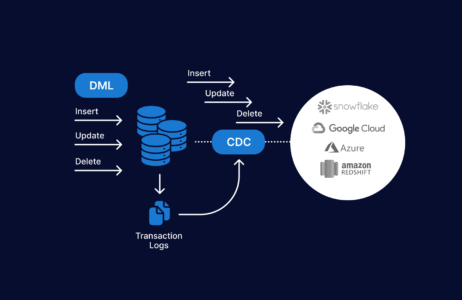

What is Change Data Capture?

Change Data Capture (CDC) is a method that efficiently identifies and captures changes in a data source. It enables you to track data updates without scanning the entire database, making it easier to replicate, sync, and transfer data between systems with near real-time updates. While Confluent has CDC connectors, they are not designed to emit data reliably.
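To make the idea concrete, here is a minimal Python sketch of log-based CDC, one common way to implement it (the text above does not name a specific mechanism). It assumes a hypothetical change log in which each entry carries a log sequence number, an operation, a primary key, and the new row; `ChangeEvent` and `apply_changes` are illustrative names, not part of Confluent, Striim, or any database API. The replica applies only entries newer than its checkpoint, which is why a full table scan is not needed.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional

Row = Dict[str, Any]


@dataclass(frozen=True)
class ChangeEvent:
    """One entry in a hypothetical source-database change log."""
    lsn: int                   # log sequence number: position in the change log
    op: str                    # "insert", "update" or "delete"
    key: Any                   # primary key of the affected row
    row: Optional[Row] = None  # new row image; None for deletes


def apply_changes(replica: Dict[Any, Row], log: List[ChangeEvent], last_lsn: int) -> int:
    """Apply, in log order, only the changes recorded after last_lsn.

    Returns the new checkpoint so the next call resumes where this one stopped.
    A real log reader would seek straight to last_lsn; filtering here keeps
    the sketch short.
    """
    for event in sorted(log, key=lambda e: e.lsn):
        if event.lsn <= last_lsn:
            continue  # already applied in an earlier run
        if event.op in ("insert", "update"):
            replica[event.key] = dict(event.row)  # copy the new row image
        elif event.op == "delete":
            replica.pop(event.key, None)
        else:
            raise ValueError(f"unknown operation: {event.op}")
        last_lsn = event.lsn
    return last_lsn


log = [
    ChangeEvent(1, "insert", 42, {"status": "created"}),
    ChangeEvent(2, "update", 42, {"status": "paid"}),
    ChangeEvent(3, "insert", 43, {"status": "created"}),
    ChangeEvent(4, "delete", 43),
]
replica: Dict[Any, Row] = {}
checkpoint = apply_changes(replica, log, last_lsn=0)
print(replica, checkpoint)  # {42: {'status': 'paid'}} 4

# Later, one new change arrives; only that change is applied.
log.append(ChangeEvent(5, "update", 42, {"status": "shipped"}))
checkpoint = apply_changes(replica, log, last_lsn=checkpoint)
print(replica, checkpoint)  # {42: {'status': 'shipped'}} 5
```

The checkpoint is what lets a CDC pipeline resume after a restart without reprocessing or missing changes; production tools persist it durably rather than in a local variable.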

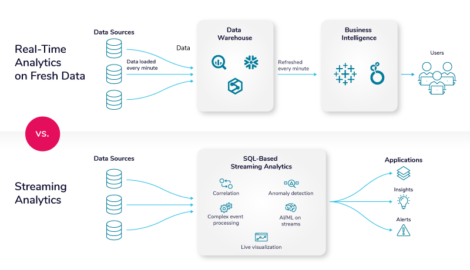

What’s the difference between Data Streaming and Stream Processing?

Data streaming refers to the continuous transfer of data at high speed, often in real time, from a source to a destination. Stream processing, on the other hand, involves analyzing and acting on that data in real time as it’s being transferred. While data streaming can be considered a foundational aspect of real-time data handling, stream processing adds an extra layer by performing computations or transformations on the incoming data stream.
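The distinction can be sketched with Python generators. In this illustrative example (the function names and the moving-average computation are assumptions chosen for brevity), `sensor_stream` only moves events from source to consumer, while `moving_average` acts as a stream processor that computes a result for each event as it passes through.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple


def sensor_stream(readings: Iterable[float]) -> Iterator[float]:
    """Data streaming: hand each event downstream as it arrives, unchanged."""
    for value in readings:
        yield value


def moving_average(stream: Iterable[float], window: int) -> Iterator[Tuple[float, float]]:
    """Stream processing: compute over each event while the stream is flowing.

    Yields (value, average of the last `window` values) for every event.
    """
    recent: Deque[float] = deque(maxlen=window)  # oldest value drops out automatically
    for value in stream:
        recent.append(value)
        yield value, sum(recent) / len(recent)


results = list(moving_average(sensor_stream([10.0, 20.0, 30.0, 40.0]), window=2))
print(results)  # [(10.0, 10.0), (20.0, 15.0), (30.0, 25.0), (40.0, 35.0)]
```

Because both stages are lazy generators, each reading is processed as soon as it is produced rather than after the whole dataset has been collected.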

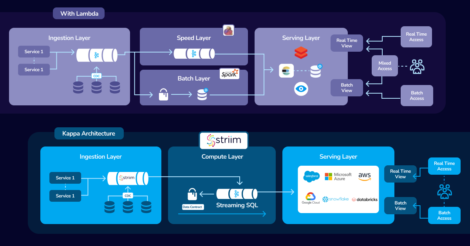

What’s a Kappa Architecture?

Kappa Architecture is a software architecture pattern used primarily for designing real-time data processing systems. Unlike the Lambda Architecture, which involves separate processing paths for real-time and batch data, the Kappa Architecture uses a single processing framework for both. The key components in Kappa Architecture are the immutable log and the stream processing layer. Events are ingested into the immutable log, from which they can be processed, transformed, and moved to serving layers for analytical or other purposes. Because there is only one processing path to build and maintain, this architecture reduces operational complexity as well as processing latency.
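Below is a toy, in-memory Python sketch of the Kappa pattern. It assumes a hypothetical `ImmutableLog` class standing in for a durable log such as a Kafka topic, and uses a single function, `catch_up`, for both live processing and full reprocessing. When the processing logic changes, the serving view is rebuilt by replaying the same log from offset 0 instead of running a separate batch job, which is the key difference from Lambda.

```python
from typing import Callable, Dict, List, Tuple

Event = Tuple[str, str]  # hypothetical event shape: (page, visitor)
View = Dict[str, int]    # serving-layer view: page -> view count


class ImmutableLog:
    """Append-only event log: events are never updated or deleted."""

    def __init__(self) -> None:
        self._events: List[Event] = []

    def append(self, event: Event) -> int:
        self._events.append(event)
        return len(self._events) - 1  # offset of the new event

    def read_from(self, offset: int) -> List[Event]:
        return list(self._events[offset:])


def catch_up(log: ImmutableLog, processor: Callable[[View, Event], None],
             view: View, offset: int) -> int:
    """Process every event from `offset` onward and return the next offset.

    Live processing and full reprocessing both go through this one code path.
    """
    events = log.read_from(offset)
    for event in events:
        processor(view, event)
    return offset + len(events)


def count_views_v1(view: View, event: Event) -> None:
    page, _visitor = event
    view[page] = view.get(page, 0) + 1


def count_views_v2(view: View, event: Event) -> None:
    # Revised logic: ignore hypothetical bot visitors.
    page, visitor = event
    if not visitor.startswith("bot"):
        view[page] = view.get(page, 0) + 1


log = ImmutableLog()
for e in [("home", "alice"), ("pricing", "bob"), ("home", "bot-7"), ("home", "carol")]:
    log.append(e)

live_view: View = {}
next_offset = catch_up(log, count_views_v1, live_view, 0)
print(live_view, next_offset)  # {'home': 3, 'pricing': 1} 4

# The logic changes: replay the same log from offset 0 into a fresh view.
rebuilt_view: View = {}
catch_up(log, count_views_v2, rebuilt_view, 0)
print(rebuilt_view)  # {'home': 2, 'pricing': 1}
```

In a production system the log would be durable and partitioned and the processor would run continuously; the sketch only illustrates the single-path, replay-based design.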

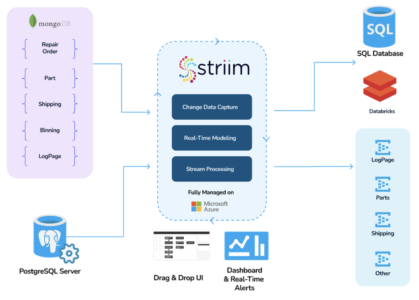

How American Airlines Achieves Operational Perfection Through Data Streaming

American Airlines is on a mission to care for people on their life journey. Serving more than 5,800 flights a day to more than 350 destinations in more than 60 countries requires massive amounts of data streaming in real time to support flight operations.

TechOps deployed a real-time data hub consisting of MongoDB, Striim, Azure, and Databricks to ensure seamless, real-time operations at massive scale. This architecture uses change data capture from MongoDB to get operational data in real time, processes and models the data for downstream usage, and streams it to consumers in real time. The output is data products that TechOps and business teams use to monitor and act on operational data and provide a delightful travel experience to customers.

Learn more about how American Airlines ‘Striims’ their data for operational excellence.

Striim – End-to-end, unified data streaming, analytics and integration

Striim (the two i’s standing for ‘intelligent integration’) is an end-to-end data streaming platform for analytics and operations. It provides a comprehensive solution, allowing developers to build real-time streaming data pipelines quickly and easily. Striim’s cloud-native platform offers a range of features for simplifying data integration, such as automated schema mapping, data transformation, and flow control. You can get started with streaming in minutes using Striim’s free developer edition.

Unlike Confluent, which focuses only on data streaming, Striim offers more than 150 connectors with real-time data delivery guarantees. It also provides powerful visual tools that allow you to develop complete solutions, from collection to delivery of streaming data, and its REST API adds further flexibility. Together, these make it easier to build complex streaming pipelines and take advantage of real-time analytics and operations without having to be a coding expert.

Striim also provides advanced scalability capabilities that enable it to support up to 1000 nodes per cluster and process huge volumes of data in near real time. This makes it ideal for organizations with large datasets or complex streaming needs who want access to the latest analytics insights while still maintaining tight security controls over their data. Additionally, Striim offers comprehensive customer support services so developers can always get help when they need it most.

Overall, Striim is an innovative solution for anyone looking for an end-to-end streaming platform for both analytics and operations that simplifies building real-time pipelines without sacrificing performance or security controls over their data. Whether you’re looking for an alternative to Confluent or just starting your journey into the world of stream processing, Striim could be the perfect fit for your next project.

You can use Striim Developer for free for up to 10 million records per month. Alternatively, you can try Striim Cloud Enterprise with our 2-week trial.