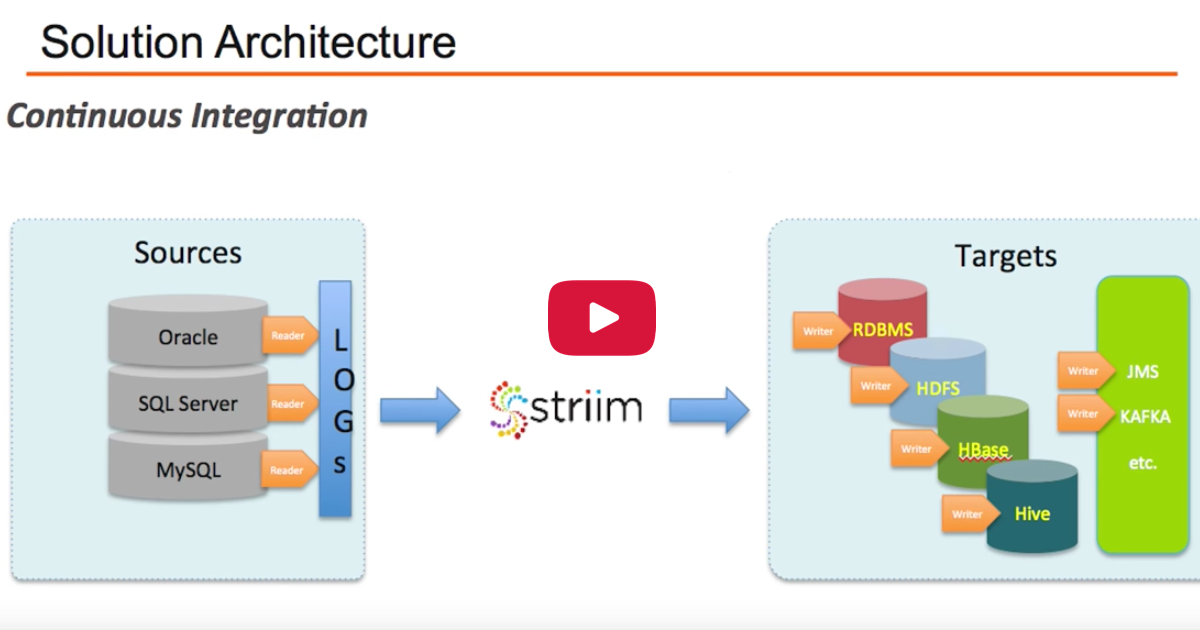

Today, let’s take a look at the Change Data Capture to HBase use case with the Striim platform. What would motivate something like this? People may wish to replicate their operational sources of data row-by-row to Hadoop Data Hub. The solution that Striim offers can apply inserts, updates and deletes through CDC to HBase, Hive or other data targets.

Why Striim? Vendors cannot easily apply inserts, updates and deletes directly to Hadoop services without custom coding. Striim can solve this problem by easily integrating CDC readers for various sources that apply data to targets. It is repeatable and very easy to create a new application and operate it. This video will show you how the Striim platform can easily accomplish Change Data Capture to HBase.

I have prepared numerous tables on Microsoft SQL Server; so through remote desktop connection, I am connecting to my SQL Server Management Studio. I have two tables that I will use for the demo. One table has the schema. My key is an integer and my value is a string, and the other table has two integers: my key and my int value. Again, my key is the primary key for both. Now on the HBase side, if I list my tables, you can see I have two. I’ll be working with Table One through the schema-less approach where I can replicate both tables on SQL Server to the same table on HBase. If I scan the table right now, I will see that the table contains about 10 values with different garbage values stored.

Next, I would like to show you the user interface for the Change Data Capture to HBase use case. There are two parts to Striim application. First, with the flow management, you can easily drag, drop, and create the sources and the targets and then define your transformations. So in this case, we’re working with SQL Server source. We are looking at two tables listed here, separated by a semicolon, along with the database credentials. We are currently working with HBase target through HBase Writer. We separately have the monitor flow that allows us to power the user interface. So we work with the dashboard that can list all metadata about the activity on the source tables, and also look at the activity by operation. It also lists high transaction lags – anything that fits the threshold profile, for example, anything longer than six seconds.

To get started, I will drive a couple of manual operations. Now on the target table, we don’t have Key 52. I will insert Key 52 to the SQL Server table. Then, when I scan the HBase table, I will see it on the other side and I can update it. I will rescan the table again. Since I applied the value for 52 that I appended, that may delete the role for this key. I will rescan again and you can see that in this row, value 52 is gone.

Finally, to show that we can also deal with data at higher volumes through my custom reader, I am going to apply 1,000 rows to my target table. I have sent deletes, and then 1,000 inserts and 1,000 updates. I have now completed over 2,010 operations in less than a second. Now if I rescan the table, I will see that all 1,000 rows have been applied.

For more information on Change Data Capture to HBase, please visit our Change Data Capture or Hadoop Integration solution pages, contact us to schedule a demo, or download the Striim platform and try it for yourself.