Ninety percent of the data generated daily is unstructured and almost meaningless to organizations. The continuous increase in the volume of data is a potential gold mine for businesses. Like gold, data has to be carefully mined and refined, or in this case, extracted and transformed to get the most value.

Businesses receive tremendous volumes of data from various sources to make better decisions. But raw data can be complex, challenging, and almost meaningless to the decision-makers in an organization. By transforming data, businesses can maximize the value of their data and use it to make better-informed strategic decisions.

In this post, we’ll share an overview of data transformation, how it works, its benefits and challenges, and different data transformation approaches.

- What is Data Transformation?

- Why Transform Data?

- How Data Transformation Works

- Data Transformation Methods

- Data Transformation Use Cases

- Data Transformation Challenges

- Data Transformation Best Practices

- Use Data Transformation Tools Over Custom Coding

What is Data Transformation?

Data transformation is the process of converting data from a complex form to a more straightforward, usable format. Depending on the desired result, it can involve actions such as cleaning data, changing data types, deleting duplicate data, integrating data, and replicating data.

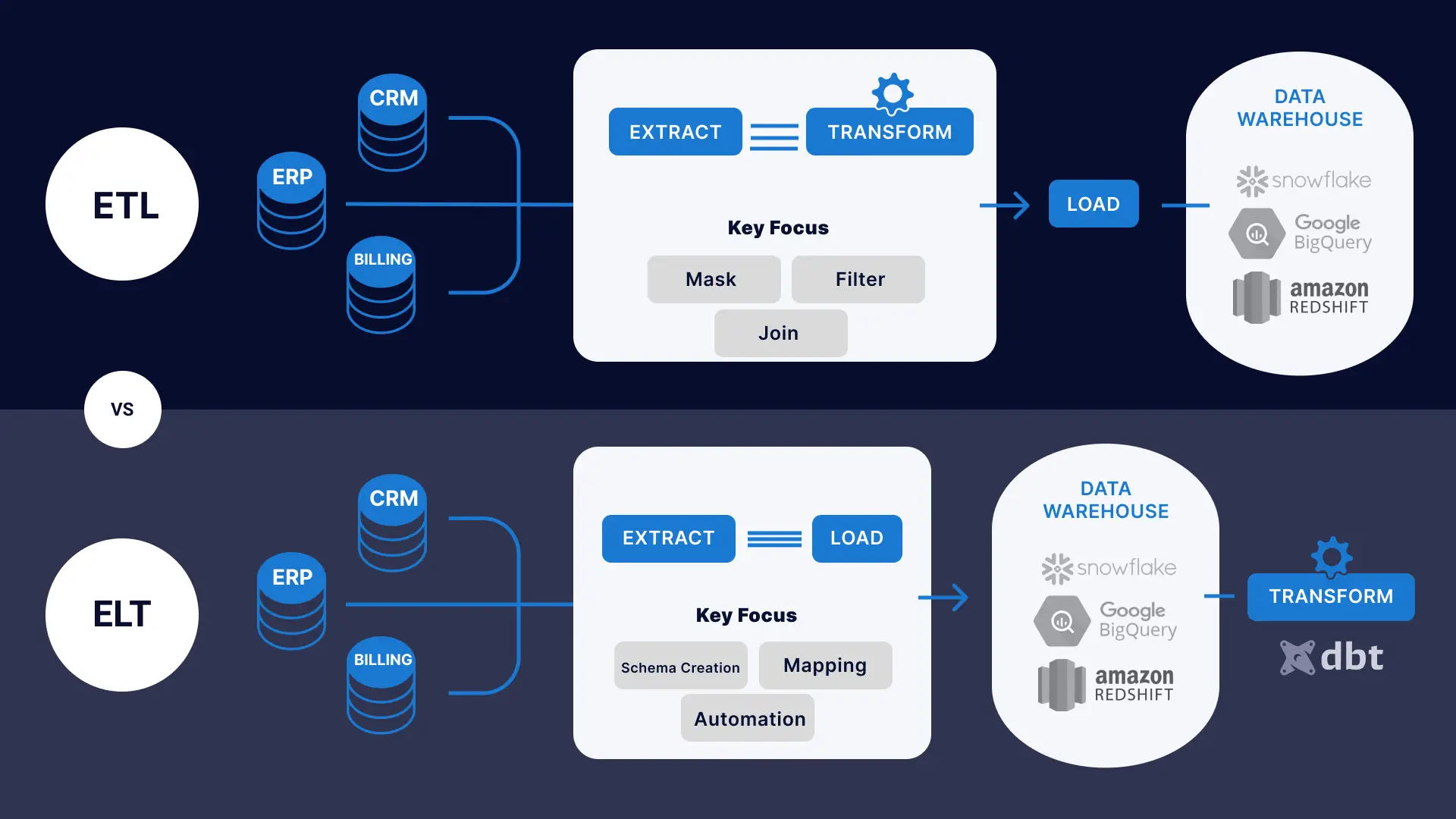

Data transformation is an integral part of any data pipeline including ETL (Extract, Transform, and Load) and ELT (Extract, Load, Transform) pipelines. ETL involves extracting data from multiple sources, transforming it into a more intelligent structure, and loading or storing it in a data warehouse. In contrast, ELT shifts the bulk of the transformations to the destination data warehouse.

Data transformation is essential to standardize data quality across an organization and create consistency in the type of data shared between systems in the organization.

Why Transform Data? (Benefits)

With data transformation, businesses can increase efficiencies, make better decisions, generate more revenue, and gain many other benefits, including:

- Higher data quality: Businesses are very concerned with data quality because it is crucial for making accurate decisions. Data transformation activities like removing duplicate data and deleting null values can reduce inconsistencies and improve data quality.

- Improved data management: Organizations rely on data transformation to handle the tremendous amounts of data generated from emerging technologies and new applications. By transforming data, organizations can simplify their data management and reduce the dreaded feeling of information overload.

- Seamless data integration: It is normal for a business to run on more than one technology or software system. Some of these systems need to transfer data between one another. With data transformation, the data sent can be converted into a usable format for the receiving system, making data integration a seamless process.

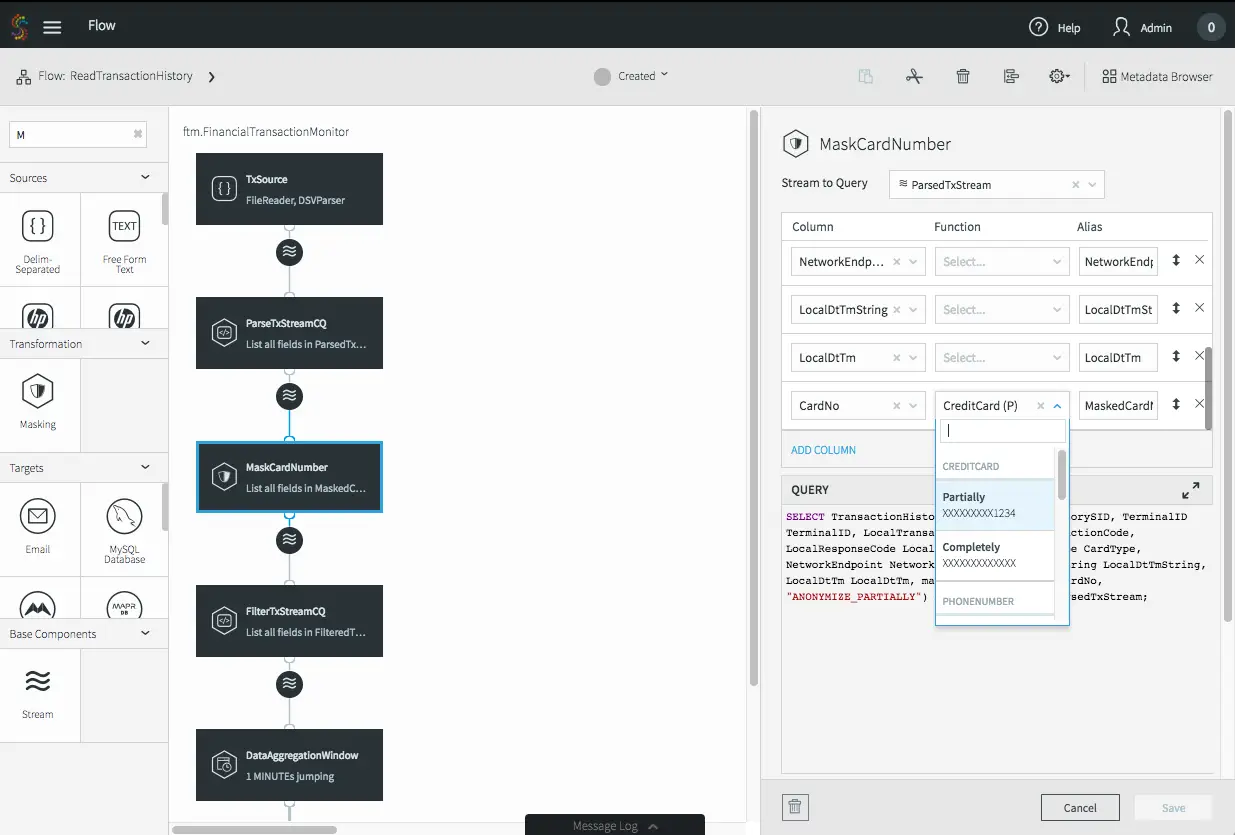

- Obfuscation of sensitive data: To comply with GDPR, HIPAA, and other regulations, companies need to be able to mask or remove sensitive information, such as personally identifiable information (PII) or credit card numbers, when transferring data from one system to another.
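To make the masking benefit concrete, here is a minimal sketch that pseudonymizes an email address with a salted hash and keeps only the last four digits of a card number before a record is sent to another system. The record layout, the field names, and the helper functions are all hypothetical, and a salted hash on its own should not be read as sufficient for GDPR or HIPAA compliance.

```python
import hashlib


def mask_card_number(card_number):
    """Replace all but the last four digits with asterisks."""
    digits = [ch for ch in card_number if ch.isdigit()]
    hidden = max(len(digits) - 4, 0)
    return "*" * hidden + "".join(digits[-4:])


def pseudonymize(value, salt):
    """Return a salted SHA-256 hex digest in place of the raw value."""
    return hashlib.sha256((salt + value).encode("utf-8")).hexdigest()


def mask_record(record, salt):
    """Return a copy of the record with the sensitive fields masked."""
    masked = dict(record)
    masked["email"] = pseudonymize(record["email"], salt)
    masked["card_number"] = mask_card_number(record["card_number"])
    return masked


# Hypothetical source record; the field names are assumptions for illustration.
record = {
    "customer_id": 42,
    "email": "jane@example.com",
    "card_number": "4111 1111 1111 1111",
}
masked = mask_record(record, salt="example-salt")
print(masked["card_number"])
```

Because the same salt always produces the same digest, the masked email can still be used as a join key downstream without exposing the original address.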

How Data Transformation Works

When data is extracted from the source, it is raw and nearly impossible to use. The data transformation process involves identifying the data, structuring it, and generating a workflow that can be executed to convert the data. Sometimes, it is mandatory to clean the data first for easy identification and mapping.

The steps to transform raw data into an intelligent format include:

- Identify the data: This is the data discovery phase, and it involves identifying and understanding the data in its source/extracted format. Data discovery is usually best accomplished with the help of a data profiling tool. At this stage, you form an idea of what needs to be done to get the data into the desired format.

- Structure the data: This is the data mapping stage where the actual transformation process is planned. Here, you define how the fields in the data are connected and the type of transformation they will need. This stage is also where you consider if there would be any loss of data in the transformation process. For example, if we have a simple Excel spreadsheet with a date column in an incorrect format, we would want to make a ‘mapping’ to determine the type of transformation needed to change the date to a correct format.

- Generate a workflow: For transformation to occur, a workflow or code needs to be generated. You can write your own custom code or use a data transformation tool. Python and R are the most common languages for custom code, but it can be written in any language, including an Excel macro, depending on the transformation needs. When developing a transformation workflow, consider factors like scalability (will the transformation needs change over time?) and usability (will other people need this workflow?).

- Execute the workflow: Here, data is restructured and converted to the desired format.

- Verify the data: After transformation, it is best to check if the output is in the expected format. If it isn’t, review the generated workflow, make necessary changes, and try again.
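The steps above can be sketched in a few lines of Python. This is only an illustration of the mapping, execution, and verification stages, using the date-format example from the mapping step; the sample rows, field names, and source date format (MM/DD/YYYY) are assumptions, not part of any particular tool.

```python
from datetime import datetime

# Identify: hypothetical extracted rows where every value is still a string
# and dates arrive as MM/DD/YYYY.
raw_rows = [
    {"order_id": "1001", "order_date": "07/30/2021"},
    {"order_id": "1002", "order_date": "07/29/2021"},
]


def to_iso_date(value):
    """Convert an MM/DD/YYYY string to an ISO 8601 date string."""
    return datetime.strptime(value, "%m/%d/%Y").date().isoformat()


# Structure: the mapping records which conversion each field needs.
mapping = {
    "order_id": int,
    "order_date": to_iso_date,
}


def run_workflow(rows, mapping):
    """Execute: apply the mapped conversion to every field of every row."""
    return [
        {field: convert(row[field]) for field, convert in mapping.items()}
        for row in rows
    ]


def verify(rows):
    """Verify: raise ValueError if any row is not in the expected format."""
    for row in rows:
        if not isinstance(row["order_id"], int):
            raise ValueError(f"order_id is not an integer: {row!r}")
        # strptime raises ValueError if the date is not YYYY-MM-DD.
        datetime.strptime(row["order_date"], "%Y-%m-%d")
    return True


transformed = run_workflow(raw_rows, mapping)
verify(transformed)
print(transformed)
```

Keeping the mapping as data, separate from the code that executes it, makes it easier to review with business users and to change when the transformation needs evolve.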

Data Transformation Methods

Organizations can transform data in different ways to better understand their operations. Data aggregation, data filtering, and data integration, for example, are all forms of data transformation, and each can be carried out with any of the following approaches:

Transformation with scripting

This type of transformation involves manually coding the data transformation process from start to finish in Python, R, SQL, or any other language. It is an excellent approach for customization but often results in unintentional errors and misunderstandings as developers sometimes fail to interpret the exact requirements in their custom-coded solutions.

Transformation with on-site ETL tools

These tools work through on-site servers to extract, transform, and load information into an on-site data warehouse. However, an on-site transformation solution can be expensive to set up and manage as data volume increases, so big data companies have moved to more advanced cloud-based ETL tools.

Transformation with cloud-based ETL tools

Cloud-based ETL tools have simplified the process of data transformation. Instead of working on an on-site server, they work through the cloud. In addition, these tools make it easier to link cloud-based platforms with any cloud-based data warehouse.

Transformations within a data warehouse

Unlike ETL, where data is transformed before it’s loaded into a destination, ELT shifts the transformations to the data warehouse. dbt (data build tool) is a popular development framework that empowers data analysts to transform data using familiar SQL statements in their data warehouse of choice.
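As a rough sketch of the ELT pattern, the example below loads raw rows untouched and then runs the transformation as a SQL statement inside the database. An in-memory SQLite database stands in for the data warehouse here, and the table and column names are hypothetical; a real project would typically express the same `SELECT` as a model in a tool such as dbt.

```python
import sqlite3

# SQLite in memory stands in for the warehouse (an assumption for illustration).
conn = sqlite3.connect(":memory:")

# Load: raw data lands as-is, with amounts still stored as text like "$100".
conn.execute(
    "CREATE TABLE raw_invoices (customer TEXT, amount_text TEXT, invoice_date TEXT)"
)
conn.executemany(
    "INSERT INTO raw_invoices VALUES (?, ?, ?)",
    [
        ("ACME", "$100", "2021-07-30"),
        ("MyShop", "$200", "2021-07-29"),
    ],
)

# Transform: the cleanup runs inside the warehouse using plain SQL.
conn.execute(
    """
    CREATE TABLE invoices AS
    SELECT customer,
           CAST(REPLACE(amount_text, '$', '') AS REAL) AS amount,
           DATE(invoice_date) AS invoice_date
    FROM raw_invoices
    """
)

rows = conn.execute(
    "SELECT customer, amount, invoice_date FROM invoices ORDER BY customer"
).fetchall()
print(rows)
```

The raw table is left in place, so the transformation can be rerun or revised later without extracting the data again.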

Data Transformation Use Cases

Data Preparation and Cleaning for ELT Pipelines

In ELT, users load data into the data warehouse (DW) in its raw form and apply transformations in the DW layer using stored procedures and tools like dbt. However, the raw data can have many issues that prevent it from being actionable.

Data Transformation can address the following issues:

- The raw data may have lookup IDs instead of human-readable names, for example like this:

| User ID | Page Viewed | Timestamp |
| --- | --- | --- |
| 12309841234 | Homepage | 3:00 pm UTC July 30, 2021 |
| 12309841235 | Request a demo | 4:00 pm UTC July 30, 2021 |

Instead of this:

| Username | Page Viewed | Timestamp |
| --- | --- | --- |
| Jane Strome | Homepage | 3:00 pm UTC July 30, 2021 |
| John Smith | Request a demo | 4:00 pm UTC July 30, 2021 |

- De-duplication of redundant records

- Removing null or invalid records that would break reporting queries

- Transforming your data to fit machine learning training models
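The first three issues in this list can be handled in a single cleaning pass. The sketch below is one possible approach, not a prescribed one: it resolves user IDs to names, skips records with a null or unknown ID, and drops exact duplicates. The `users` lookup table, the event field names, and the ISO timestamps are assumptions made for the example.

```python
# Hypothetical lookup table mapping user IDs to human-readable names.
users = {
    12309841234: "Jane Strome",
    12309841235: "John Smith",
}

# Hypothetical raw page-view events, including one duplicate and one null ID.
raw_events = [
    {"user_id": 12309841234, "page": "Homepage", "timestamp": "2021-07-30T15:00:00Z"},
    {"user_id": 12309841234, "page": "Homepage", "timestamp": "2021-07-30T15:00:00Z"},
    {"user_id": 12309841235, "page": "Request a demo", "timestamp": "2021-07-30T16:00:00Z"},
    {"user_id": None, "page": "Homepage", "timestamp": "2021-07-30T17:00:00Z"},
]


def clean_events(events, users):
    """Resolve IDs to names, drop null/unknown IDs, and remove duplicates."""
    seen = set()
    cleaned = []
    for event in events:
        user_id = event["user_id"]
        # Null or unknown IDs would break reporting queries, so skip them.
        if user_id is None or user_id not in users:
            continue
        key = (user_id, event["page"], event["timestamp"])
        # An identical (user, page, timestamp) triple is treated as a duplicate.
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(
            {
                "username": users[user_id],
                "page": event["page"],
                "timestamp": event["timestamp"],
            }
        )
    return cleaned


cleaned_events = clean_events(raw_events, users)
print(cleaned_events)
```

What counts as a duplicate is a business decision; here it is an exact match on all three fields, which may be too strict or too loose for a given data set.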

Business Data Transformations for Operational Analytics

Business and analytical groups may have some north star metrics that can only be tracked by correlating multiple data sets. Data transformation jobs can join data across multiple data sets and aggregate it to create a unified view of this data. Examples of this include:

- Joining unique customer records across multiple tables – your customer Jane Smith exists in your CRM, your support ticketing system, your website analytics system (e.g. Google Analytics), and your invoicing system. You can write a transform to create a unified view of all her sales and marketing touchpoints and support tickets to get a 360-degree view of her customer activity.

- Creating aggregate values from raw data – for instance, you can take all your invoices and transform them to build a table of monthly revenue categorized by location or industry.

- Creating analytical tables – your data analysts may be able to write complex queries to get answers to simple questions. Alternatively, your data engineering team can make life easier for them by pre-creating analytical tables. That makes generating a new report as easy as writing a ‘select * from x limit 100’, and it drives down compute costs in your warehouse. For example:

Sales Data Table:

| Customer | Invoice Amount | Invoice Date |
| --- | --- | --- |
| ACME | $100 | 3:00 pm UTC July 30, 2021 |
| MyShop | $200 | 1:00 pm UTC July 29, 2021 |

And Monthly Sales (analytical table):

| Month | Invoice Amount |
| --- | --- |
| July | $500 |
| August | $200 |
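A pre-built analytical table like Monthly Sales is just an aggregation over the invoice rows. The sketch below shows one way to compute it; the invoice list is made-up sample data (so its totals differ from the illustrative table above), and the `monthly_sales` helper is hypothetical.

```python
from collections import defaultdict
from datetime import date

# Made-up invoices for illustration; amounts are in whole dollars.
invoices = [
    {"customer": "ACME", "amount": 100, "invoice_date": date(2021, 7, 30)},
    {"customer": "MyShop", "amount": 200, "invoice_date": date(2021, 7, 29)},
    {"customer": "ACME", "amount": 200, "invoice_date": date(2021, 8, 2)},
]


def monthly_sales(invoices):
    """Sum invoice amounts per calendar month, keyed as YYYY-MM."""
    totals = defaultdict(int)
    for invoice in invoices:
        month = invoice["invoice_date"].strftime("%Y-%m")
        totals[month] += invoice["amount"]
    # Sort by month so the output reads chronologically.
    return dict(sorted(totals.items()))


print(monthly_sales(invoices))
```

Keying on year and month rather than the month name alone keeps July 2021 and July 2022 from being merged as the data grows.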

Data Transformation Challenges

Data transformation has many benefits to an organization, but it is also important to note that certain hurdles make data transformation difficult.

Data transformation can be a costly process. ETL tools come at a price, and training staff on data management processes is not exactly a walk in the park either. The cost of data transformation depends on infrastructure size. Extensive infrastructure will require hiring a team of data experts to oversee the transformation process.

To transform data effectively, an organization would have to set up tools, train its current employees, and/or hire a new set of experts to oversee the process. Either way, it is going to cost time and resources to make that happen.

Data Transformation Best Practices

The data transformation process seems like an easy step-by-step workflow, but there are certain things to keep in mind to avoid running into blockers or carrying out the wrong type of data transformation. The following are data transformation best practices:

- Start by designing the target format. Jumping right into the nitty-gritty of data transformation without understanding the end goal is not a good idea. Communicate with business users to understand the process you are trying to analyze and design the target format before transforming data into insights.

- Profile the data. In other words, get to know the data in its native form before converting it. It helps to understand the state of the raw data and the type of transformation required. Data profiling enables you to know the amount of work required and the workflow to generate for transformation.

- Cleanse before transforming. Data cleaning is an essential pre-transformation step that reduces the risk of errors in the transformed data. Data can have missing values or information that is irrelevant to the desired format. Cleansing your data first increases the accuracy of the transformed data.

- Audit the data transformation process. At every stage of the transformation process, track the data and changes that occur. Auditing the data transformation process makes it easier to identify the problem source if complications arise.
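Two of these practices, profiling and auditing, lend themselves to a short illustration. The sketch below counts missing and distinct values per column, then runs a list of transformation steps while recording row counts before and after each one. The sample rows, step names, and helper functions are all hypothetical, and dedicated profiling tools report far more than this.

```python
def profile(rows):
    """Report missing and distinct value counts for each column."""
    columns = sorted({key for row in rows for key in row})
    report = {}
    for column in columns:
        values = [row.get(column) for row in rows]
        present = [value for value in values if value not in (None, "")]
        report[column] = {
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
        }
    return report


def drop_missing_country(rows):
    """Cleansing step: remove rows with no country value."""
    return [row for row in rows if row["country"]]


def deduplicate(rows):
    """Cleansing step: keep the first occurrence of each identical row."""
    seen = set()
    unique = []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def run_with_audit(rows, steps):
    """Run each named step and log the row counts going in and out."""
    audit_log = []
    for name, step in steps:
        rows_in = len(rows)
        rows = step(rows)
        audit_log.append({"step": name, "rows_in": rows_in, "rows_out": len(rows)})
    return rows, audit_log


# Made-up sample data with one missing value and one duplicate row.
sample = [
    {"customer": "ACME", "country": "US"},
    {"customer": "MyShop", "country": ""},
    {"customer": "ACME", "country": "US"},
]

print(profile(sample))
result, audit_log = run_with_audit(
    sample,
    [("drop_missing_country", drop_missing_country), ("deduplicate", deduplicate)],
)
print(audit_log)
```

If a downstream report looks wrong, the audit log shows which step changed the row count, which narrows down where to look.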

Use Data Transformation Tools Over Custom Coding

Data transformation tools are more cost-effective and more efficient than custom coding. Writing code for data transformation functions carries a higher risk of inefficiencies, human error, and excessive use of time and resources.

Data transformation tools are usually designed to execute the entire ETL process. If you are not using tools for the transformation process, you’ll also need to worry about the “extraction” and “loading” steps. Custom coding allows for a fully customized data transformation solution, but as data sources, volumes, and other complexities increase, scaling and managing this becomes increasingly difficult.

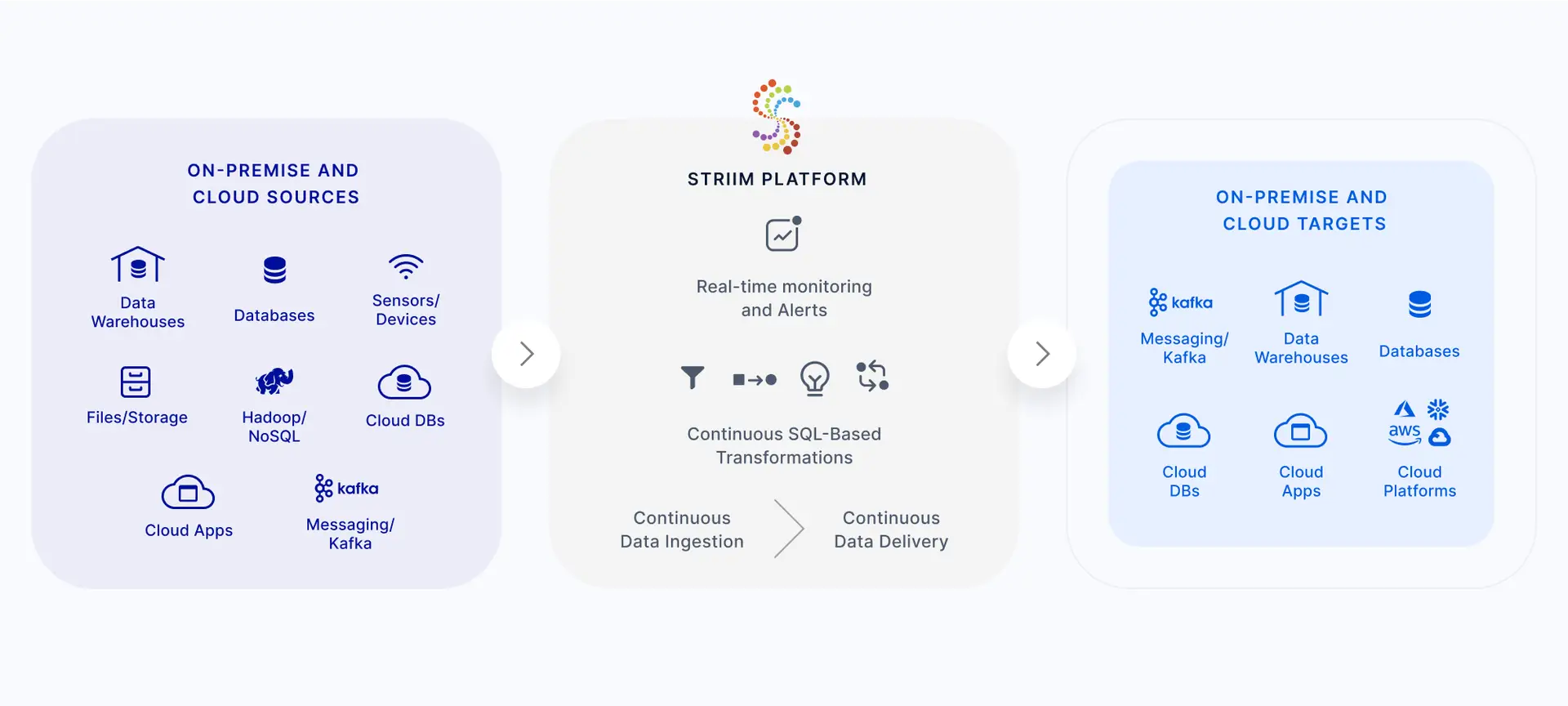

Striim is an end-to-end data integration solution that offers scalable in-memory transformations, enrichment and analysis, using high-speed SQL queries. To get a personalized walkthrough of Striim’s real-time data transformation capabilities, please request a demo. Alternatively, you can try Striim for free.