Change Data Capture (CDC) is a data integration process that identifies and tracks changes to data in a source database. These changes are then delivered in real time to a target system, such as a data warehouse or data lake.

‘Next-day data’ is obsolete data. In a world that runs on instant personalization and intelligent operations, relying on data that’s hours or days old is no longer a viable strategy.

Take batched data. Batch-based ETL (Extract, Transform, Load) processes, which run on schedules, introduce significant latency. By the time data is extracted, processed, and loaded into a target system, it’s already hours or even days old. This inherent delay creates a gap between when an event happens and when you can act on it, making it impossible for organizations to react in the moments that matter.

Enter Change Data Capture (CDC), which has emerged as an ideal solution for near real-time movement of data from relational databases (like SQL Server or Oracle) to data warehouses, data lakes, or other databases.

In this post, we’ll explore what Change Data Capture is, how it works, the different types of CDC methodology, and its key use cases and benefits. You’ll end with an understanding of how to make a success of your CDC strategy, which tools to use, and how CDC can drive real-time, trusted, unified data for your mission-critical applications. Let’s dig in.

What is Change Data Capture (CDC)?

Change Data Capture is a software process that identifies and tracks changes to data in a database. CDC provides real-time or near-real-time data movement by capturing and processing changes continuously as new database events occur.

In a fast-paced organization, waiting for batched data isn’t just inefficient—it’s a competitive disadvantage. CDC solves this by eliminating the latency inherent in traditional data movement, ensuring that analytics, applications, and cloud platforms are always powered by the freshest data available. Beyond just copying data, CDC enables a continuous, low-impact flow of information that keeps disparate systems perfectly in sync.

Known for its efficiency and timeliness, CDC has become a foundational technology for real-time data architectures. It is the engine behind modern streaming data pipelines, enabling critical initiatives like zero-downtime cloud migrations, real-time analytics, and the continuous feeding of data lakes and lakehouses.

How Does Change Data Capture Work?

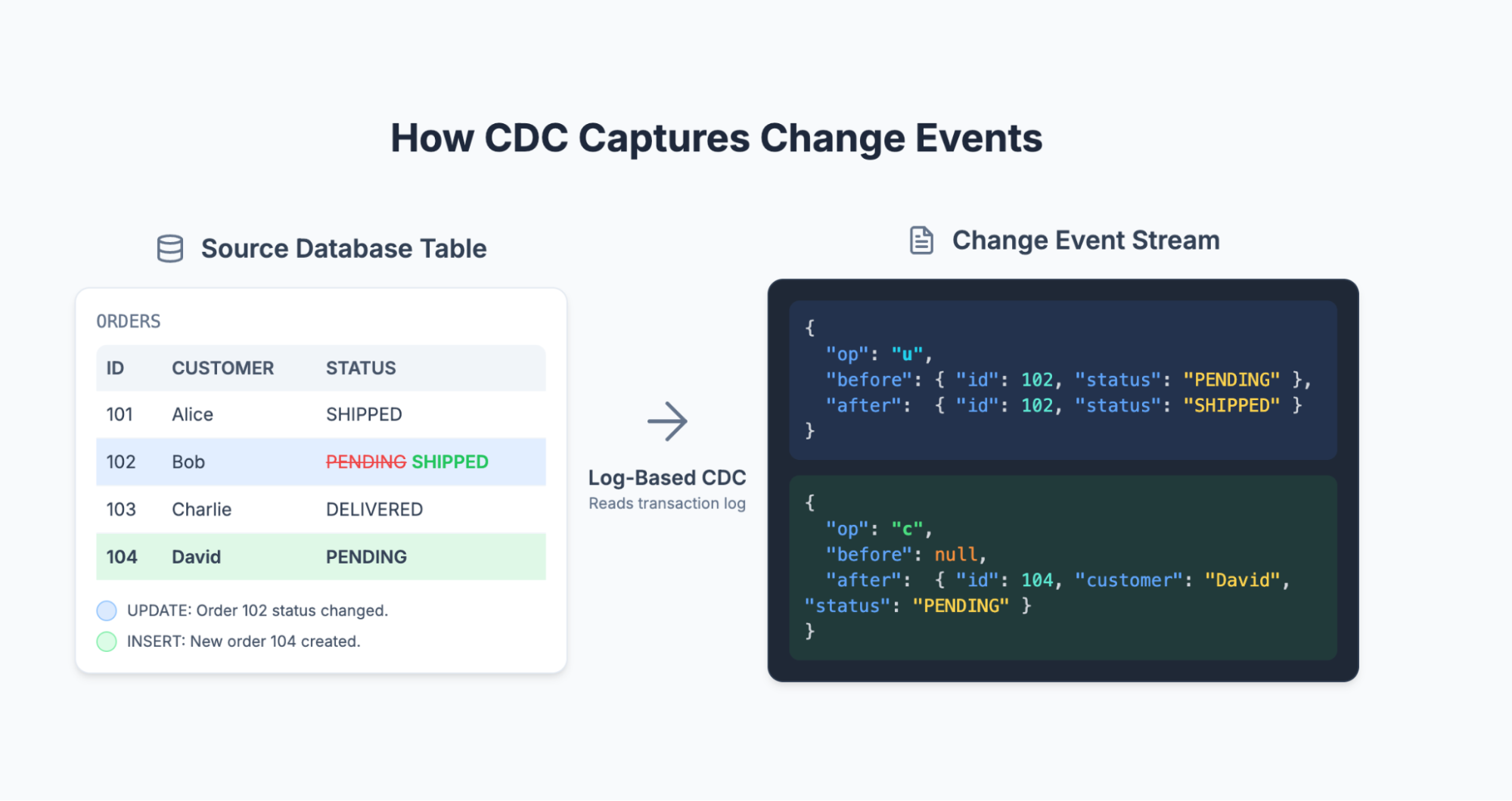

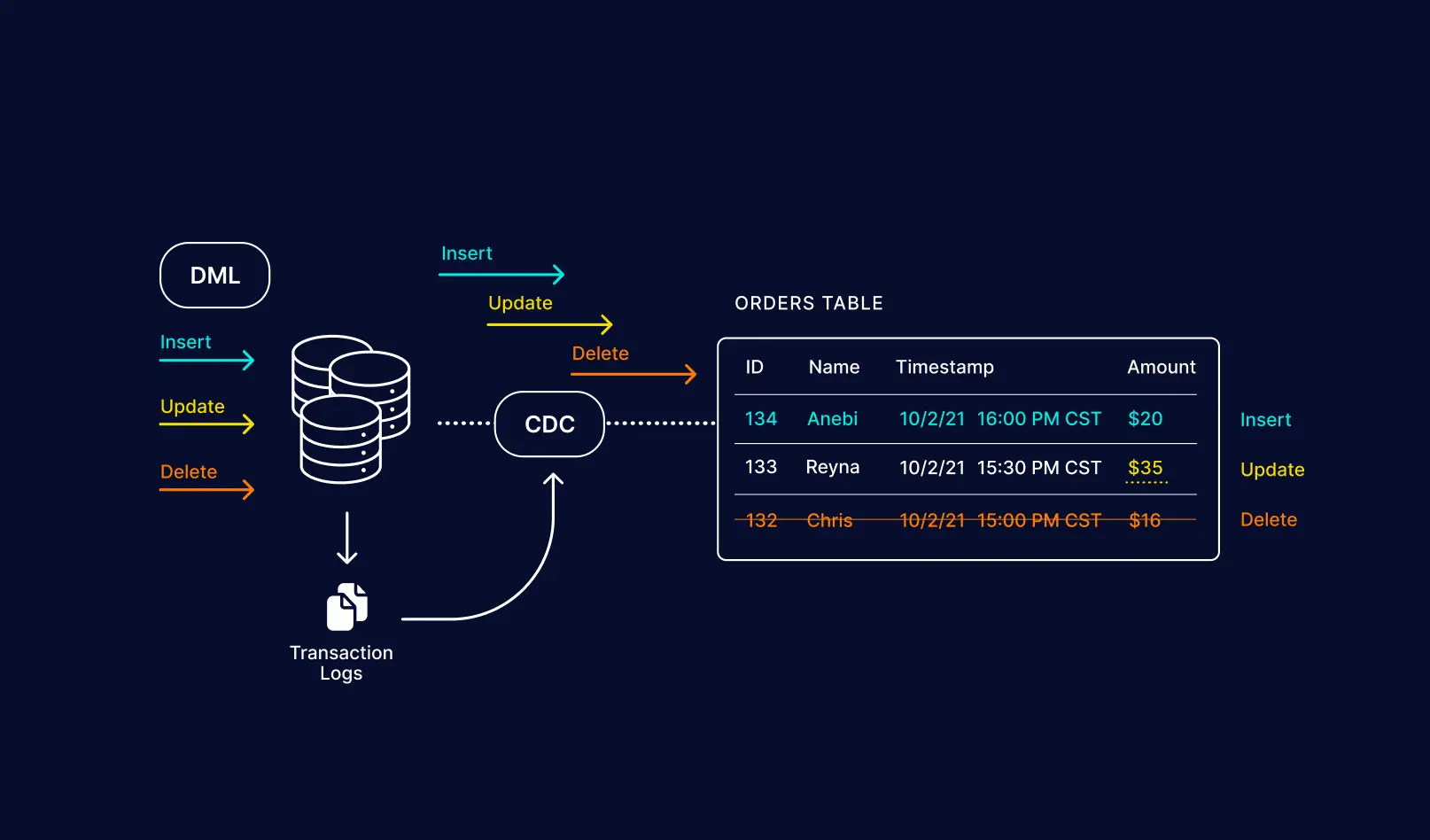

At its core, Change Data Capture works by continuously monitoring a database for changes, capturing those changes in real time, and delivering them as a stream of events to downstream systems or platforms. While the concept is straightforward, implementation can be technically complex. For instance, the method used to detect and capture changes has significant implications for performance, reliability, and impact on the source system.

Every time a user or application performs an action, the database records it as an insert, update, or delete event. CDC techniques hook into the database to detect these changes as they happen. The most common methods include querying tables for timestamp changes, using database triggers that fire on a change, or reading directly from the database’s transaction log. Each approach comes with trade-offs in terms of performance impact, latency, and implementation complexity, which we’ll explore in more detail later.
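To make the three event types concrete, here is a minimal Python sketch of what a captured change event might carry. The `ChangeEvent` envelope, its field names, and the `describe` helper are hypothetical illustrations, not the format of any particular database or CDC product. Many real tools carry similar information: the operation, the row image before and/or after the change, and a source position used for ordering.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Any, Optional


class Op(Enum):
    INSERT = "insert"
    UPDATE = "update"
    DELETE = "delete"


@dataclass(frozen=True)
class ChangeEvent:
    """One captured row change (hypothetical envelope, not a specific product's format)."""
    table: str
    op: Op
    key: Any                          # primary key of the affected row
    before: Optional[dict[str, Any]]  # row image before the change; None for inserts
    after: Optional[dict[str, Any]]   # row image after the change; None for deletes
    position: int                     # source position (e.g., a log sequence number) for ordering


def describe(event: ChangeEvent) -> str:
    """Render an event as a one-line, human-readable summary."""
    if event.op is Op.INSERT:
        return f"{event.table}[{event.key}] inserted: {event.after}"
    if event.op is Op.UPDATE:
        return f"{event.table}[{event.key}] updated: {event.before} -> {event.after}"
    return f"{event.table}[{event.key}] deleted: {event.before}"


event = ChangeEvent("orders", Op.UPDATE, 42, {"status": "new"}, {"status": "paid"}, 1007)
print(describe(event))  # orders[42] updated: {'status': 'new'} -> {'status': 'paid'}
```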

What Happens After Change Capture?

Once a change event is captured, it isn’t just sent to a new destination. Modern CDC pipelines stream these events to targets like cloud data warehouses (Redshift, Snowflake, BigQuery), data lakes, streaming platforms, and lakehouses (Databricks). More importantly, the data can be processed in-stream before it ever lands in the target. This enables you to filter out irrelevant data, enrich events with additional context, or transform data into the exact format a downstream application needs—all with sub-second latency. As a result, the data arriving at its destination is not only fresh but also immediately usable.
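The sketch below, under simplifying assumptions, chains filter, enrich, and transform steps as Python generators so each event is processed as it arrives rather than in a batch. The event shape, the `orders` table, the `CUSTOMER_REGION` lookup, and the cents-to-units conversion are all hypothetical.

```python
from typing import Iterable, Iterator

# Hypothetical reference data used for enrichment (e.g., cached from a CRM).
CUSTOMER_REGION = {101: "EMEA", 102: "APAC"}


def filter_events(events: Iterable[dict]) -> Iterator[dict]:
    """Drop events the target does not need; here, anything outside the 'orders' table."""
    for e in events:
        if e["table"] == "orders":
            yield e


def enrich(events: Iterable[dict]) -> Iterator[dict]:
    """Attach context from reference data without querying the source database."""
    for e in events:
        row = e["after"] or {}
        yield {**e, "region": CUSTOMER_REGION.get(row.get("customer_id"), "UNKNOWN")}


def transform(events: Iterable[dict]) -> Iterator[dict]:
    """Reshape into the flat record the target expects, converting cents to currency units."""
    for e in events:
        row = e["after"] or {}
        yield {
            "op": e["op"],
            "order_id": e["key"],
            "amount": row.get("amount_cents", 0) / 100,
            "region": e["region"],
        }


stream = [
    {"table": "orders", "op": "insert", "key": 1, "after": {"customer_id": 101, "amount_cents": 2599}},
    {"table": "sessions", "op": "insert", "key": 9, "after": {"user": "x"}},
    {"table": "orders", "op": "update", "key": 2, "after": {"customer_id": 999, "amount_cents": 500}},
]
for record in transform(enrich(filter_events(stream))):
    print(record)
```

Because generators are lazy, each event flows through all three stages before the next one is read, which is what keeps per-event latency low.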

Types of CDC Methods

Change Data Capture isn’t a one-size-fits-all solution. The way CDC is implemented will look different for each company, according to their individual use cases and aspirations. Each method comes with its own trade-offs around performance, complexity, and the strain it places on your source systems. Understanding these differences is key to choosing the best approach for your organization’s needs.

Audit Columns

By using existing “LAST_UPDATED” or “DATE_MODIFIED” columns, or by adding them if not available in the application, you can create your own change data capture solution at the application level. This approach retrieves only the rows that have been changed since the data was last extracted.

The CDC logic for the technique would be:

Step 1: Get the maximum values of the target (blue) table’s ‘Created_Time’ and ‘Updated_Time’ columns.

Step 2: Select all rows from the source table with a ‘Created_Time’ greater than (>) the target table’s maximum ‘Created_Time’. These are the rows created since the last CDC process was executed.

Step 3: Select all rows from the source table with an ‘Updated_Time’ greater than (>) the target table’s maximum ‘Updated_Time’ and a ‘Created_Time’ less than or equal to (<=) the target table’s maximum ‘Created_Time’. Rows created after that maximum are excluded because step 2 already selected them.

Step 4: Insert the new rows from step 2 and update the existing rows from step 3 in the target.
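A minimal sketch of steps 1 to 4, using Python's built-in `sqlite3` module. The `customers` table, its columns, and ISO-8601 text timestamps (which sort correctly as strings) are assumptions for illustration.

```python
import sqlite3

COLUMNS = "id, name, created_time, updated_time"


def audit_column_cdc(src: sqlite3.Connection, tgt: sqlite3.Connection) -> tuple[int, int]:
    """Copy new and updated rows from src to tgt; return (inserted, updated) counts."""
    # Step 1: high-water marks from the target ('' when the target is empty).
    max_created, max_updated = tgt.execute(
        "SELECT COALESCE(MAX(created_time), ''), COALESCE(MAX(updated_time), '') FROM customers"
    ).fetchone()
    # Step 2: rows created since the last run.
    new_rows = src.execute(
        f"SELECT {COLUMNS} FROM customers WHERE created_time > ?", (max_created,)
    ).fetchall()
    # Step 3: rows that already exist in the target but were updated since the last run.
    changed_rows = src.execute(
        f"SELECT {COLUMNS} FROM customers WHERE updated_time > ? AND created_time <= ?",
        (max_updated, max_created),
    ).fetchall()
    # Step 4: insert the new rows and update the changed ones.
    tgt.executemany("INSERT INTO customers VALUES (?, ?, ?, ?)", new_rows)
    tgt.executemany(
        "UPDATE customers SET name = ?, created_time = ?, updated_time = ? WHERE id = ?",
        [(name, created, updated, row_id) for (row_id, name, created, updated) in changed_rows],
    )
    tgt.commit()
    return len(new_rows), len(changed_rows)
```

Note what the sketch cannot do: a row deleted from the source never appears in either query, and the strict `>` comparison misses a row whose timestamp equals the previous high-water mark but was committed after the last run.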

Pros of this method:

- It can be built with native application logic

- It doesn’t require any external tooling

Cons of this method:

- Adds overhead to the database

- Deletes are not propagated to the target unless additional scripts track them

- Error prone and likely to cause issues with data consistency

This approach also requires CPU resources to scan the tables for the changed data and maintenance resources to ensure that the DATE_MODIFIED column is applied reliably across all source tables.

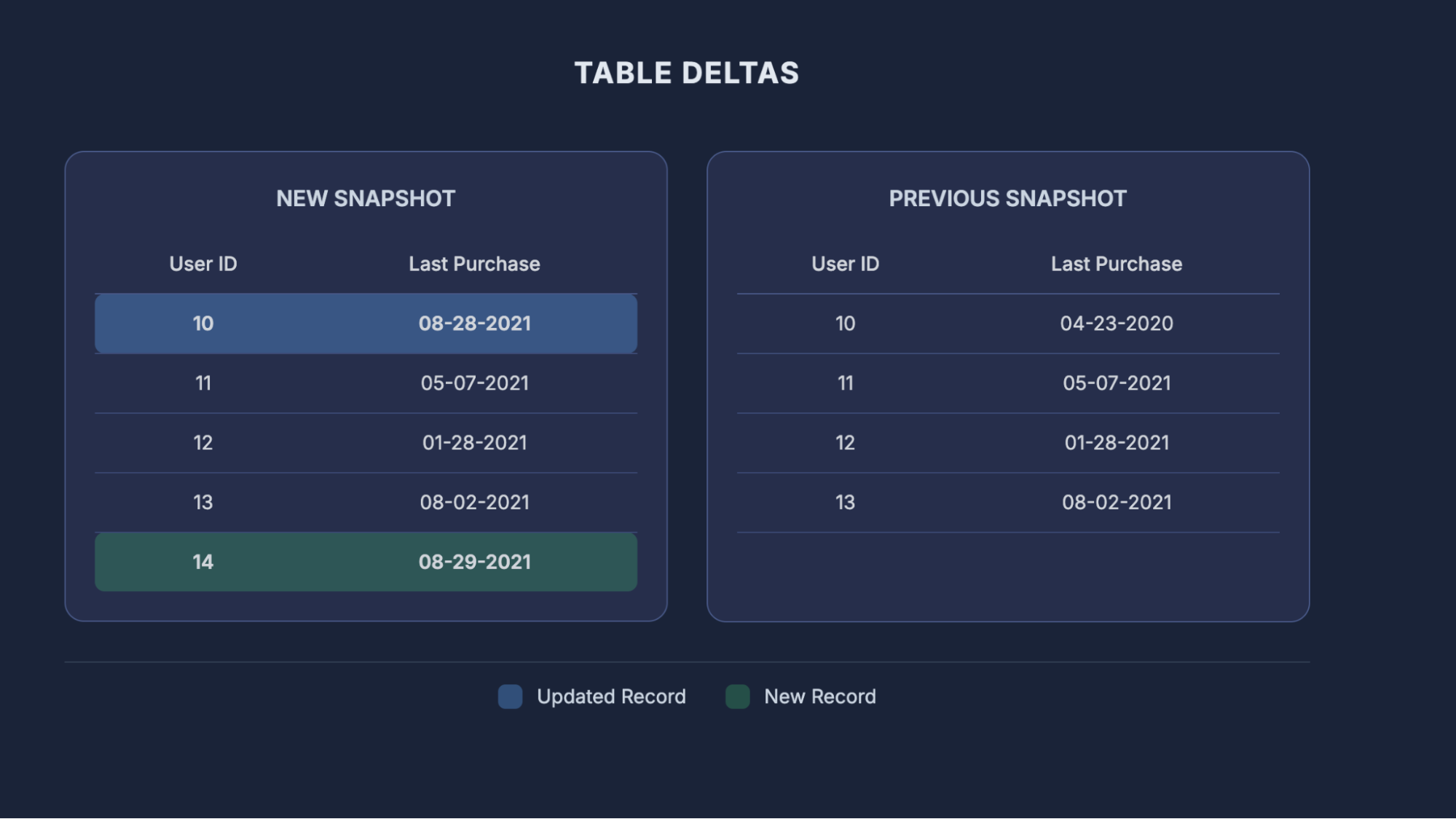

Table Deltas

You can use table delta or ‘tablediff’ utilities to compare the data in two tables for non-convergence. Then you can use additional scripts to apply the deltas from the source table to the target as another approach to change data capture. There are several examples of SQL scripts that can find the difference between two tables.
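As a rough illustration, the function below diffs two keyed snapshots held in memory. The dict-of-rows representation and the `table_delta` name are assumptions; real table-diff utilities run comparable logic in SQL or in a dedicated tool.

```python
def table_delta(previous: dict, current: dict) -> dict[str, list]:
    """Diff two keyed snapshots (primary key -> row) into inserts, updates, and deletes."""
    inserts = [current[k] for k in sorted(current.keys() - previous.keys())]
    deletes = [previous[k] for k in sorted(previous.keys() - current.keys())]
    updates = [
        current[k]
        for k in sorted(current.keys() & previous.keys())
        if current[k] != previous[k]  # full-row comparison on every shared key
    ]
    return {"insert": inserts, "update": updates, "delete": deletes}


previous_snapshot = {1: {"id": 1, "qty": 5}, 2: {"id": 2, "qty": 3}, 3: {"id": 3, "qty": 1}}
current_snapshot = {1: {"id": 1, "qty": 5}, 2: {"id": 2, "qty": 4}, 4: {"id": 4, "qty": 9}}
print(table_delta(previous_snapshot, current_snapshot))
# {'insert': [{'id': 4, 'qty': 9}], 'update': [{'id': 2, 'qty': 4}], 'delete': [{'id': 3, 'qty': 1}]}
```

Because every key in both snapshots is examined, the work grows with table size rather than with the number of changes, and deletes fall out naturally as keys present only in the previous snapshot.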

Advantages of this approach:

- It provides an accurate view of changed data while only using native SQL scripts

Disadvantages of this approach:

- Storage demand increases significantly because the technique needs three copies of the source data: the original data, the previous snapshot, and the current snapshot

- It does not scale well in applications with heavy transactional workloads

Although this works better for managing deleted rows, the CPU resources required to identify the differences are significant and the overhead increases linearly with the volume of data. The diff method also introduces latency and cannot be performed in real time.

Some log-based change data capture tools can also compare source and target tables to verify replication consistency.

Query-Based CDC

Also known as polling-based CDC, this method involves repeatedly querying a source table to detect new or modified rows. This is typically done by looking at a timestamp or version number column that indicates when a row was last updated.

While simple to set up, this method is highly inefficient. It puts a constant, repetitive load on the source database, and because each poll sees only a row’s current state, any intermediate updates made between polls are lost. More importantly, it can’t capture DELETE operations, as the deleted row is no longer there to be queried. For these reasons, query-based CDC is rarely used for production-grade, real-time pipelines.
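Both weaknesses are easy to reproduce. This sketch, which assumes a hypothetical `orders` table with an application-maintained `version` column, polls once, lets one order change twice and another be deleted, then polls again.

```python
import sqlite3


def poll_changes(db: sqlite3.Connection, last_version: int) -> tuple[list, int]:
    """Return rows whose version is newer than the last poll, plus the new high-water mark."""
    rows = db.execute(
        "SELECT id, status, version FROM orders WHERE version > ? ORDER BY version",
        (last_version,),
    ).fetchall()
    return rows, (rows[-1][2] if rows else last_version)


db = sqlite3.connect(":memory:")
# Assumes the application bumps a global 'version' counter on every write.
db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, version INTEGER)")
db.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(1, "new", 1), (2, "new", 2)])
first, last = poll_changes(db, 0)

# Between polls: order 1 moves new -> paid -> shipped, and order 2 is deleted.
db.execute("UPDATE orders SET status = 'paid', version = 3 WHERE id = 1")
db.execute("UPDATE orders SET status = 'shipped', version = 4 WHERE id = 1")
db.execute("DELETE FROM orders WHERE id = 2")
second, last = poll_changes(db, last)

print(first)   # [(1, 'new', 1), (2, 'new', 2)]
print(second)  # [(1, 'shipped', 4)]: the 'paid' state and the delete are never seen
```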

Trigger-Based CDC

This method uses database triggers—specialized procedures that automatically execute in response to an event—to capture changes. For each table being tracked, INSERT, UPDATE, and DELETE triggers are created. When a change occurs, the trigger fires and writes the change event into a separate “history” or “changelog” table. The CDC process then reads from this changelog table.
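Here is a minimal trigger-based setup in SQLite, run from Python's `sqlite3` module. The `products` table, the `products_changelog` table, and the single-letter operation codes are illustrative choices; trigger syntax varies by database.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL);

-- Changelog ("history") table written by the triggers below.
CREATE TABLE products_changelog (
    seq   INTEGER PRIMARY KEY AUTOINCREMENT,
    op    TEXT NOT NULL,
    id    INTEGER NOT NULL,
    name  TEXT,
    price REAL
);

CREATE TRIGGER products_ai AFTER INSERT ON products BEGIN
    INSERT INTO products_changelog (op, id, name, price) VALUES ('I', NEW.id, NEW.name, NEW.price);
END;

CREATE TRIGGER products_au AFTER UPDATE ON products BEGIN
    INSERT INTO products_changelog (op, id, name, price) VALUES ('U', NEW.id, NEW.name, NEW.price);
END;

CREATE TRIGGER products_ad AFTER DELETE ON products BEGIN
    INSERT INTO products_changelog (op, id, name, price) VALUES ('D', OLD.id, OLD.name, OLD.price);
END;
""")


def read_changelog(db: sqlite3.Connection, after_seq: int) -> list:
    """The CDC process reads the changelog table, not the base table."""
    return db.execute(
        "SELECT seq, op, id, name, price FROM products_changelog WHERE seq > ? ORDER BY seq",
        (after_seq,),
    ).fetchall()


db.execute("INSERT INTO products VALUES (1, 'Widget', 9.5)")
db.execute("UPDATE products SET price = 8.0 WHERE id = 1")
db.execute("DELETE FROM products WHERE id = 1")
for entry in read_changelog(db, after_seq=0):
    print(entry)
```

Each trigger runs inside the same transaction as the application's write, which is where the overhead described next comes from.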

The main drawback here is performance overhead. Triggers add computational load directly to the database with every transaction, which can slow down source applications. Triggers can also be complex to manage, especially when dealing with schema changes, and can create tight coupling between the application and the data capture logic. This makes them difficult to scale and maintain in high-volume environments.

Log-Based CDC

Log-based CDC is widely considered the gold standard for modern data integration. This technique reads changes directly from the database’s native transaction log (e.g., the redo log in Oracle or the transaction log in SQL Server). Since every database transaction is written to this log to ensure durability and recovery, it serves as a complete, ordered, and reliable record of all changes.

The key advantage of log-based CDC is its non-intrusive nature. It puts almost no load on the source database because it doesn’t execute any queries against the production tables. Instead, it works by “tailing” the log, much as the database’s own replication does. This makes it highly efficient and scalable, capable of capturing high volumes of data with sub-second latency. This combination of reliability and low impact is why modern, enterprise-grade streaming platforms like Striim are built around a scalable, streaming-native, log-based CDC architecture.
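The following sketch simulates the idea with an in-memory list standing in for the transaction log. `LogRecord`, `LogTailer`, and `apply` are hypothetical names, and real log readers decode vendor-specific formats (such as Oracle redo or the SQL Server transaction log) rather than Python objects. What it shows: the reader never queries the table, it only reads records past its last checkpoint, and deletes arrive as ordinary log entries.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LogRecord:
    lsn: int             # log sequence number: the record's position in the log
    op: str              # "insert", "update", or "delete"
    key: int
    row: Optional[dict]  # None for deletes


@dataclass
class LogTailer:
    """Reads a simulated transaction log starting after a checkpointed position."""
    log: list[LogRecord]
    checkpoint: int = 0  # highest LSN already delivered downstream

    def poll(self) -> list[LogRecord]:
        # Records are appended in LSN order, so everything past the checkpoint is new.
        new = [r for r in self.log if r.lsn > self.checkpoint]
        if new:
            self.checkpoint = new[-1].lsn
        return new


def apply(target: dict, records: list[LogRecord]) -> None:
    """Replay log records, in order, onto a keyed target."""
    for r in records:
        if r.op == "delete":
            target.pop(r.key, None)
        else:
            target[r.key] = r.row


log = [LogRecord(1, "insert", 1, {"name": "Ada"}), LogRecord(2, "insert", 2, {"name": "Bob"})]
tailer, target = LogTailer(log), {}
apply(target, tailer.poll())
# New transactions are appended to the log by the database, not by the CDC reader.
log.append(LogRecord(3, "update", 1, {"name": "Ada L."}))
log.append(LogRecord(4, "delete", 2, None))
apply(target, tailer.poll())
print(target, tailer.checkpoint)  # {1: {'name': 'Ada L.'}} 4
```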

Now let’s move from theory to execution. How can organizations leverage CDC to power better outcomes?

Key Use Cases for Change Data Capture

Change Data Capture can power a wide range of business-critical initiatives, from real-time analytics to application-level intelligence, and is a key enabler of cloud modernization. By delivering a continuous stream of fresh data, CDC opens up new opportunities for speed and insight.

Real-Time Analytics and Reporting

Stale data leads to bad decisions. CDC solves this by feeding dashboards, operational KPIs, and business intelligence (BI) tools with the most up-to-date information possible. This allows teams in finance, marketing, and a variety of other functions to monitor performance, spot trends, and react to issues in real time, rather than waiting for overnight batch reports. Striim accelerates this with low-latency, high-throughput delivery to leading analytics platforms like Snowflake and BigQuery.

Cloud Migration and Hybrid Sync

CDC is essential for zero-downtime cloud migrations. It allows organizations to replicate data from on-premises databases to cloud targets without interrupting live applications. After the initial load, CDC keeps both on-prem and cloud-based systems in sync. This enables a seamless cutover once the systems are fully validated. Striim’s hybrid-ready architecture is built for these scenarios, ensuring reliable, real-time data flow between any combination of on-prem, cloud, and multi-cloud environments.
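The initial load and the ongoing sync meet at a log position. A common pattern is to note the position when the snapshot is taken, bulk-copy the snapshot, then replay every change logged after that position so writes made during the copy are not lost. The sketch below assumes in-memory dicts for both systems and hypothetical `initial_load` and `catch_up` helpers.

```python
from typing import Optional

# Hypothetical change-log entry: (lsn, op, key, row); row is None for deletes.
LogEntry = tuple[int, str, int, Optional[str]]


def initial_load(source_snapshot: dict, snapshot_lsn: int) -> tuple[dict, int]:
    """Bulk-copy a consistent snapshot and remember the log position it corresponds to."""
    return dict(source_snapshot), snapshot_lsn


def catch_up(target: dict, log: list[LogEntry], position: int) -> int:
    """Replay every change logged after `position` and return the new position."""
    for lsn, op, key, row in log:
        if lsn <= position:
            continue  # already reflected in the snapshot or an earlier catch-up
        if op == "delete":
            target.pop(key, None)
        else:
            target[key] = row
        position = lsn
    return position


# Snapshot taken at LSN 2; three more writes land while the bulk copy is running.
target, position = initial_load({1: "Ada", 2: "Bob"}, snapshot_lsn=2)
change_log: list[LogEntry] = [
    (1, "insert", 1, "Ada"),
    (2, "insert", 2, "Bob"),
    (3, "update", 1, "Ada L."),
    (4, "insert", 3, "Cy"),
    (5, "delete", 2, None),
]
position = catch_up(target, change_log, position)
print(target, position)  # {1: 'Ada L.', 3: 'Cy'} 5
```

Cutover is safe once the target matches the source and the remaining replay lag is close to zero.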

Data Lakehouse Hydration

Modern data lakehouses on platforms like Databricks and Snowflake thrive on fresh, operational data. CDC is the most efficient way to “hydrate” these platforms, that is, to update them continuously by streaming transactional data from source systems. Combined with the lakehouse’s separation of storage and compute, this lets organizations leverage the cost and scalability benefits of the cloud while ensuring their analytics and data science initiatives are built on a foundation of timely, accurate data.
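Lakehouse tables are commonly updated in small batches with a MERGE-style operation. One reasonable approach, sketched here with plain dicts standing in for a table, is to compact each batch to the last change per key before merging. The `compact` and `merge` helpers are hypothetical; real platforms expose their own MERGE statements.

```python
def compact(events: list[dict]) -> list[dict]:
    """Keep only the last change per key; later events overwrite earlier ones."""
    latest: dict = {}
    for e in events:
        latest[e["key"]] = e
    return list(latest.values())


def merge(table: dict, events: list[dict]) -> dict:
    """MERGE-style apply: upsert inserts and updates, remove deletes."""
    for e in compact(events):
        if e["op"] == "delete":
            table.pop(e["key"], None)
        else:
            table[e["key"]] = e["row"]
    return table


table = {1: {"qty": 1}, 2: {"qty": 2}}
batch = [
    {"key": 1, "op": "update", "row": {"qty": 5}},
    {"key": 3, "op": "insert", "row": {"qty": 7}},
    {"key": 1, "op": "update", "row": {"qty": 6}},
    {"key": 2, "op": "delete", "row": None},
    {"key": 3, "op": "delete", "row": None},  # inserted and deleted within one batch
]
print(len(compact(batch)), merge(table, batch))  # 3 {1: {'qty': 6}}
```

Compaction means the target performs one write per key per batch instead of one per event, which matters when a hot row changes many times between merges.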

Operational Intelligence and Real-Time AI

Low-latency data from CDC can power intelligent applications that trigger automated actions. For example, a real-time stream of customer transaction data can be fed into an AI/ML fraud detection model, allowing it to block a suspicious transaction before it’s completed. This goes beyond traditional BI, using streaming data to drive immediate business outcomes. With its built-in capabilities for in-flight data processing and enrichment, Striim is designed to power these sophisticated streaming analytics and AI-driven workloads.

Benefits of Using CDC

Adopting Change Data Capture isn’t just a technical optimization—it delivers significant business value. By fundamentally changing how data flows through the enterprise, CDC brings tangible benefits in timeliness, efficiency, reliability, and scale.

Timeliness: Fresh Data, Faster Decisions

By making data useful the instant it’s born, CDC eliminates the costly delay between a business event and the ability to act on it. This is critical in industries like finance (for real-time fraud detection), retail (for live inventory management), and logistics (for dynamic supply chain adjustments). With fresh data, businesses can shift from reactive analysis to proactive decision-making.

Efficiency: Reduced Load on Source Systems

Unlike full batch extracts that repeatedly query entire tables and consume massive database resources, CDC is far more efficient. Log-based CDC, in particular, has a minimal impact on source systems because it reads the transaction log, which the database already writes for durability and recovery, instead of querying production tables. This frees up valuable system resources and ensures that data-intensive analytics don’t slow down critical operational workloads.

Reliability: Reduced Risk of Data Loss or Mismatch

Because CDC captures every single change event in order, it ensures transactional integrity and consistency between source and target systems. Advanced platforms build on this with features like exactly-once processing guarantees and automated error handling, which prevent data loss or duplication. This level of reliability is essential for financial reporting, compliance, and other use cases where data accuracy is non-negotiable.
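One common way to achieve an exactly-once effect is to combine at-least-once delivery with an idempotent target that records the last position it applied. This is a generic technique, sketched with a hypothetical `IdempotentSink` class; it is not a description of how any specific product implements its guarantees. The sketch assumes events arrive in log order.

```python
from typing import Optional


class IdempotentSink:
    """Applies each change at most once by tracking the last applied log position."""

    def __init__(self) -> None:
        self.rows: dict = {}
        # In a real target, persist this in the same transaction as the row changes.
        self.applied_lsn = 0

    def apply(self, lsn: int, op: str, key: int, row: Optional[dict] = None) -> bool:
        """Apply one in-order event; return False if it was a duplicate and skipped."""
        if lsn <= self.applied_lsn:
            return False  # redelivered after a restart: already applied
        if op == "delete":
            self.rows.pop(key, None)
        else:
            self.rows[key] = row
        self.applied_lsn = lsn
        return True


sink = IdempotentSink()
sink.apply(10, "insert", 1, {"balance": 100})
sink.apply(11, "update", 1, {"balance": 80})
# After a restart, the pipeline redelivers from an older checkpoint:
sink.apply(10, "insert", 1, {"balance": 100})  # skipped
sink.apply(11, "update", 1, {"balance": 80})   # skipped
print(sink.rows)  # {1: {'balance': 80}}
```

Without the position check, the replayed event at LSN 10 would overwrite the newer balance with a stale one.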

Scalability: Built for Modern Architectures

Modern data ecosystems are defined by high volume and distribution. CDC is inherently built to handle this scale. Its event-driven nature aligns perfectly with microservices and other distributed architectures, allowing organizations to build robust, high-throughput data pipelines that can grow with the business without becoming brittle or slow.

The benefits of CDC in the modern enterprise context are clear. So, how can you pull off a successful CDC strategy?

Best Practices for CDC Success

Adopting CDC is the first step, but building resilient, scalable, and governable real-time pipelines requires a strategic approach. Teams often face challenges with brittle pipelines, limited observability, and unexpected schema changes. By following best practices, you can avoid these pitfalls and build a data architecture designed for scale.

Design for Change

Source schemas are not static. A new column is added, a data type is changed, or a table is dropped—any of these can break a brittle pipeline. Instead of fearing schema drift, plan for it. Use tools that can automatically detect schema changes at the source and propagate them to the target, or use a schema registry to manage different versions. Platforms like Striim can detect and handle schema drift gracefully, preventing pipeline failures and ensuring data integrity without manual intervention.
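For additive drift (a new column), a target can evolve itself when an event carries a column it has not seen. The sketch below does this against SQLite with Python's `sqlite3` module; the `users` table and the `apply_with_schema_evolution` helper are hypothetical, and type changes or dropped columns would need more policy than shown here.

```python
import sqlite3


def apply_with_schema_evolution(db: sqlite3.Connection, table: str, row: dict) -> None:
    """Upsert a row, first adding any columns the target table has not seen yet."""
    # PRAGMA table_info returns one tuple per column; index 1 is the column name.
    existing = {info[1] for info in db.execute(f"PRAGMA table_info({table})")}
    for column in sorted(row.keys() - existing):
        # Additive drift only. Identifiers are interpolated, so they must come from
        # trusted schema metadata, never from row values.
        db.execute(f"ALTER TABLE {table} ADD COLUMN {column}")
    columns = ", ".join(row)
    placeholders = ", ".join("?" for _ in row)
    db.execute(
        f"INSERT OR REPLACE INTO {table} ({columns}) VALUES ({placeholders})",
        tuple(row.values()),
    )


db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT)")
apply_with_schema_evolution(db, "users", {"id": 1, "email": "a@example.com"})
# The source added a 'country' column; the next event carries it.
apply_with_schema_evolution(db, "users", {"id": 2, "email": "b@example.com", "country": "DE"})
print([info[1] for info in db.execute("PRAGMA table_info(users)")])  # ['id', 'email', 'country']
```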

Build for Scale

As your business grows, so will your data volume. Design your pipelines with an event-driven, microservices-aligned architecture in mind. This means building loosely coupled services that can be scaled independently. Use a durable buffer like Kafka to decouple your source from your targets, allowing you to stream data to multiple destinations without putting additional strain on the source system.

Embrace the “Read Once, Stream Anywhere” Pattern

This powerful pattern, central to Striim’s architecture, is key to building efficient and reusable pipelines. Instead of creating a separate, point-to-point pipeline for every new use case (which adds load to your source database), you capture the change stream once. That single, unified stream can then be processed, filtered, and delivered to any number of targets simultaneously—from data warehouses and lakehouses to real-time applications and monitoring systems. This reduces source system impact, simplifies management, and eliminates redundant data pipelines.
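A minimal sketch of the pattern: changes are appended once to a shared buffer, and each consumer tracks its own read offset. The in-memory `ChangeStream` class is a hypothetical stand-in for a durable log such as a Kafka topic; it is not Striim's internal design.

```python
class ChangeStream:
    """A change stream captured once from the source and shared by many consumers."""

    def __init__(self) -> None:
        self._events: list = []           # a durable, replicated log in a real system
        self._offsets: dict[str, int] = {}  # consumer name -> index of next event to read

    def append(self, event: dict) -> None:
        self._events.append(event)

    def subscribe(self, consumer: str) -> None:
        self._offsets.setdefault(consumer, 0)

    def read(self, consumer: str, max_events: int = 100) -> list:
        """Return the next batch for one consumer without affecting the others."""
        start = self._offsets[consumer]
        batch = self._events[start:start + max_events]
        self._offsets[consumer] = start + len(batch)
        return batch


stream = ChangeStream()
stream.subscribe("warehouse")
stream.subscribe("fraud_service")
for i in range(3):
    stream.append({"key": i})  # captured once from the source
print(stream.read("warehouse"))                    # all three events
print(stream.read("fraud_service", max_events=1))  # [{'key': 0}]; this consumer is slower
```

Adding a new target means adding a subscriber with its own offset; the source database sees no extra queries.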

Monitor and Validate Continuously

You can’t manage what you can’t see. End-to-end observability is crucial for maintaining trust in your data pipelines. Implement continuous monitoring to track latency, throughput, and system health. Go beyond simple monitoring with automated data validation and anomaly detection to ensure the data flowing through your pipelines is accurate and complete. Striim offers built-in dashboards and real-time alerts to provide this critical observability out of the box.
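As an illustration of continuous validation, the sketch below compares a cheap fingerprint (row count plus a hash) first and only drills down to per-key differences when the fingerprints disagree; it also includes a simple latency alert. The helpers, the dict-of-dicts row format, and the 5-second threshold are assumptions. In practice each side would compute its fingerprint locally so full rows never cross the network.

```python
import hashlib


def fingerprint(rows: dict) -> tuple[int, str]:
    """Row count plus a SHA-256 hash over rows sorted by key (column order ignored)."""
    digest = hashlib.sha256()
    for key in sorted(rows):
        digest.update(repr((key, sorted(rows[key].items()))).encode())
    return len(rows), digest.hexdigest()


def validate(source: dict, target: dict) -> list:
    """Return keys that are missing, extra, or different on the target."""
    if fingerprint(source) == fingerprint(target):
        return []  # cheap check passed; no need to compare row by row
    return sorted(k for k in source.keys() | target.keys() if source.get(k) != target.get(k))


def lag_alert(commit_ts: float, applied_ts: float, threshold_s: float = 5.0) -> bool:
    """Flag an event whose source-commit-to-target-apply latency exceeds the threshold."""
    return applied_ts - commit_ts > threshold_s


source = {1: {"qty": 5}, 2: {"qty": 3}}
target = {1: {"qty": 5}, 2: {"qty": 4}, 3: {"qty": 1}}
print(validate(source, target))                      # [2, 3]
print(lag_alert(commit_ts=100.0, applied_ts=107.5))  # True
```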

Ensure Governance and Data Quality

Real-time data pipelines must adhere to your organization’s broader data governance policies. This includes implementing access controls, maintaining detailed audit trails, and encrypting data both in-transit and at-rest. Integrate data quality checks directly into your pipelines to identify and handle issues before they impact downstream systems. Enterprise-ready platforms should support these governance requirements, helping you maintain security and compliance across your data infrastructure.
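Data quality checks can sit directly in the stream. In this sketch, each check is a small function that returns an error message or `None`, and failing rows are routed to a dead-letter list instead of the target. The check names, field names, and dead-letter format are hypothetical.

```python
from typing import Callable, Iterable, Optional

# A check inspects one row and returns an error message, or None if the row passes.
Check = Callable[[dict], Optional[str]]


def not_null(field: str) -> Check:
    def check(row: dict) -> Optional[str]:
        return f"{field} is null" if row.get(field) is None else None
    return check


def non_negative(field: str) -> Check:
    def check(row: dict) -> Optional[str]:
        value = row.get(field)
        return f"{field} is negative" if value is not None and value < 0 else None
    return check


def route(rows: Iterable[dict], checks: list[Check]) -> tuple[list, list]:
    """Split rows into (valid, dead_letter) before anything reaches the target."""
    valid, dead_letter = [], []
    for row in rows:
        errors = [msg for check in checks if (msg := check(row)) is not None]
        if errors:
            dead_letter.append({"row": row, "errors": errors})
        else:
            valid.append(row)
    return valid, dead_letter


checks = [not_null("customer_id"), non_negative("amount")]
valid, dead_letter = route(
    [{"customer_id": 1, "amount": 10}, {"customer_id": None, "amount": -5}],
    checks,
)
print(valid)        # [{'customer_id': 1, 'amount': 10}]
print(dead_letter)  # [{'row': {'customer_id': None, 'amount': -5}, 'errors': ['customer_id is null', 'amount is negative']}]
```

Keeping rejected rows, along with the reasons they failed, gives operators an audit trail and a way to replay them once the underlying issue is fixed.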

Choosing the Right CDC Tool

With so many options available, it can be hard to navigate the CDC vendor landscape. Tools range from open-source frameworks to managed cloud services, each with its own strengths. The key is to look beyond basic replication and evaluate solutions based on the criteria that matter for enterprise workloads: latency, reliability, scalability, and ease of use.

The market generally falls into a few categories:

- Open-Source Frameworks: Tools like Debezium are powerful and flexible but require significant development effort to implement and manage.

- ELT Tools with CDC Add-ons: Many traditional ELT vendors have added CDC features, but they are often batch-oriented and struggle with true, real-time, high-volume streaming.

- Cloud-Native Migration Tools: Services like AWS DMS are excellent for their specific purpose (migrating data into their cloud) but lack the flexibility, processing capabilities, and observability needed for broader enterprise use.

- Unified Streaming Platforms: A unified platform like Striim combines log-based CDC, in-stream processing, and real-time delivery into a single, cohesive solution designed for mission-critical workloads.

Striim vs. Debezium

Debezium is a popular open-source CDC project that provides connectors for various databases. While it’s a great choice for development teams that need a flexible, code-centric tool, it lacks the enterprise-grade features required for production deployments. Operating Debezium at scale requires building and managing a complex ecosystem of other tools (like Kafka Connect), and Debezium itself lacks a user interface, built-in monitoring, governance features, or dedicated support. Striim, in contrast, provides a fully integrated, enterprise-ready platform with an intuitive UI, end-to-end observability, and robust governance, dramatically lowering the total cost of ownership and accelerating time to market.

Striim vs. Fivetran

Fivetran is a leader in the ELT space, excelling at simplifying batch data movement into cloud data warehouses for BI. However, its CDC capabilities are geared toward “mini-batch” updates, not true real-time streaming. This approach introduces latency that isn’t well-suited for operational use cases like real-time fraud detection or inventory management. Striim is a streaming-native platform built for high-volume, low-latency scenarios. Its ability to perform complex transformations and enrich data in-flight—before it lands in the target—is a key differentiator, enabling a much broader range of real-time applications beyond just BI.

Striim vs. AWS DMS

AWS Database Migration Service (DMS) is a valuable tool for its core purpose: migrating databases into the AWS cloud. While it uses CDC, it is primarily a replication tool, not a full-fledged streaming data platform. It has limited capabilities for in-stream data processing and lacks the comprehensive observability, data validation, and governance features needed to manage enterprise-wide data pipelines. Striim offers a more powerful and flexible solution that supports hybrid and multi-cloud environments, providing a single platform for data integration and streaming analytics with full pipeline visibility.

Why Leading Enterprises Choose Striim for Change Data Capture

Modern business runs in real time, and basic CDC is no longer enough. Leading enterprises need a unified platform that seamlessly combines real-time data capture with in-stream processing and reliable, at-scale delivery. Striim is the only platform that provides this end-to-end functionality in a single, enterprise-grade solution.

With Striim, organizations get:

- Enterprise-Grade Capabilities: Features like built-in data validation, high availability, and end-to-end observability are not add-ons; they are core to the platform, ensuring your mission-critical pipelines are resilient and trustworthy.

- A True Hybrid and Multi-Cloud Solution: Striim is designed to operate anywhere your data lives. With broad support for on-premises environments and all major cloud platforms, Striim gives you the flexibility to build and manage data pipelines across any environment without being locked into a single vendor.

- More than just CDC: Striim goes beyond simple replication, allowing you to transform, enrich, and analyze data in-flight. This means you can power not just real-time analytics, but also sophisticated, AI-driven applications from the same data stream.

Don’t settle for stale data. See how the world’s leading enterprises are using Striim to power their business with real-time insights.