Following on from Part 1 of this two-part blog series on the evolution from traditional batch ETL to real-time streaming ETL, I would like to discuss how Striim, patented streaming data integration software, supports this shift by offering fast-to-deploy, real-time streaming ETL solutions for on-premises and cloud environments.

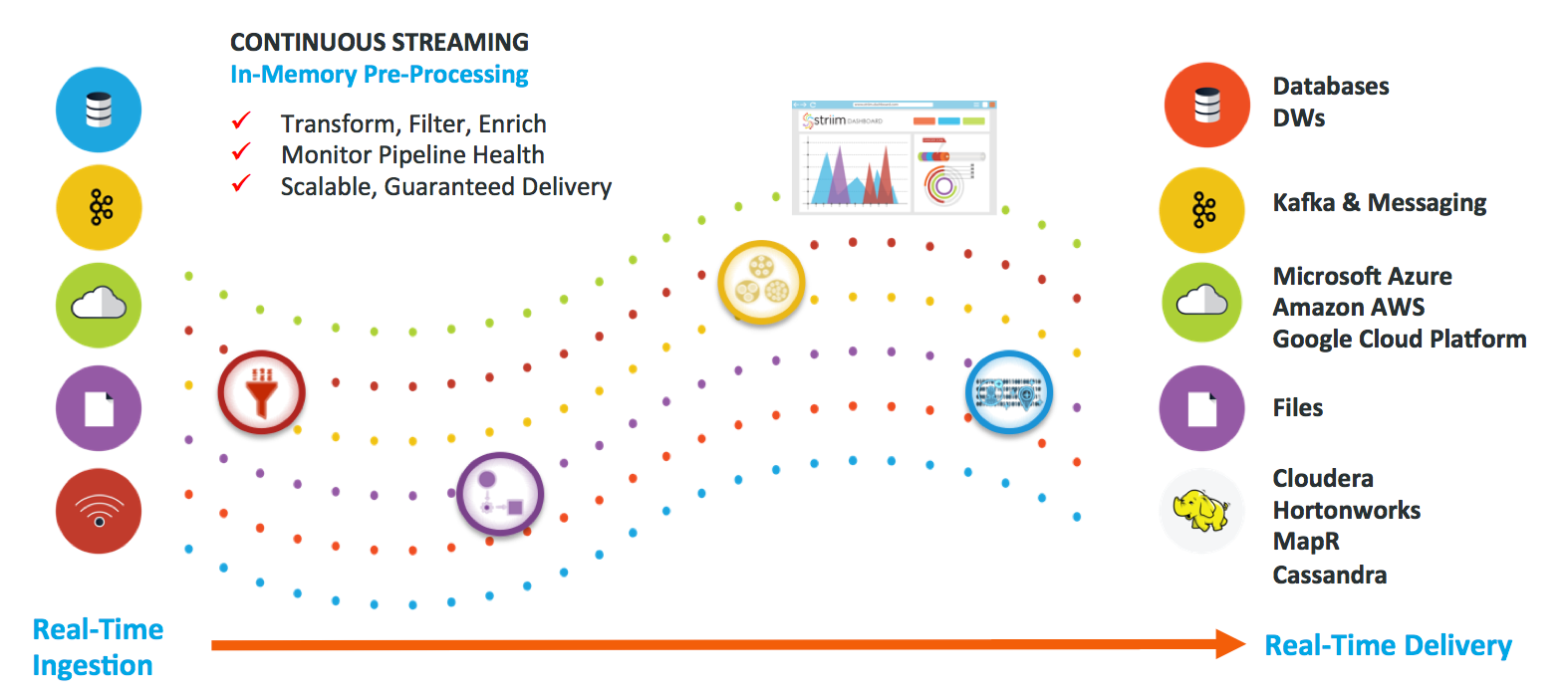

Striim provides the following capabilities:

- Real-time, low-impact data ingestion from relational databases using change data capture (CDC), and from non-relational sources such as log files, IoT sensors, Hadoop, MongoDB and Kafka

- In-memory, SQL-based in-line transformations, such as denormalization and enrichment, applied before the data is delivered in a consumable format with sub-second latency

- Real-time delivery to relational databases, Kafka and other messaging solutions, Hadoop, NoSQL stores and files, on-premises or in the cloud

- Continuous monitoring of data pipelines and delivery validation for zero data loss

- Enterprise-grade solutions with built-in exactly-once processing, security, high availability and scalability using a patented distributed architecture

- Fast time to market with a wizard-based, drag-and-drop UI
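
To make the in-line transformation step more concrete, here is a minimal Python sketch of enriching change events in memory before delivery. It is a generic illustration rather than Striim's API or its SQL-based language. The event shape, the `CUSTOMERS` lookup table and the `enrich` function are all hypothetical names made up for this example.

```python
from typing import Dict, Iterable, Iterator

# Hypothetical in-memory reference data (for example, a cached customer table).
CUSTOMERS: Dict[int, Dict[str, str]] = {
    1: {"name": "Acme", "region": "EMEA"},
    2: {"name": "Globex", "region": "APAC"},
}


def enrich(events: Iterable[dict], lookup: Dict[int, Dict[str, str]]) -> Iterator[dict]:
    """Denormalize each change event by joining it with cached reference data."""
    for event in events:
        # Unknown customers yield None fields instead of dropping the event.
        customer = lookup.get(event["customer_id"], {})
        yield {
            **event,
            "customer_name": customer.get("name"),
            "customer_region": customer.get("region"),
        }


# Hypothetical change events, shaped as a CDC reader might emit them.
change_events = [
    {"op": "INSERT", "order_id": 100, "customer_id": 1, "amount": 25.0},
    {"op": "UPDATE", "order_id": 101, "customer_id": 2, "amount": 40.0},
    {"op": "INSERT", "order_id": 102, "customer_id": 3, "amount": 12.5},
]

enriched = list(enrich(change_events, CUSTOMERS))
```

Because `enrich` is a generator, each event is processed as it arrives rather than in a batch, which is the property that keeps latency low in a streaming pipeline.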

As a result of this shift to real-time streaming ETL, businesses can now offload their high-value operational workloads to the cloud, because they can easily stream their on-premises and cloud-based data to cloud-based analytics solutions in real time and in the right format. They can also rapidly build real-time analytics applications on Kafka, NoSQL or Kudu that use real-time data from a wide range of sources. These applications help them capture perishable, time-sensitive insights that improve customer experience, manage risks and SLAs effectively, and improve operational efficiency across their organizations.

From an architecture perspective, streaming ETL with distributed stream processing reduces complexity not only in ensuring high availability but also in maintaining consistent data states in the event of a process failure. Because Striim manages data flows end-to-end and in memory, after an outage it can ensure that data processing and delivery are consistent across all flows, so the target systems have no missing or out-of-sync data.
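
One common way to get this kind of consistency after a failure is checkpoint-and-replay combined with idempotent writes. The sketch below shows that general pattern in Python. It is not a description of Striim's internal recovery mechanism, and the `Target` and `Pipeline` classes are hypothetical. It assumes the checkpoint survives the crash and that the source can be re-read from a given offset.

```python
from typing import Dict, List, Optional


class Target:
    """Hypothetical target that applies writes idempotently, keyed by source offset."""

    def __init__(self) -> None:
        self.rows: Dict[int, str] = {}

    def write(self, offset: int, value: str) -> None:
        # Re-applying the same offset overwrites the row instead of duplicating it.
        self.rows[offset] = value


class Pipeline:
    def __init__(self, source: List[str], target: Target) -> None:
        self.source = source
        self.target = target
        self.checkpoint = 0  # next offset to process; assumed to be stored durably

    def run(self, crash_after_write_at: Optional[int] = None) -> None:
        # Resume from the last checkpoint, not from the beginning.
        for offset in range(self.checkpoint, len(self.source)):
            self.target.write(offset, self.source[offset].upper())
            if offset == crash_after_write_at:
                # Simulate a failure after the write but before the checkpoint advances.
                raise RuntimeError("simulated crash before checkpoint")
            self.checkpoint = offset + 1


source = ["a", "b", "c", "d"]
target = Target()
pipeline = Pipeline(source, target)

try:
    pipeline.run(crash_after_write_at=1)
except RuntimeError:
    pass  # the process "restarts" below

pipeline.run()  # replays offset 1, then continues with the remaining events
```

After the restart, the event at offset 1 is delivered a second time, but because the write is keyed by offset the target ends up with each event exactly once and nothing missing.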

Change is the only constant in our lives and in the technologies we work with. The evolution from traditional ETL to streaming ETL helps digital businesses meet their customers’ needs effectively and gain a competitive edge. I invite you to have a conversation with us about using streaming ETL in your organization by scheduling a technical demo with one of our experts. You can also download Striim for free to get first-hand experience of its broad range of capabilities.