Data strategy transcends all use cases. Are you tasked with enhancing customer experience or ensuring SLAs? Do you monitor infrastructure, equipment, or replication between databases? Do you need to detect fraud or security issues in real time? Or does your business generate extreme volumes of IoT data?

There is a general approach for all of these use cases which Steve Wilkes, Striim Founder and CTO, covered in his recent Strata+Hadoop World presentation.

https://youtu.be/c8JsXX909_o

In general, you need current, accurate and complete data in order to make sound decisions. If there is a single takeaway from Steve’s talk, he’d like people to “Think Streaming First!” because if you go batch, you can’t go back. Don’t boil the ocean and try to cover everything in one go. And please reconsider dumping all your data into a data lake, because that’s a recipe for failure.

If you plan ahead and do it methodically – taking one stream at a time no matter what use case you are trying to solve – you will be able to reap the benefits of streaming data. Here are the five steps Steve recommends to make your data strategy work through streaming.

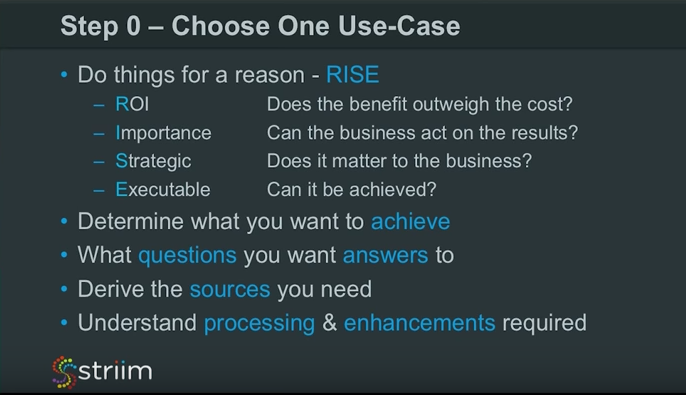

Step 0 – One Use-Case

Every use case needs a business reason. First, determine whether the benefits to the business outweigh the cost of implementation. Next, ask whether the business is in a position to actually act on the results once they are generated. Then decide whether the use case is strategically important for growing and improving the business. Lastly, determine whether it’s even achievable or just a pie-in-the-sky dream. Making it all work smoothly takes these considerations, some basic rules, and the right software.

Before you begin, take time to determine what you want to achieve from your data strategy, and what questions you want answered with data. Ask yourself what form the answers to those questions are going to take. From there, you should be able to work out which sources the answers can come from, and how you are going to get that data. Finally, you can consider how you will process, manipulate, enhance, and enrich the data in order to give you the information you need to fulfill your use case.

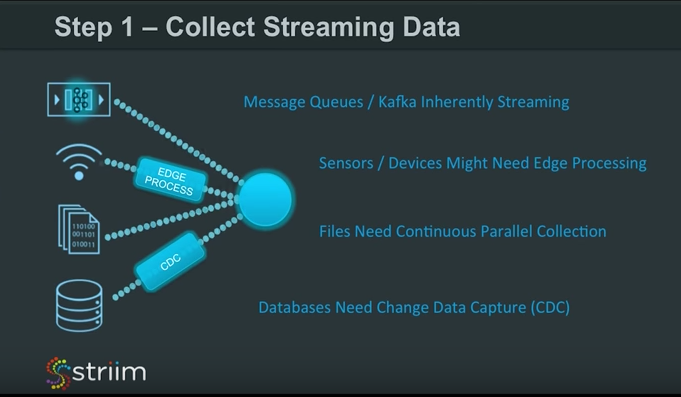

Step 1 – Collect Streaming Data

Once you finalize the business side from step 0 and get the technology set up, it’s time to think about streaming data. You can’t think about streaming data after the fact. You can’t do batch processes, then stream data. You can, however, stream data and then do batches.

Data can be collected from various sources: message queues, sensors, log files, Change Data Capture (CDC), etc. Sensors can generate more data than you can possibly process and store in a central location, so you must do edge processing in this instance. Log files are often shipped in batches, and if you wait hours for them, the data is already outdated. Instead, stream the log data in real time and in parallel across all of your machines.

Lastly, databases aren’t inherently streaming, and you generally can’t run heavy SQL queries to get data out of production databases. DBAs won’t allow you to run massive table scans, so you have to use Change Data Capture. CDC is approved and recommended by most DBAs as a way of getting enterprise data out of production databases. They use it all the time, behind the scenes, for creating database replicas or delivering data into an operational data store (ODS). The same is true for getting data from your databases into a Hadoop, Kafka or Cloud environment: CDC is the way to do it.
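To make the CDC idea concrete, here is a minimal sketch of what consuming a stream of change events can look like. The event format (`op`, `key`, `row`) and the `apply_changes` function are hypothetical and chosen only for illustration; real CDC tools each define their own formats, and this is not how any particular product works.

```python
from typing import Iterable


def apply_changes(replica: dict, events: Iterable[dict]) -> dict:
    """Apply insert/update/delete change events to a replica keyed by primary key.

    The event shape used here is an assumption made for this example.
    """
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Merge the changed columns into whatever we already hold for this key.
            replica[key] = {**replica.get(key, {}), **event["row"]}
        elif op == "delete":
            replica.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return replica


events = [
    {"op": "insert", "key": 1, "row": {"name": "Ada", "city": "London"}},
    {"op": "insert", "key": 2, "row": {"name": "Grace", "city": "New York"}},
    {"op": "update", "key": 1, "row": {"city": "Paris"}},
    {"op": "delete", "key": 2},
]

replica = apply_changes({}, events)
print(replica)
```

The point of the sketch is that the target stays current by replaying small change events, without ever scanning the source tables.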

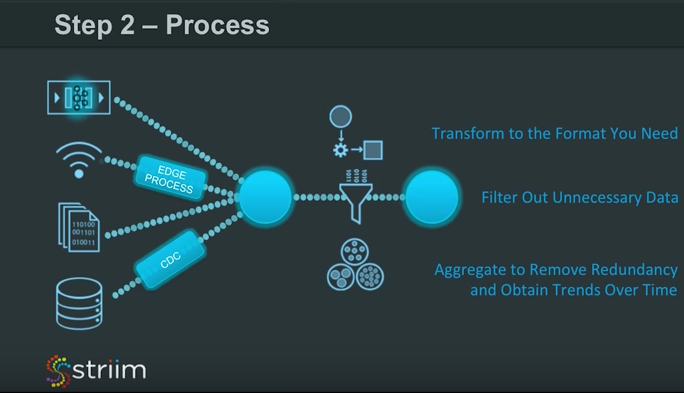

Step 2 – Process

Once your data is streaming, how do you shape it into the right form in order to answer the questions you had from step 0?

The first thing you can do is filter out any unnecessary data. You can then manipulate what remains and transform it into a format that is easily queryable. The right format will depend on your use case, where you are landing the data, and what your queries need.
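As a rough illustration of filtering and transforming in flight, the sketch below drops low-severity and malformed log lines and turns the rest into JSON records. The log layout (`timestamp level message`), the severity names, and the `filter_and_transform` function are all assumptions made for this example.

```python
import json
from typing import Iterable, Iterator

# Assumed severity ordering for the hypothetical log format.
LEVELS = {"DEBUG": 0, "INFO": 1, "WARN": 2, "ERROR": 3}


def filter_and_transform(lines: Iterable[str], min_level: str = "WARN") -> Iterator[str]:
    """Keep lines at or above min_level and emit them as JSON strings."""
    threshold = LEVELS[min_level]
    for line in lines:
        parts = line.strip().split(" ", 2)
        if len(parts) < 3 or parts[1] not in LEVELS:
            continue  # skip malformed lines
        timestamp, level, message = parts
        if LEVELS[level] < threshold:
            continue  # filter out unnecessary data
        yield json.dumps({"ts": timestamp, "level": level, "message": message})


raw = [
    "2017-03-15T10:00:00Z INFO service started",
    "2017-03-15T10:00:01Z ERROR disk full on /var",
    "garbage",
    "2017-03-15T10:00:02Z WARN replication lag 12s",
]

records = list(filter_and_transform(raw))
for record in records:
    print(record)
```

Because the function is a generator, it handles one line at a time and works just as well on an unbounded stream as on this short list.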

You may need to aggregate data or remove redundancy. This is where viewing trends over time is valuable. IoT devices can send you lots of similar data all the time. An example is a Nest thermostat sending the same temperature every second for an hour. That’s 3,600 data points carrying only one piece of information. You don’t need all that data, which is where edge processing can help.
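The thermostat example can be sketched in a few lines. This is one simple way to remove redundancy, assuming readings arrive as `(timestamp, value)` pairs; the `changes_only` name is made up for illustration, and a real deployment might also want periodic heartbeats so that silence is not mistaken for a dead device.

```python
def changes_only(readings):
    """Yield a (timestamp, value) pair only when the value differs from the last one emitted."""
    last = object()  # sentinel that never equals a real reading
    for timestamp, value in readings:
        if value != last:
            yield timestamp, value
            last = value


# One reading per second for an hour, all the same temperature...
readings = [(second, 21.5) for second in range(3600)]
# ...followed by a single change.
readings.append((3600, 22.0))

compressed = list(changes_only(readings))
print(len(readings), "readings reduced to", len(compressed))
```

Run at the edge, a filter like this forwards only the two readings that carry information instead of all 3,601.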

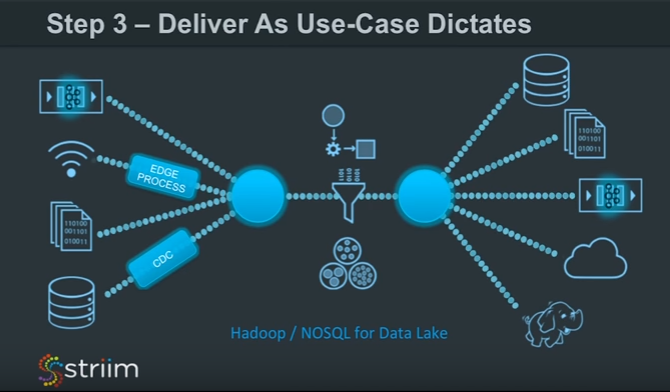

Step 3 – Deliver As Use-Case Dictates

Next comes delivering the data, which really depends on your use case. You may need to deliver into a database or file system. Many people move data onto Kafka and use it as an enterprise data bus. Maybe you are delivering into the Cloud and need elastic storage and scalability. Or maybe you are delivering into a data lake.

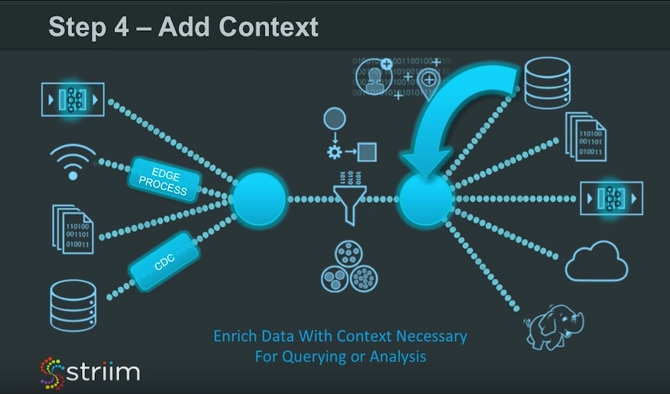

Step 4 – Add Context

Oftentimes, data that’s processed and delivered in step 3 doesn’t contain enough context to make decisions. This is where it is important to enrich the data to make it more queryable.

An example of this is a normalized database whose tables you are trying to query. Imagine using Change Data Capture and putting all of those changes into Hadoop. What you’re going to get is a lot of IDs and foreign keys. If those land in your data lake, there’s not much value in querying IDs, and you can’t go back and ask important questions. You would have to write enormous queries to get answers.

Instead, as your data is moving through your data streams, you enrich it with external data to give it the context that you need. That’s the only way you can start answering some of these questions you started with.
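A minimal sketch of in-stream enrichment, assuming the reference data fits in memory as lookup dictionaries. The table contents, field names, and the `enrich` function are hypothetical and only illustrate the idea of replacing bare IDs with context as events pass through.

```python
def enrich(order_events, customers, products):
    """Attach customer and product context to each order event as it streams by."""
    for event in order_events:
        customer = customers.get(event["customer_id"], {})
        product = products.get(event["product_id"], {})
        yield {
            **event,
            "customer_name": customer.get("name"),
            "customer_region": customer.get("region"),
            "product_name": product.get("name"),
        }


# Hypothetical reference data that would normally come from lookup tables or a cache.
customers = {42: {"name": "Acme Corp", "region": "EMEA"}}
products = {7: {"name": "Widget"}}

# What a change event from a normalized table looks like: mostly IDs.
order_events = [{"order_id": 1001, "customer_id": 42, "product_id": 7, "quantity": 3}]

enriched = list(enrich(order_events, customers, products))
print(enriched[0])
```

Once the record lands with names and regions attached, a question such as "orders by region" no longer needs a chain of joins.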

It’s really important to think about streaming as part of your data strategy. If you start with batch, you will not be able to move to real-time queries later.

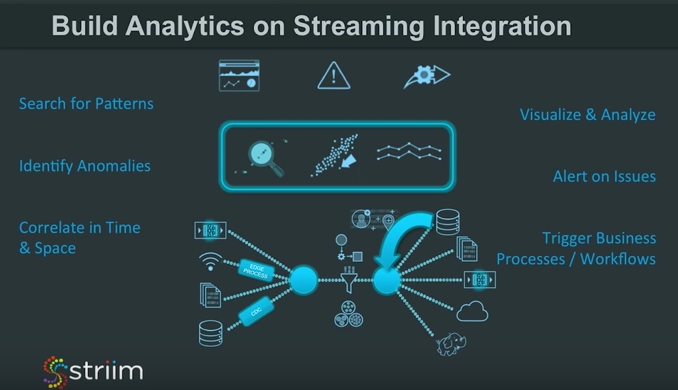

Lastly, it’s recommended to build analytics into the process. You can search for patterns, find anomalies, correlate time and space patterns, alert on issues, visualize and analyze, and trigger business workflows.
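As one simple illustration of finding anomalies in a stream, the sketch below flags any value that sits far from the average of the previous few values. The window size, the threshold, and the `detect_anomalies` name are arbitrary choices for this example, not a recommended configuration.

```python
from collections import deque
from statistics import mean, pstdev


def detect_anomalies(values, window=5, threshold=3.0):
    """Yield (index, value) for values more than `threshold` standard deviations
    away from the mean of the preceding `window` values."""
    recent = deque(maxlen=window)
    for index, value in enumerate(values):
        if len(recent) == window:
            mu, sigma = mean(recent), pstdev(recent)
            if sigma > 0 and abs(value - mu) > threshold * sigma:
                yield index, value
        recent.append(value)


# Hypothetical response times in milliseconds with one obvious spike.
latencies = [100, 102, 98, 101, 99, 100, 250, 101, 99]

alerts = list(detect_anomalies(latencies))
print(alerts)
```

In a real pipeline the yielded alerts would feed a dashboard or trigger a workflow rather than being printed.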

For more information, please visit the following resources:

Change Data Capture – capturing change in production databases for analysis and visualization

Replication Monitoring – providing visibility into replication lag and in-flight transactions

SLA Monitoring – proactively act before infringements occur

Security & Risk – leveraging streaming data to prevent fraud and minimize exposure

IoT Monitoring – real-time analysis of sensor data in context

You may also wish to take a look at the Striim Overview data sheet, request a demo with one of our lead technologists, or download the Striim platform.