Stream data from Salesforce and Oracle to Azure Synapse

Benefits

Analyze real-time operational and transactional data from Salesforce and Oracle in Synapse.

Leverage Synapse for instant scalability and to accelerate analytics and reporting.

Use real-time data streaming to build real-time analytical and ML models with Synapse.

The advent of the digital age created the need for robust, faster analytics, an essential part of every organization’s growth and success. Analysts and business executives rely on real-time data to derive insights that inform their business decisions.

Most existing data pipelines in the industry are ETL-based and get bottlenecked by data transformation operations such as aggregations, joins, and other compute-heavy activities, given the volume of data being generated and consumed. Data transformation is usually time-consuming and complex, and that complexity has only grown as more streaming data sources have come into play.

Why Salesforce?

Among the many widely used data sources, Salesforce has become pivotal to business process management by uniting sales, service, marketing, and IT teams around a single shared view of customer information.

Why Striim with Salesforce?

Striim makes it easy to migrate data from Salesforce in minutes. After your data migration is complete, Striim can continuously sync Salesforce with a wide array of data sinks or targets through real-time data integration using change data capture.

This allows you to build real-time analytics and machine learning capabilities alongside operational systems with minimal impact on them.

Why is Azure Synapse Analytics considered a powerhouse among data warehouses?

Synapse sets itself apart with key features such as Azure Synapse Link, which provides an end-to-end view of your business by connecting separate Microsoft data stores and automatically moving data without time-consuming extract, transform, and load (ETL) processes. Synapse also integrates with Azure Databricks, Spark, T-SQL, and Power BI, all within the same analytics service.

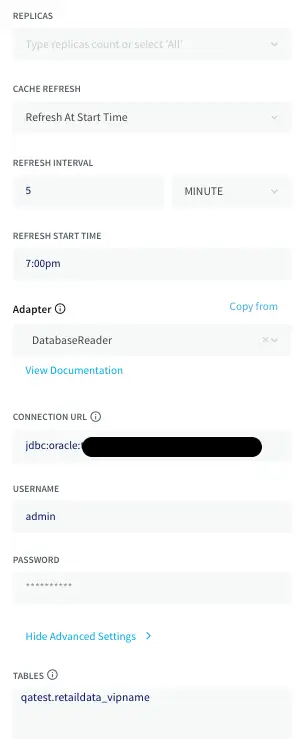

Optimizing pipelines through DB cache

In this recipe, we’ll explore how to set up Salesforce and Oracle as data sources and integrate them with Striim and Synapse. The tutorial shows how retail order data in Salesforce is combined with sensitive customer PII stored in Oracle and loaded into Synapse for analytics. In production use cases, some of the data sets used to generate analytics are often static and don’t require a constant refresh. Most of these can be categorized as daily or weekly refresh, depending on the characteristics of the data and how frequently it is generated. To optimize these kinds of pipelines, we use Striim’s DB cache, which loads static, non-real-time historical or reference data from Oracle into a cache. Queries then typically use this cached data to enrich real-time data. If the source is updated regularly, the cache can be set to refresh at an appropriate interval.

The Oracle DB cache can be replaced with Oracle CDC as a source if the data is more real-time in nature.
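To make the refresh-interval idea concrete, here is a minimal Python sketch of a keyed reference cache that reloads itself once it becomes stale. It is only an illustration of the concept, not Striim's implementation: the `RefreshingCache` class, its `loader` callback (standing in for a query against Oracle), and the injectable `clock` are all hypothetical names introduced for this example.

```python
import time
from typing import Callable, Dict, Optional


class RefreshingCache:
    """Holds a keyed snapshot of reference rows and reloads it on an interval.

    Hypothetical illustration only; `loader` stands in for the Oracle query.
    """

    def __init__(
        self,
        loader: Callable[[], Dict[str, dict]],
        refresh_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._loader = loader
        self._refresh_seconds = refresh_seconds
        self._clock = clock
        self._rows: Dict[str, dict] = {}
        self._loaded_at: Optional[float] = None

    def _refresh_if_stale(self) -> None:
        # Reload on first use, or once the refresh interval has elapsed.
        now = self._clock()
        if self._loaded_at is None or now - self._loaded_at >= self._refresh_seconds:
            self._rows = self._loader()
            self._loaded_at = now

    def get(self, key: str) -> Optional[dict]:
        """Return the cached row for `key`, or None if it is not present."""
        self._refresh_if_stale()
        return self._rows.get(key)
```

A daily-refresh data set would use something like `RefreshingCache(load_customers, refresh_seconds=24 * 60 * 60)`, where `load_customers` is whatever function fetches the reference rows.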

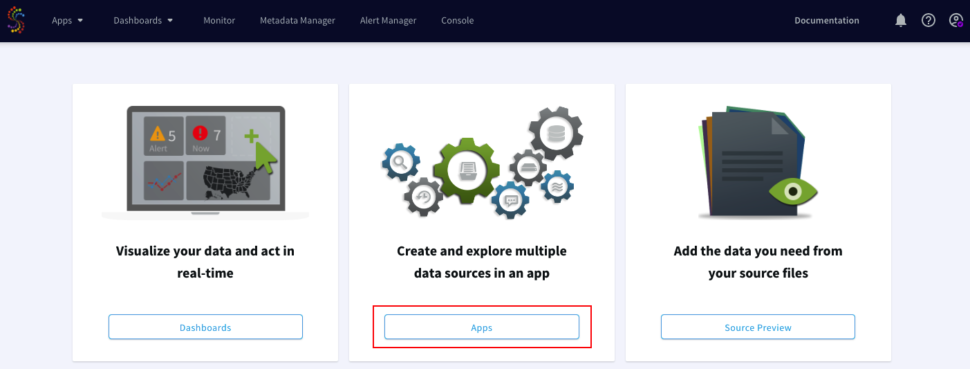

Feel free to sign up for a free trial of Striim here.

Step 1: Setting up Salesforce as a source

Log in to your Salesforce account and collect the following connection parameters, which are required by the Salesforce adapter in Striim.

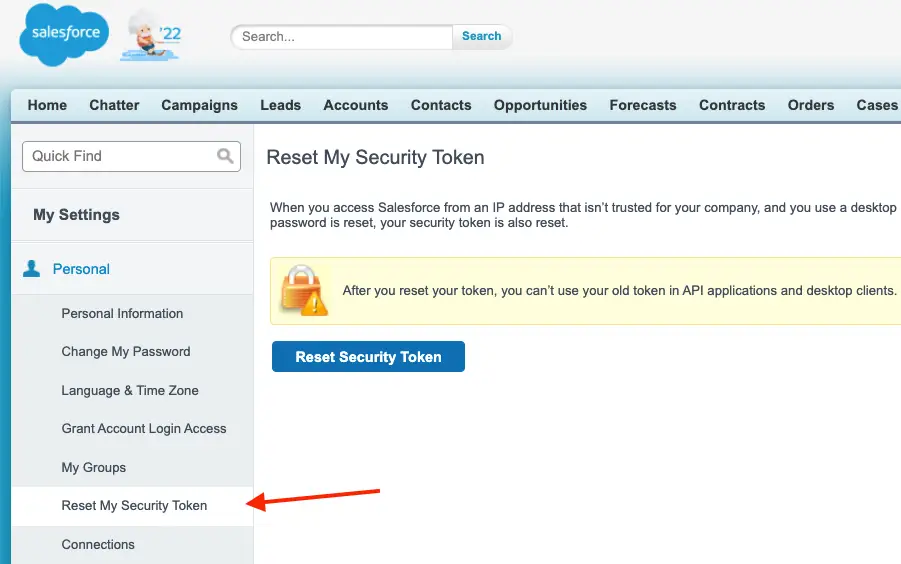

First, we need the security token for the Salesforce account, which is usually generated during account sign-up and sent by email. We can also reset the security token by navigating to the User menu → My Settings → Personal tab → Reset My Security Token.

The sample data used in the Salesforce connected app for this tutorial can be found in this GitHub repository.

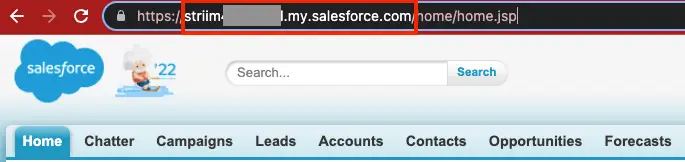

The easiest way to get the API endpoint is from the homepage after logging in to the Salesforce profile.

We can also verify the API endpoint (instance_url) from the output of the curl command that generates the authentication token. Send a request to the Salesforce OAuth endpoint using this cURL template:

curl https://MyDomainName.my.salesforce.com/services/oauth2/token -d 'grant_type=password' -d 'client_id=consumer-key' -d 'client_secret=consumer-secret' -d 'username=my-login@domain.com' -d 'password=my-password'

Replace MyDomainName with the domain for your Salesforce org. Replace consumer-key and consumer-secret with the consumer key and consumer secret of your connected app. Replace my-login@domain.com and my-password with the login credentials for your Salesforce org, appending the security token to the password. If the request was unsuccessful and you’re unable to log in, see Troubleshoot Login Issues in Salesforce Help.

If the request was successful, the response contains an access token that you can use to access your data in Salesforce.

For example:

{"access_token":"00D5e000001N20Q!ASAAQEDBeG8bOwPu8NWGsvFwWNfqHOp5ZcjMpFsU6yEMxTKdBuRXNzSZ8xGVyAiY8xoy1KYkaadzRlA2F5Zd3JXqLVitOdNS", "instance_url":"https://MyDomainName.my.salesforce.com", "id":"https://login.salesforce.com/id/00D5e000001N20QEAS/0055e000003E8ooAAC", "token_type":"Bearer", "issued_at":"1627237872637", "signature":"jmaZOgQyqUxFKAesVPsqVfAWxI62O+aH/mJhDrc8KvQ="}

For more information about authorizing apps with OAuth, refer to this Salesforce Help page or the REST API developer docs.
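The same token request and response handling can be sketched in Python. The snippet below only builds the form body (with the security token appended to the password, as described above) and extracts the instance URL and bearer header from a response like the example; it does not send anything over the network. The function names `build_token_request` and `parse_token_response` are hypothetical helpers for this illustration.

```python
import json
from urllib.parse import urlencode


def build_token_request(domain, consumer_key, consumer_secret,
                        username, password, security_token):
    """Return (url, form_body) for the OAuth password-grant request."""
    url = f"https://{domain}.my.salesforce.com/services/oauth2/token"
    body = urlencode({
        "grant_type": "password",
        "client_id": consumer_key,
        "client_secret": consumer_secret,
        "username": username,
        # The security token is appended directly to the password.
        "password": password + security_token,
    })
    return url, body


def parse_token_response(raw):
    """Pull out the API endpoint and a ready-to-use Authorization header."""
    payload = json.loads(raw)
    return {
        "instance_url": payload["instance_url"],
        "auth_header": f'{payload["token_type"]} {payload["access_token"]}',
    }
```

The `instance_url` value returned here is the API endpoint to enter in the Striim adapter in the next step.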

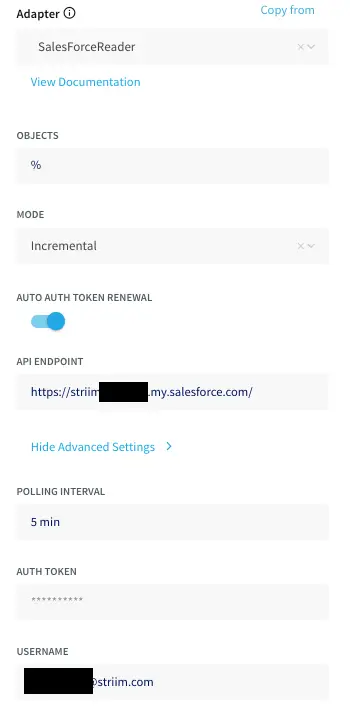

Step 2: Configure the Salesforce Adapter in Striim

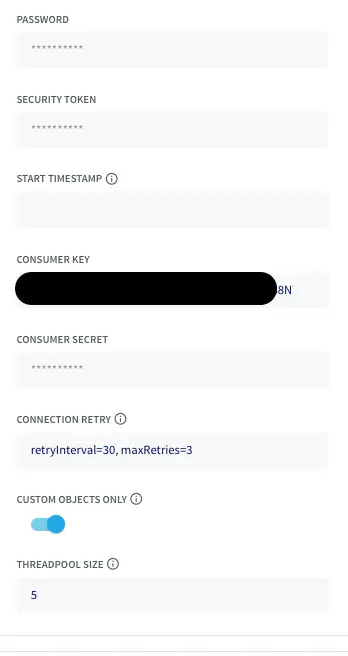

Enter the connection parameters obtained in Step 1. The consumer key and consumer secret are the client ID and client secret used in the curl command above to generate the auth token.

Note: Enable auto auth token renewal under the settings. If it is not enabled, the app will crash once the token expires, since the curl command would have to be rerun manually to generate a new auth token.

Step 3: Configure the query to pull Salesforce records

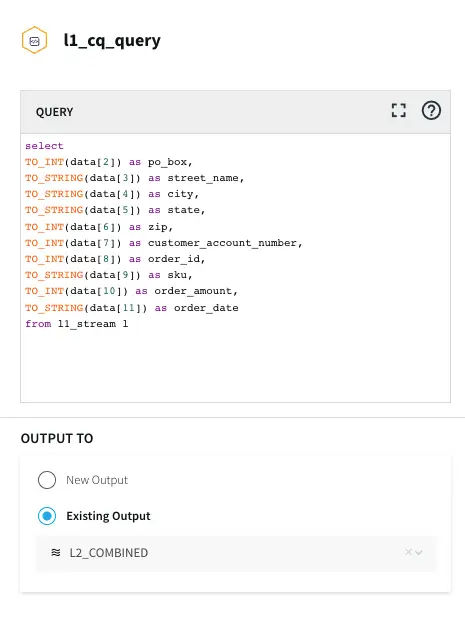

Next, we search for the Stream base component in the drop-down menu and configure the fields/columns for the next CQ processor.

Create a new type, for example l2_type, add the fields or column names from the Salesforce connected app, and configure the data type of each.

For more information on continuous queries, and on using multiple CQs for complex criteria, refer to the following documentation.
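As a rough analogy for what defining a type such as l2_type accomplishes, the Python sketch below projects a raw Salesforce record onto a fixed set of typed fields. The field names (`Id`, `Customer_Id__c`, `Amount__c`) are assumptions made up for this example; the real columns come from your connected app, and Striim types are configured in the UI rather than written this way.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class L2Type:
    # Hypothetical fields; substitute the columns from your connected app.
    order_id: str
    customer_id: str
    amount: float


def to_l2_type(record: dict) -> L2Type:
    """Keep only the configured fields and coerce each to its declared type."""
    return L2Type(
        order_id=str(record["Id"]),
        customer_id=str(record["Customer_Id__c"]),
        amount=float(record["Amount__c"]),
    )
```

Fields that are not part of the type are simply dropped, which keeps downstream components working against a stable schema.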

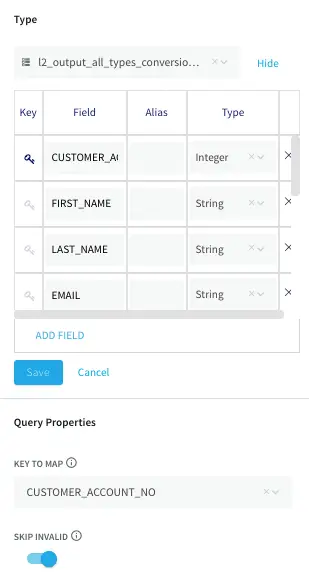

Step 4: Configure the Oracle Cache

Create a new type and add the respective fields and their data types.

The Oracle sample in this tutorial stores customers’ sensitive information and can be found in this GitHub repository.

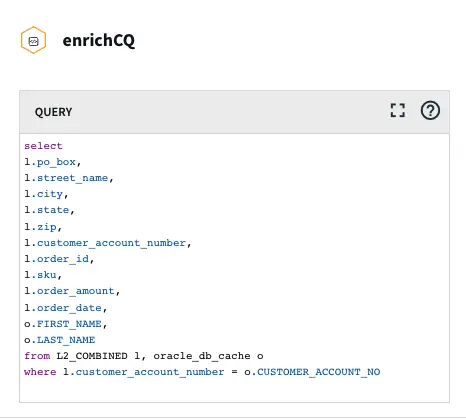

Step 5: Configure the Query to pull Salesforce and Oracle data in real-time

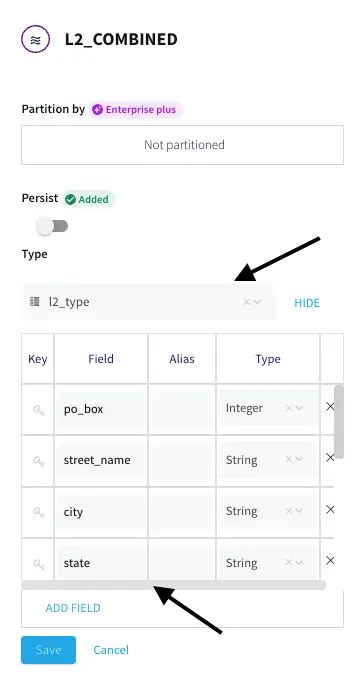

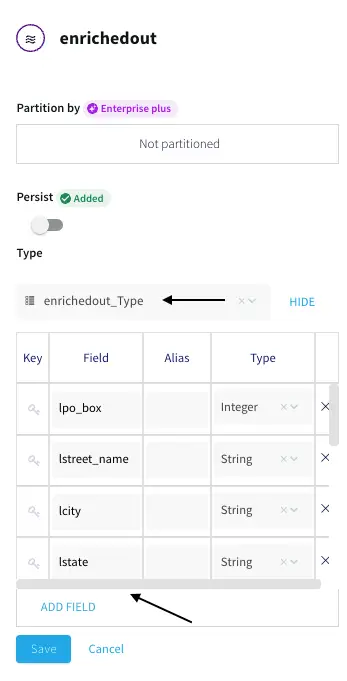

Create a new Stream from the base components, create a new type that contains the enriched fields from Oracle and Salesforce, and click Save.
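Conceptually, this enrichment step is a join between the incoming Salesforce order events and the cached Oracle rows. The Python sketch below illustrates that idea under stated assumptions: the key `customer_id` and the fields `name` and `email` are hypothetical, and dropping unmatched orders (inner-join behavior) is a choice made for this example rather than a statement about how the Striim query behaves.

```python
from typing import Dict, Iterable, Iterator


def enrich_orders(orders: Iterable[dict],
                  customers: Dict[str, dict]) -> Iterator[dict]:
    """Yield each order merged with its matching cached customer row."""
    for order in orders:
        customer = customers.get(order["customer_id"])
        if customer is None:
            # Assumed inner-join semantics: skip orders with no cached match.
            continue
        yield {
            **order,
            "customer_name": customer["name"],
            "email": customer["email"],
        }
```

The enriched type created in this step plays the role of the merged dictionary here: it lists the order fields plus the customer fields that should reach Synapse.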

Step 6: Configure the Synapse Target

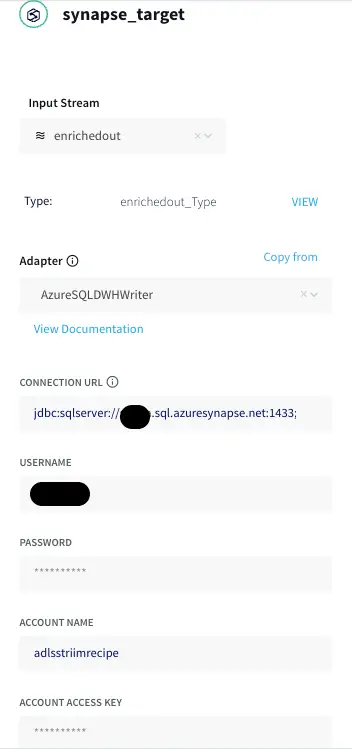

Search for the Synapse Target component and select the input stream of the above CQ component.

For Synapse, the connection URL needs to be in the following format:

jdbc:sqlserver://<synapse-workspace-name>.sql.azuresynapse.net:1433;database=<dedicated-pool-name>

Note: If you would like to use a serverless SQL pool, the host name should look like this:

<Azure Synapse workspace name>-ondemand.sql.azuresynapse.net
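The two host-name forms above differ only by the `-ondemand` suffix, which a small helper can capture. This is a sketch, with one assumption worth flagging: the source only gives the serverless host name, so reusing the same `jdbc:sqlserver://` prefix, port 1433, and `database=` property for the serverless case is assumed here. The function name `synapse_jdbc_url` is hypothetical.

```python
def synapse_jdbc_url(workspace: str, database: str, serverless: bool = False) -> str:
    """Build a Synapse JDBC URL for a dedicated or serverless SQL pool."""
    # Serverless endpoints append "-ondemand" to the workspace name.
    host_suffix = "-ondemand" if serverless else ""
    host = f"{workspace}{host_suffix}.sql.azuresynapse.net"
    # Assumption: the serverless URL keeps the same prefix, port, and property.
    return f"jdbc:sqlserver://{host}:1433;database={database}"
```

For example, `synapse_jdbc_url("myworkspace", "mypool")` produces the dedicated-pool form shown earlier with the placeholders filled in.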

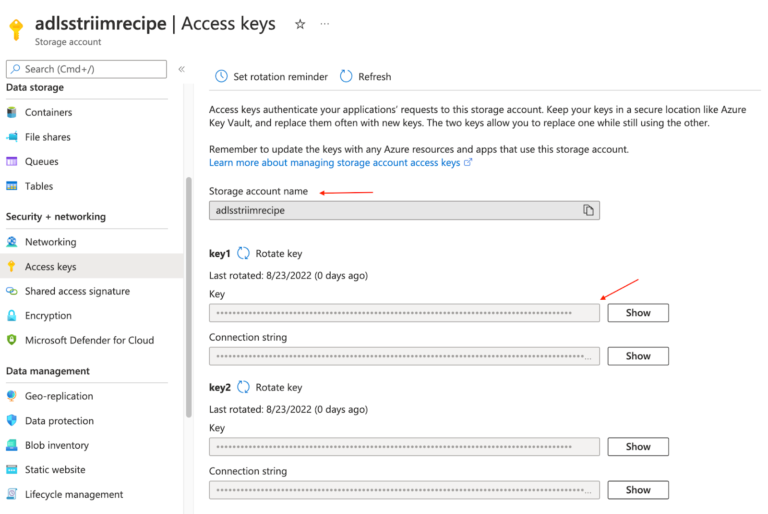

Enter the account name and account access key for the ADLS storage used by Synapse.

Make sure the table already exists in Synapse, then enter the target table name. The query to create the target table is available in the GitHub repo.

Set the Storage access driver type to ABFSS since the dedicated Synapse pool is using ADLS.
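For context, ABFSS is the TLS-secured driver scheme for ADLS Gen2, and locations under it follow the pattern `abfss://<container>@<account>.dfs.core.windows.net/<path>`. The helper below just assembles that URI to show how the account name entered above fits in; the function name and the sample container and path values are hypothetical.

```python
def abfss_uri(container: str, account: str, path: str = "") -> str:
    """Assemble an ADLS Gen2 URI for the ABFSS (secure) driver."""
    # Strip a leading slash so the result never contains "//" after the host.
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"
```

Calling `abfss_uri("staging", "mystorageacct", "/striim/orders")` gives `abfss://staging@mystorageacct.dfs.core.windows.net/striim/orders`.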

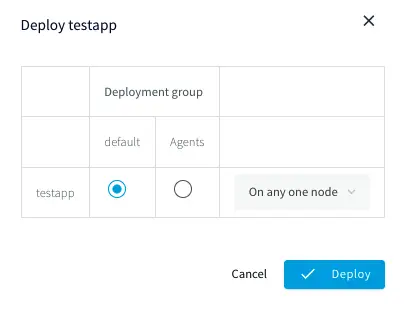

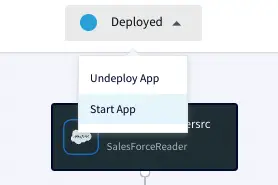

Once the app is configured, click Deploy app in the top menu and then select Start app.

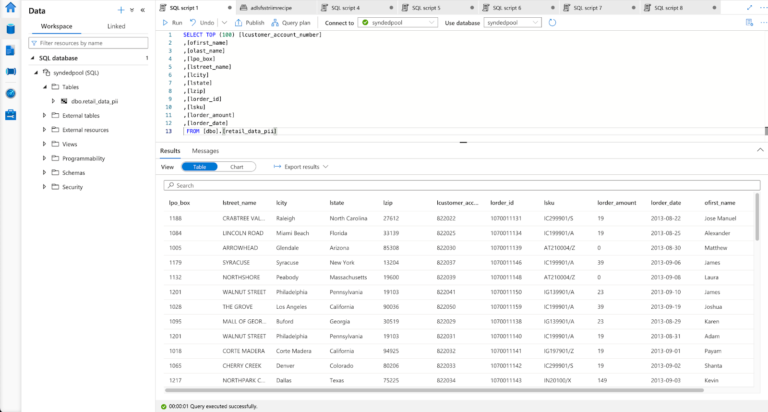

The data in Synapse can be queried using Synapse Studio or Azure Data Studio.

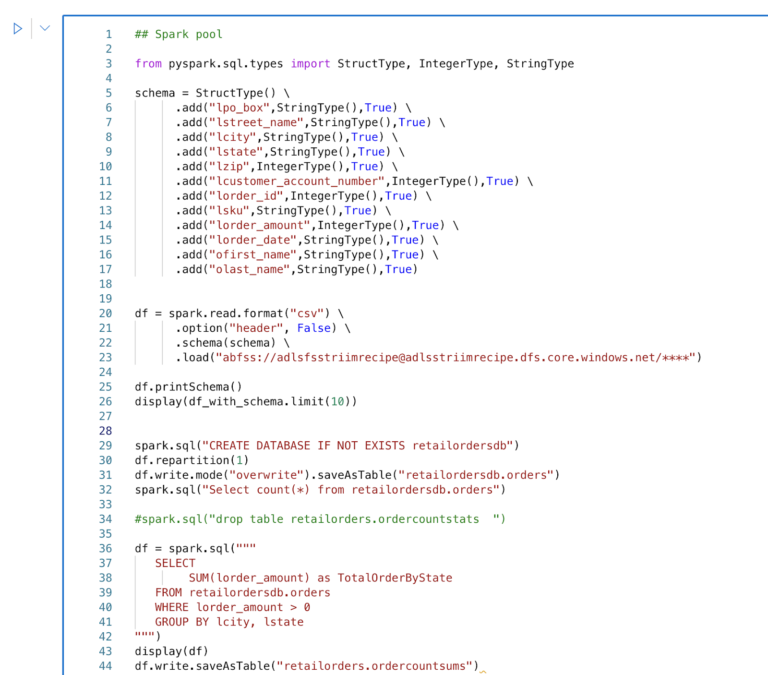

Step 7: (Optional) Perform Spark analysis through the built-in Synapse Spark pool

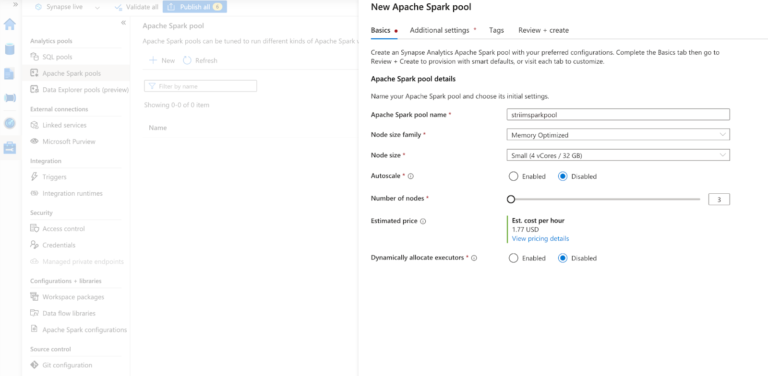

Create a new Spark pool with the configuration of your choice. For this tutorial, we can choose one with the minimum requirements.

Once the Spark pool is available, we can run Spark analytics on the data from within Synapse itself.

Wrapping up: Start your free trial

This tutorial showed how data from multiple sources such as Salesforce and Oracle can be combined in a Striim app and integrated with a Synapse data warehouse. By continuously moving real-time data into Synapse, we can build analytics or machine learning models with Spark inside Synapse, with minimal impact on current systems.

Striim’s pipelines are portable across multiple clouds and hundreds of endpoint connectors, including MongoDB and Azure Cosmos DB, and also support other data warehouses, including Google BigQuery, Snowflake, and Amazon Redshift.

As always, feel free to reach out to our integration experts to schedule a demo, or try Striim for free here.

Tools you need

Striim

Striim’s unified data integration and streaming platform connects clouds, data and applications.

Oracle

Oracle is a multi-model relational database management system.

Salesforce

Salesforce is a popular CRM tool for support, sales, and marketing teams worldwide.

Azure Synapse

Azure Synapse Analytics is a limitless analytics service that brings together data integration, enterprise data warehousing, and big data analytics.

Use cases

Integrating Striim’s CDC capabilities with Salesforce makes it easy to rapidly expand what you can do with CRM data in just a few clicks.

Striim’s additional components not only capture real-time data but also apply transformations on the fly before the data lands in the staging zone, reducing the amount of data cleansing required.

Striim’s wide array of event transformers makes handling any type of sensitive data as seamless as possible, helping users maintain compliance at various levels.

Load high-quality data into Synapse, where it can be transformed with Spark code and integrated with Power BI or Tableau for visualizations.