In this post, we look at change data capture (CDC) as a solution to the cache consistency problem. As a visual aid, the brief video demo included below walks you through how to push changes from a database to a cache in real time.

Imagine that you have an application that stores and retrieves information in a database. To get faster response times, you might use an in-memory cache for rapid access to data. However, if other applications also update the database, the cache no longer matches it. This is the cache consistency problem: the application now shows out-of-date or invalid information.
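The problem can be reproduced in a few lines. The sketch below is purely illustrative: plain Python dicts stand in for the database and the cache, and `read_through` is a hypothetical helper, not part of Hazelcast or Striim.

```python
# Hypothetical stand-ins: plain dicts play the database and the cache.
database = {"order:1": "PENDING"}
cache = {}

def read_through(key):
    """Return the cached value, loading it from the database on a miss."""
    if key not in cache:
        cache[key] = database[key]
    return cache[key]

first_read = read_through("order:1")   # cache miss: loads "PENDING"

# A second application updates the database directly, bypassing the cache.
database["order:1"] = "SHIPPED"

second_read = read_through("order:1")  # cache hit: still the old value
is_stale = second_read != database["order:1"]
print(first_read, second_read, is_stale)
```

Nothing tells the cache that the row changed, so every later read keeps returning the old value until something pushes the change into the cache.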

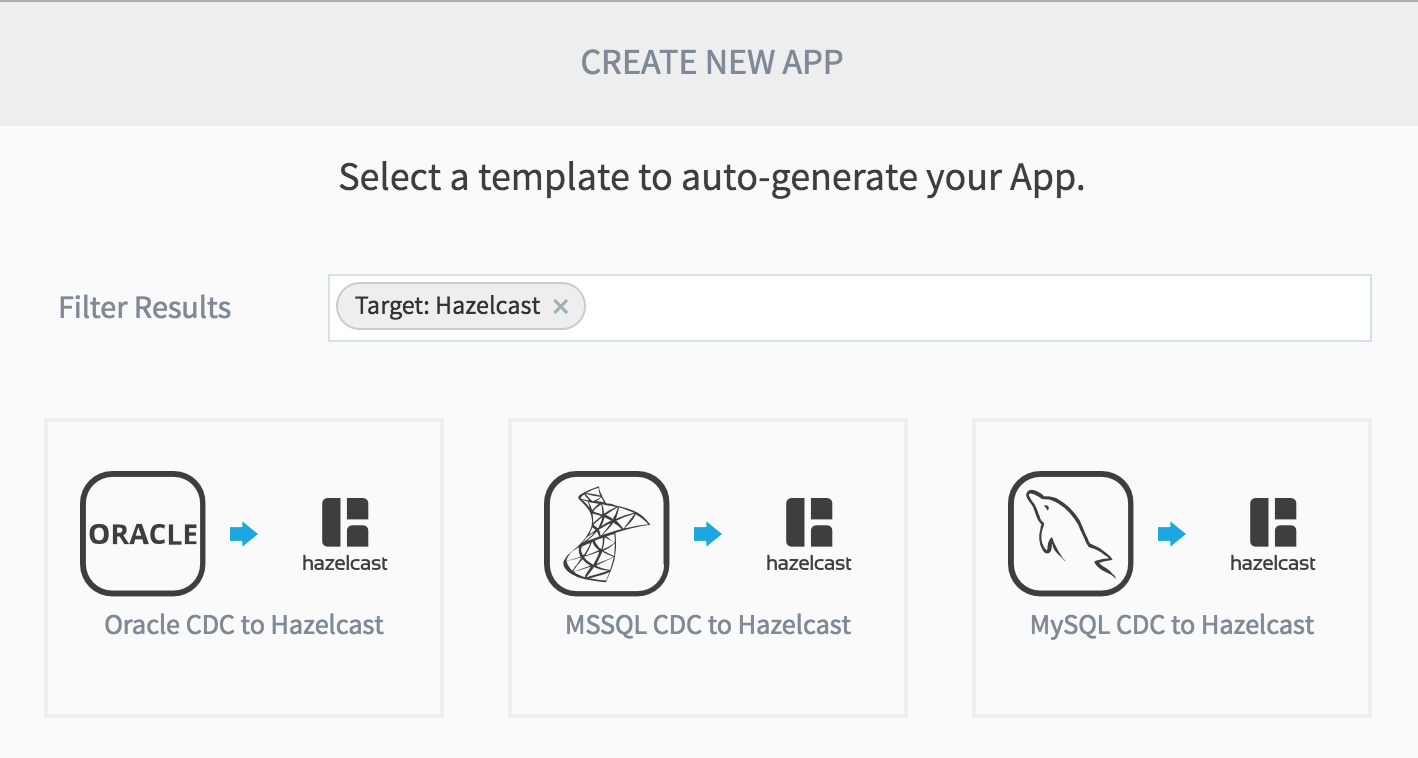

Hazelcast Striim Hot Cache

Hazelcast Striim Hot Cache solves cache consistency issues by using streaming change data capture to synchronize the cache with the database in real time, ensuring that the cache and the associated application always have the correct data. In the demo video, we have a MySQL database, a Hazelcast cache, and a Striim server. We use test programs to create, modify, and dump data in the MySQL database, and a test program that can dump the contents of the Hazelcast cache.

We start by creating a table using the test code and loading it with data. We then use Striim to load the data from the database into the Hazelcast cache. Next, we create a CDC flow from the database and use it to deliver live changes into the cache. Finally, we run continuous modifications against the database, which are replicated to the cache.

After some time, we dump the database and the cache to files and run a diff to prove that they are the same. In the demo, you'll see wizards that simplify the CDC setup and delivery to Hazelcast, real-time CDC from the database, real-time delivery of changes to synchronize the cache, and custom real-time monitoring of the whole solution.

The first thing we need to do is set up the demo by using our test program to create a table in the MySQL database. Next, we use the test code to insert 200,000 rows of data. To perform the initial load from the database to the cache, we need a data flow. We use a database reader to extract data from MySQL, configured for our MySQL instance and test table.

The target is a Hazelcast writer, which is configured to map the table data into our cache. To load the cache, we deploy and start the application, which streams the table data from the database into the cache. The cache now has 200,000 entries. For Hot Cache, we also need to set up change data capture to stream live database changes to the cache.
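Conceptually, the initial load is a bulk read of the table followed by one cache write per row. The sketch below illustrates that idea only; it is not how Striim implements its reader or writer. It assumes an in-memory SQLite database in place of MySQL, a dict in place of the Hazelcast cache, and a hypothetical `initial_load` helper and `test_table` schema.

```python
import sqlite3

def initial_load(conn, table, key_column, cache, batch_size=1000):
    """Copy every row of `table` into `cache`, keyed by `key_column`."""
    cursor = conn.execute(f"SELECT * FROM {table}")
    columns = [description[0] for description in cursor.description]
    key_index = columns.index(key_column)
    loaded = 0
    while True:
        rows = cursor.fetchmany(batch_size)  # read in batches, not all at once
        if not rows:
            break
        for row in rows:
            cache[row[key_index]] = dict(zip(columns, row))
        loaded += len(rows)
    return loaded

# Assumption: SQLite stands in for MySQL, and a dict for the cache.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE test_table (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO test_table VALUES (?, ?)",
    [(i, f"row-{i}") for i in range(2500)],
)

cache = {}
loaded = initial_load(conn, "test_table", "id", cache)
print(loaded, len(cache))
```

After the load, the number of cache entries should equal the number of table rows, which is the same check the demo makes with its 200,000 entries.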

First, we configure properties to connect to the MySQL database, and Striim checks to make sure CDC will work properly. It tells you if the configuration needs changing and what to do. You can then browse and select the tables of interest.

Next, you enter the Hazelcast cluster information and mapping information in the form of a file linking database tables to cache objects. The last step finalizes the configuration of the Hazelcast target. The wizard results in a data flow from the MySQL CDC source to the Hazelcast target.

Once the data flow is deployed and started, the cache is kept in sync with changes made to the database. We run some modifications against the table in the form of inserts, updates, and deletes. We then check the table size and compare it with the number of entries in the cache. Both the table and the cache have 180,551 records.
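The core of keeping a cache in sync from a change stream is small: each insert or update overwrites the entry for its key, and each delete removes it. The sketch below assumes a hypothetical event shape (`op`, `key`, `row`) and a dict-backed cache; real CDC events and the Hazelcast writer carry more detail than this.

```python
def apply_change(cache, event):
    """Apply one change event to the cache (hypothetical event format)."""
    op = event["op"]
    key = event["key"]
    if op in ("INSERT", "UPDATE"):
        cache[key] = event["row"]   # upsert the latest row image
    elif op == "DELETE":
        cache.pop(key, None)        # tolerate a key that is already gone
    else:
        raise ValueError(f"unknown operation: {op}")

cache = {1: {"id": 1, "name": "a"}, 2: {"id": 2, "name": "b"}}
events = [
    {"op": "INSERT", "key": 3, "row": {"id": 3, "name": "c"}},
    {"op": "UPDATE", "key": 1, "row": {"id": 1, "name": "a2"}},
    {"op": "DELETE", "key": 2},
]
for event in events:
    apply_change(cache, event)

print(sorted(cache))
```

Because inserts and updates both overwrite by key, replaying the same event twice leaves the cache unchanged, which is a convenient property if events are ever redelivered.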

If we dump the MySQL data into one file and the cache data into another, a diff between the two files produces no output, proving that they are the same. In both cases, Striim can also monitor the data flow, using a separate data flow for analytics and fully customizable dashboards to meet your business requirements.
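The dump-and-diff check can be sketched with Python's standard `difflib`. The `dump` helper and the sample records are hypothetical; the important detail is that both dumps are sorted by key, so ordering differences between the database and the cache do not show up as false mismatches.

```python
import difflib

def dump(records):
    """Render records as sorted text lines so ordering cannot cause a diff."""
    return [f"{key}\t{records[key]}" for key in sorted(records)]

def diff_dumps(db_rows, cache_entries):
    """Return unified-diff lines; an empty list means the dumps match."""
    return list(
        difflib.unified_diff(
            dump(db_rows), dump(cache_entries), "db.txt", "cache.txt", lineterm=""
        )
    )

db_rows = {2: "b", 1: "a", 3: "c"}
in_sync_cache = {3: "c", 1: "a", 2: "b"}
stale_cache = {3: "c", 1: "a", 2: "old"}

in_sync_diff = diff_dumps(db_rows, in_sync_cache)
stale_diff = diff_dumps(db_rows, stale_cache)
print(len(in_sync_diff), len(stale_diff) > 0)
```

An empty diff is the result the demo relies on; any stale or missing entry would appear as removed and added lines.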

Cache Consistency

Are you having issues with cache consistency? Download Striim for Hazelcast to try it out yourself, or chat with a Striim technologist by scheduling a demo to learn more.