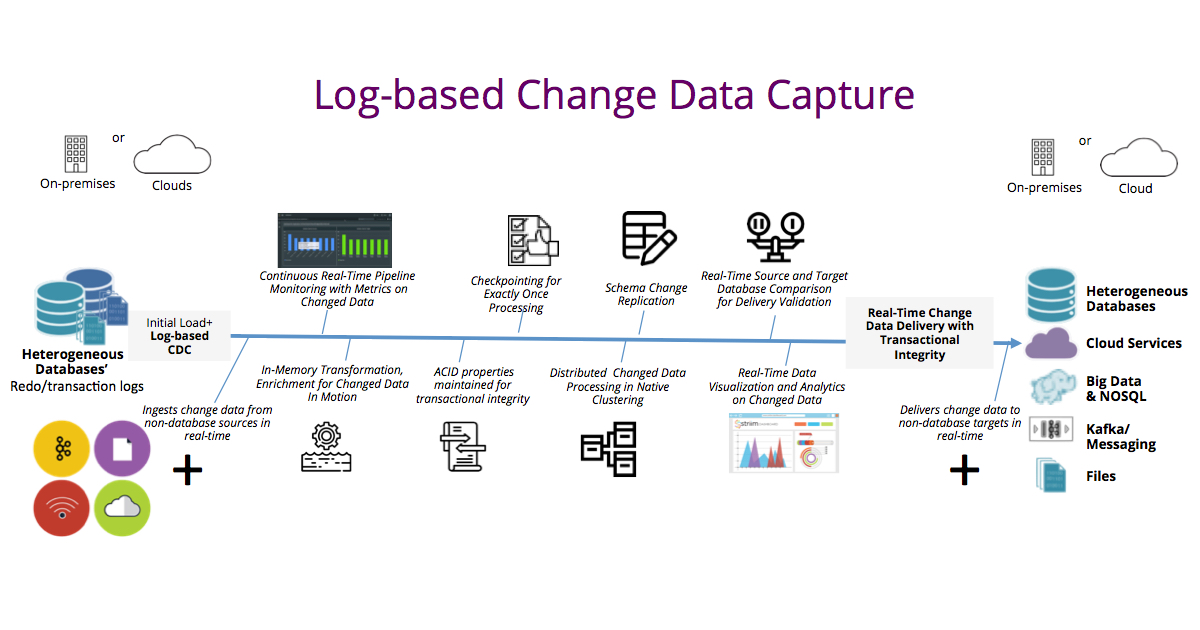

Change data capture, and in particular log-based change data capture, has become popular in the last two decades as organizations have discovered that sharing real-time transactional data from OLTP databases enables a wide variety of use cases. The fast adoption of cloud solutions requires building real-time data pipelines from in-house databases to ensure that the cloud systems are continually up to date. Turning enterprise databases into a streaming source, without the constraints of batch windows, lays the foundation for modern data architectures. In this blog post, I would like to discuss Striim’s CDC capabilities, along with the features that enhance change data capture as well as the processing and delivery of change data across a wide range of sources and targets.

Log-Based Change Data Capture

In our blog post about Change Data Capture, we explained why log-based change data capture is a better method for identifying and capturing change data. Striim uses the log-based CDC technique for the reasons we stated in that post: log-based CDC minimizes the overhead on the source systems, reducing the chance of performance degradation. It is also non-intrusive: it does not require changes to the application, as adding triggers to tables would. It is a lightweight yet high-performance way to ingest change data. While Striim reads DML operations (INSERTs, UPDATEs, DELETEs) from the database logs, the source systems continue to run with high performance for their end users.
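To make the idea concrete, here is a minimal Python sketch of what a log-based reader does conceptually. It is not Striim's implementation or API: the `LOG` record layout and the `committed_changes` function are hypothetical, and a real database log is a binary format read through vendor-specific interfaces. The point is only that the reader scans the log, buffers operations per transaction, and emits them once the transaction commits, without touching the application tables.

```python
from collections import defaultdict

# Hypothetical, simplified log records: (txn_id, kind, payload).
# A real transaction log is far richer; this layout is an assumption.
LOG = [
    (1, "INSERT", {"table": "orders", "id": 10}),
    (2, "UPDATE", {"table": "orders", "id": 7}),
    (1, "COMMIT", None),
    (2, "ROLLBACK", None),
    (3, "DELETE", {"table": "orders", "id": 3}),
    (3, "COMMIT", None),
]


def committed_changes(log):
    """Yield (txn_id, operations) for each committed transaction, in commit order."""
    pending = defaultdict(list)  # operations seen so far, keyed by transaction
    for txn_id, kind, payload in log:
        if kind == "COMMIT":
            # Emit the whole transaction as one unit.
            yield txn_id, pending.pop(txn_id, [])
        elif kind == "ROLLBACK":
            # Rolled-back work never reaches downstream consumers.
            pending.pop(txn_id, None)
        else:
            pending[txn_id].append((kind, payload))


changes = list(committed_changes(LOG))
for txn_id, operations in changes:
    print(txn_id, operations)
```

In this sketch, transaction 2 is rolled back and therefore never emitted, while transactions 1 and 3 are delivered whole.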

Striim’s strengths for real-time CDC are not limited to the ingestion point. Here are a few capabilities of the Striim platform that build on its real-time, log-based change data capture in enabling robust, end-to-end streaming data integration solutions:

Log-based CDC from heterogeneous databases for non-intrusive, low-impact real-time data ingestion

Striim uses log-based change data capture when ingesting from major enterprise databases, including Oracle, HPE NonStop, MySQL, PostgreSQL, and MongoDB. It minimizes CPU overhead on sources, does not require application changes, and avoids substantial management overhead to maintain the solution.

Ingestion from multiple, concurrent data sources to combine database transactions with semi-structured and unstructured data

Striim’s real-time data ingestion is not limited to databases and the CDC method. With Striim you can merge real-time transactional data from OLTP systems with real-time log data (i.e., machine data), messaging systems’ events, sensor data, NoSQL, and Hadoop data to obtain rich, comprehensive, and reliable information about your business.

End-to-end change data integration

Striim is designed from the ground up to ingest, process, secure, scale, monitor, and deliver change data across a diverse set of sources and targets in real time. It does so by offering several robust capabilities out of the box:

- Transaction integrity: When ingesting the change data from database logs, Striim moves committed transactions with the transactional context (i.e., ACID properties) maintained. Throughout the whole data movement, processing, and delivery steps, this transactional context is preserved so that users can create reliable replica databases, such as in the case of cloud bursting.

- In-flight change data processing: Striim offers out-of-the-box transformers and in-memory stream processing capabilities to filter, aggregate, mask, transform, and enrich change data while it is in motion. Using SQL-based continuous queries, Striim immediately turns change data into a consumable format for end users, without losing transactional context.

- Built-in checkpointing for reliability: As the data moves and gets processed through the in-memory components of the Striim platform, every operation is recorded and tracked by the solution. If there is an outage, Striim can replay the transactions from where it left off, without missing data or creating duplicates.

- Distributed processing in a clustered environment: Striim comes with a clustered environment for scalability and high availability. Without much effort, and using inexpensive hardware, you can scale out for very high data volumes with failover and recoverability assurances. With Striim, you don’t need to build your own clusters with third-party products.

- Continuous monitoring of change data streams: Striim continuously tracks change data capture, movement, processing, and delivery processes, as well as the end-to-end integration solution via real-time dashboards. With Striim’s transparent pipelines, you have a clear view into the health of your integration solutions.

- Schema change replication: When the schema of a source Oracle database is modified by a DDL statement, Striim applies the schema change to the target system without pausing the processes.

- Data delivery validation: For database sources and targets, Striim offers out-of-the-box data delivery verification. The platform continuously compares the source and target systems as the data is moving, validating that the databases are consistent and that all changed data has been applied to the target. In use cases where data loss must be avoided, such as migration to a new cloud data store, this feature greatly reduces migration risks.

- Concurrent, real-time delivery to a wide range of targets: With the same software, Striim can deliver change data in real time not only to on-premises databases but also to databases running in the cloud, cloud services, messaging systems, files, IoT solutions, and Hadoop and NoSQL environments. Striim’s integration applications can have multiple targets with concurrent real-time data delivery.

- Pre-packaged applications for initial load and CDC: Striim comes with example integration applications that include initial load and CDC for PostgreSQL environments. These integration applications enable setting up data pipelines in seconds, and serve as a template for other CDC sources as well.
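Of the capabilities above, checkpointing is perhaps the easiest to illustrate in a few lines. The following Python sketch is a toy model under stated assumptions, not Striim's mechanism: the `Pipeline` class, its in-memory `checkpoint`, and the `fail_at` parameter are all hypothetical. It shows the general idea of recording the position of the last applied event so that a restart resumes from that position rather than from the beginning.

```python
class Pipeline:
    """Toy pipeline that records the last applied log position as a checkpoint."""

    def __init__(self):
        self.checkpoint = 0  # position of the next event to apply
        self.target = []     # stand-in for the target system

    def run(self, events, fail_at=None):
        """Apply events starting at the checkpoint; optionally simulate an outage."""
        for position in range(self.checkpoint, len(events)):
            if position == fail_at:
                raise RuntimeError("simulated outage")
            self.target.append(events[position])
            # Advance the checkpoint only after the event has been applied.
            self.checkpoint = position + 1


events = ["e0", "e1", "e2", "e3", "e4"]
pipeline = Pipeline()

try:
    pipeline.run(events, fail_at=3)  # outage before e3 is applied
except RuntimeError:
    pass

pipeline.run(events)  # restart: resumes at e3, not at e0
print(pipeline.target)
```

In this toy model the apply step and the checkpoint update cannot be separated by a failure. A production system has to make the two atomic, or make the apply step idempotent, to get the same no-loss, no-duplicate behavior.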

Turning Change Data to Time-Sensitive Insights

In addition to building real-time integration solutions for change data, Striim can perform streaming analytics with flexible time windows, allowing you to gain immediate insights from your data in motion. For example, if you are moving financial transactions using Striim, you can build real-time dashboards that alert on potential fraud cases before Striim delivers the data to your analytics solution.
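As a rough illustration of time-windowed analytics on a transaction stream, here is a small Python sketch. It is not Striim's query language or API; the `fraud_alerts` function, the 60-second window, the threshold of three transactions, and the sample data are all assumptions chosen for the example. It flags a card when it makes more than `max_count` transactions within a sliding window.

```python
from collections import defaultdict, deque


def fraud_alerts(transactions, window_seconds=60, max_count=3):
    """Yield (card, timestamp) when a card exceeds max_count transactions in the window.

    `transactions` is an iterable of (timestamp, card, amount) tuples
    ordered by timestamp (in seconds).
    """
    recent = defaultdict(deque)  # per-card timestamps inside the current window
    for timestamp, card, _amount in transactions:
        window = recent[card]
        window.append(timestamp)
        # Evict timestamps that have fallen out of the sliding window.
        while window and window[0] <= timestamp - window_seconds:
            window.popleft()
        if len(window) > max_count:
            yield card, timestamp


# Hypothetical sample stream: (timestamp, card, amount).
transactions = [
    (0, "card-A", 20.0),
    (10, "card-A", 35.0),
    (15, "card-B", 12.0),
    (20, "card-A", 50.0),
    (30, "card-A", 75.0),
    (200, "card-A", 5.0),
]

alerts = list(fraud_alerts(transactions))
print(alerts)
```

Here the fourth `card-A` transaction within 60 seconds raises an alert, while the much later transaction at second 200 starts a fresh window and does not.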

Log-based change data capture is the modern way to turn databases into streaming data sources. However, ingesting the change data is only the first of many concerns that integration solutions should address. You can learn more about Striim’s CDC offering by scheduling a demo with a Striim technologist or experience its enterprise-grade streaming integration solution first-hand by downloading a free trial.