Not all data pipelines are created equal. Too many are brittle, rigid, and too slow for the pace of modern business, and they hold organizations back from delivering impact with their data.

Yet data pipelines are the backbone of the data-driven enterprise and a vital piece of the data infrastructure puzzle. It’s time to take them seriously and to consider how best to design and build them for visibility, speed, reliability, and scale.

This article provides a clear, practical guide to modern data pipelines. We’ll explore what they are, why they matter, and how they work in the real world, from enabling real-time analytics and seamless cloud migrations to powering advanced data applications.

We’ll break down the essential components, compare different architectural approaches, and offer a step-by-step guide to building pipelines that deliver true business value.

What are Data Pipelines?

At their core, data pipelines automate the movement of data from various sources to a destination. But smart data pipelines do far more than just move data; they are sophisticated workflows that include crucial steps for in-flight data transformation, quality validation, and data governance. You could think of them as the circulatory system for your organization’s datasets, ensuring that information flows efficiently and reliably to the apps and visualization tools where it’s needed most.

Dmitriy Rudakov, Director of Solutions Architecture at Striim, describes a data pipeline as “a program that moves data from source to destination and provides transformations when data is inflight.”
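To make that definition concrete, here is a minimal Python sketch of the three parts Rudakov names: a source, an in-flight transformation, and a destination. The `run_pipeline` and `normalize` functions, the order fields, and the rule that drops test orders are all hypothetical; a production pipeline would add checkpointing, parallelism, and error handling.

```python
from typing import Callable, Iterable, List, Optional

Record = dict


def run_pipeline(
    source: Iterable[Record],
    transform: Callable[[Record], Optional[Record]],
    sink: List[Record],
) -> int:
    """Move records from source to sink, transforming each one in flight.

    A transform that returns None drops the record. Returns the number
    of records delivered to the sink.
    """
    delivered = 0
    for record in source:
        result = transform(record)
        if result is None:
            continue  # filtered out in flight, never reaches the sink
        sink.append(result)
        delivered += 1
    return delivered


def normalize(order: Record) -> Optional[Record]:
    """Hypothetical transform: drop test orders and convert cents to dollars."""
    if order.get("is_test"):
        return None
    return {"id": order["id"], "amount_usd": order["amount_cents"] / 100}


orders = [
    {"id": 1, "amount_cents": 1999, "is_test": False},
    {"id": 2, "amount_cents": 500, "is_test": True},
]
warehouse: List[Record] = []
count = run_pipeline(orders, normalize, warehouse)
```

Because the transform runs on each record as it passes through, filtering and reshaping happen before anything reaches the destination.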

These pipelines are the foundation of any modern data strategy. They are the essential infrastructure behind everything from real-time analytics to the complex data synchronization that a successful cloud migration requires. Without robust, well-designed data pipelines, data remains trapped in silos, insights grow stale, and business opportunities are missed.

Real-World Use Cases for Data Pipelines

Data pipelines are no longer confined to a data engineer’s to-do list. Today, they are the engines behind business value, powering everything from hyper-personalized customer experiences to real-time decision making. Let’s explore a few ways they create impact.

Real-Time Analytics and Business Intelligence

Yesterday’s data is no longer sufficient for modern businesses. They need a continuous stream of fresh data to inform decisions, drive dynamic customer segmentation, and deliver up-to-the-minute operational KPIs. This is where batch pipelines fall short: they cannot deliver the low-latency, always-on data streams that businesses now demand. A streaming platform like Striim closes this gap by using real-time Change Data Capture (CDC) to feed enriched, transaction-level data into cloud warehouses like Snowflake or BigQuery as soon as changes are committed at the source.

Cloud Migration

Data pipelines are essential for any cloud modernization strategy. They act as the bridge, synchronizing on-premises databases with cloud systems to ensure consistency during a migration. The key is to achieve this without disrupting business operations. Zero-downtime replication and the ability to handle schema evolution are crucial—areas where Striim excels. By delivering real-time change data and managing schema changes continuously, Striim ensures that legacy and modern systems remain perfectly in sync, de-risking complex migration projects.

IoT, Edge, and Operational Alerting

From sensor data in a manufacturing plant to telemetry from a fleet of vehicles, modern businesses are inundated with high-volume event data. Data pipelines are crucial for turning this firehose of information into actionable insights. For these use cases, performance and the ability to filter data at the source are paramount. Striim’s in-flight processing capabilities allow teams to filter, aggregate, and transform data streams on the edge or in the cloud, reducing noise and surfacing key events for real-time alerting. With sub-second latency and the power of custom transformations, businesses can react to operational issues instantly, not hours later when the data is already stale.
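The filter, aggregate, and alert pattern described above can be sketched in a few lines of Python. This is an illustrative example, not Striim’s implementation: it assumes readings arrive as hypothetical `(device_id, value)` pairs, drops missing values at the edge, and raises an alert when a device’s rolling average over its last few readings crosses an arbitrary threshold.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, List, Optional, Tuple


def detect_alerts(
    readings: Iterable[Tuple[str, Optional[float]]],
    window: int = 3,
    threshold: float = 80.0,
) -> List[Tuple[str, float]]:
    """Return (device_id, rolling_average) each time a device's average over
    its last `window` valid readings exceeds `threshold`."""
    recent: Dict[str, Deque[float]] = defaultdict(lambda: deque(maxlen=window))
    alerts: List[Tuple[str, float]] = []
    for device_id, value in readings:
        if value is None:
            continue  # filter: discard missing sensor values at the edge
        buffer = recent[device_id]
        buffer.append(value)  # deque(maxlen=...) evicts the oldest reading
        if len(buffer) == window:
            average = sum(buffer) / window  # aggregate over the window
            if average > threshold:
                alerts.append((device_id, average))
    return alerts


stream = [
    ("pump-1", 70.0), ("pump-1", 85.0), ("pump-1", 95.0),
    ("pump-2", 60.0), ("pump-1", None), ("pump-1", 90.0),
]
alerts = detect_alerts(stream)
```

Aggregating over a small window, rather than alerting on every individual reading, is one common way to keep a single transient spike from triggering an alert.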

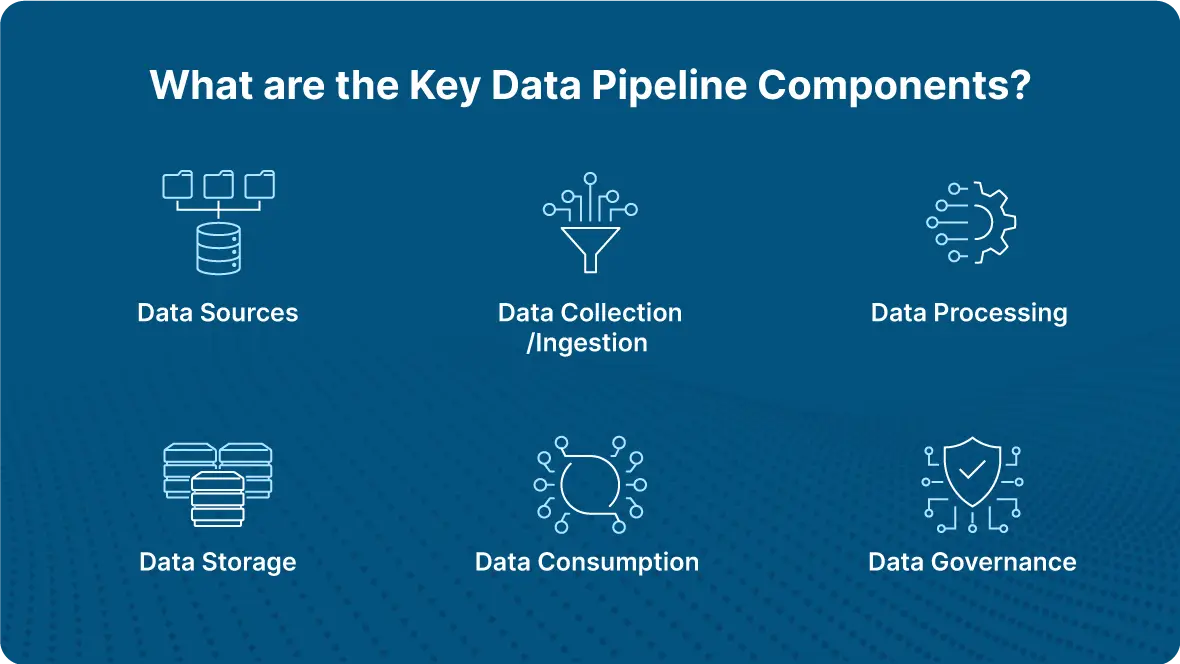

Key Components of a Data Pipeline

To design and build a data pipeline, you need to understand its essential building blocks. Each component plays a vital role in ensuring data flows securely and reliably from source to destination. Understanding these stages helps you identify where your current data pipeline architecture might be falling short or where you can introduce modern approaches to improve performance.

Data Sources

This is where your data comes from. Sources can be incredibly diverse and include structured data from relational databases (like Oracle or PostgreSQL), semi-structured data from APIs and webhooks, or unstructured data from application logs, IoT sensors, and clickstreams.

Data Collection and Ingestion

This is the process of collecting raw data from its source. The method depends on the source and the desired freshness of the data. It can range from scheduled batch jobs that pull data periodically to real-time streaming ingestion that captures events as they happen using techniques like Change Data Capture (CDC).
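Log-based CDC reads committed changes from a database’s transaction log and emits them as a stream of insert, update, and delete events. The sketch below shows only the consuming side: replaying such events, in commit order, against a keyed replica. The event shape (`op`, `key`, `after`) is a hypothetical simplification; real CDC tools emit richer metadata such as transaction identifiers and before-images.

```python
from typing import Any, Dict, Iterable

# Hypothetical change-event shape: {"op": ..., "key": ..., "after": {...}}
ChangeEvent = Dict[str, Any]


def apply_changes(target: Dict[Any, dict], events: Iterable[ChangeEvent]) -> None:
    """Replay change events, in commit order, against a keyed target table."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            target[key] = dict(event["after"])  # upsert the new row image
        elif op == "delete":
            target.pop(key, None)  # tolerate deletes for rows never seen
        else:
            raise ValueError(f"unknown operation: {op!r}")


replica: Dict[Any, dict] = {}
apply_changes(replica, [
    {"op": "insert", "key": 7, "after": {"id": 7, "status": "new"}},
    {"op": "update", "key": 7, "after": {"id": 7, "status": "shipped"}},
    {"op": "insert", "key": 8, "after": {"id": 8, "status": "new"}},
    {"op": "delete", "key": 8},
])
```

Order matters here: applying the update before the insert, or the delete before the insert, would leave the replica out of sync with the source.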

Data Processing

New data rarely arrives in a clean, usable format. Data processing fixes that: data is cleaned, transformed, enriched with other data sources, filtered to remove noise, or masked to protect sensitive information. This can range from simple field changes to complex joins and aggregations that get data into the shape and format it needs to deliver value.
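A single processing step often combines several of these operations. The following Python sketch cleans a country code, filters out heartbeat noise, and enriches each event from a lookup table. The field names and the `REGION_BY_COUNTRY` table are hypothetical; in a real pipeline the reference data would usually come from another system rather than a hard-coded dictionary.

```python
from typing import Dict, Optional

# Hypothetical reference data used for enrichment.
REGION_BY_COUNTRY: Dict[str, str] = {"US": "NA", "CA": "NA", "DE": "EMEA"}


def process(event: dict) -> Optional[dict]:
    """Clean, filter, and enrich one event; return None to drop it."""
    # Clean: trim whitespace and normalize case on the country code.
    country = (event.get("country") or "").strip().upper()
    # Filter: drop heartbeat noise and records with no usable country.
    if event.get("type") == "heartbeat" or not country:
        return None
    # Enrich: look up the region; unknown countries get a placeholder.
    return {
        "user_id": event["user_id"],
        "country": country,
        "region": REGION_BY_COUNTRY.get(country, "UNKNOWN"),
    }
```

For example, `process({"user_id": 42, "type": "click", "country": " us "})` returns a record with `country` set to `"US"` and `region` set to `"NA"`, while any heartbeat event returns `None` and is dropped.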

Data Storage

This is the destination where processed data is stored for analysis and consumption. Common destinations include cloud data warehouses like Snowflake, Amazon Redshift, or Google BigQuery; data lakes like Amazon S3 or Azure Data Lake Storage; or real-time messaging platforms like Apache Kafka that feed downstream applications.

Data Consumption

This is the final stage where different business units get value from their data. Downstream applications and users like data scientists consume the processed data for their individual purposes, such as powering BI dashboards (e.g., Tableau, Power BI), training machine learning models, or feeding operational business applications.

Data Governance

Governance isn’t a single stage but a continuous process that overlays the entire pipeline. It includes managing access controls, ensuring high data quality, handling schema management to prevent breakages, and maintaining detailed audit logs for compliance and security purposes.

Types of Data Pipelines

Data pipelines look and operate differently depending on the organization and its intended outcome. The architectural approach, meaning how and when data moves and is transformed, directly affects the quality of your insights, your operational costs, and the end-user experience.

Batch Pipelines

Batch pipelines are the traditional workhorses of the data world. They operate on a schedule, collecting and moving large volumes of data at set intervals (e.g., hourly or daily). While batch pipelines are reliable for tasks like end-of-day reporting, their inherent latency makes them unsuitable for use cases that require immediate action. By the time the data arrives, the opportunity may have already passed.

Streaming Pipelines

In contrast, streaming pipelines are event-driven, processing data continuously as it is generated. These real-time systems are designed for low-latency, high-throughput scenarios. This is Striim’s core strength, combining log-based Change Data Capture (CDC) with a powerful streaming ETL engine to deliver and process data in milliseconds. This approach is ideal for fraud detection, real-time analytics, and operational monitoring where speed is essential.

Lambda and Kappa Architectures

To get the benefits of both batch and streaming, some organizations adopt more complex architectures. The Lambda architecture uses two separate paths for data—a slow batch layer for comprehensive analysis and a fast streaming layer for real-time views. The Kappa architecture attempts to simplify this by handling all processing with a single streaming engine. However, both can be complicated to build and maintain. Striim simplifies this challenge by offering a unified, streaming-first platform that can handle both real-time and historical data processing without the overhead of managing multiple, complex codebases.

ETL vs. ELT vs. Streaming ETL

The debate between ETL (Extract, Transform, and Load) and ELT (Extract, Load, Transform) comes down to when you process your data. Traditional ETL pipelines transform data before loading it into a warehouse. Modern ELT loads raw data first and uses the power of the cloud warehouse to perform transformations. Striim champions a third model: Streaming ETL. By processing and transforming data in-flight, before it ever lands in storage, businesses can dramatically reduce time-to-insight and minimize storage costs, as only clean, relevant data is loaded.
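The difference between ETL and ELT is where the transformation runs, and a small example makes that visible. This sketch uses Python’s built-in `sqlite3` module as a stand-in for a cloud warehouse; the table names and sample rows are hypothetical. Both paths end with the same clean `orders` table, but the ELT path also keeps the raw rows in the destination.

```python
import sqlite3

# Hypothetical raw extract: (email, amount as text); one row is missing an email.
RAW = [("a@x.com", "19.99"), ("", "5.00"), ("b@y.com", "7.50")]


def etl(conn: sqlite3.Connection) -> None:
    """Transform in the pipeline first, then load only the clean rows."""
    clean = [(email, float(amount)) for email, amount in RAW if email]
    conn.execute("CREATE TABLE orders (email TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", clean)
    conn.commit()


def elt(conn: sqlite3.Connection) -> None:
    """Load raw rows as-is, then transform inside the destination with SQL."""
    conn.execute("CREATE TABLE raw_orders (email TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?)", RAW)
    conn.execute(
        "CREATE TABLE orders AS "
        "SELECT email, CAST(amount AS REAL) AS amount "
        "FROM raw_orders WHERE email <> ''"
    )
    conn.commit()
```

Streaming ETL follows the same ordering as `etl`, but applies the transformation to each record continuously as it arrives rather than to a scheduled batch.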

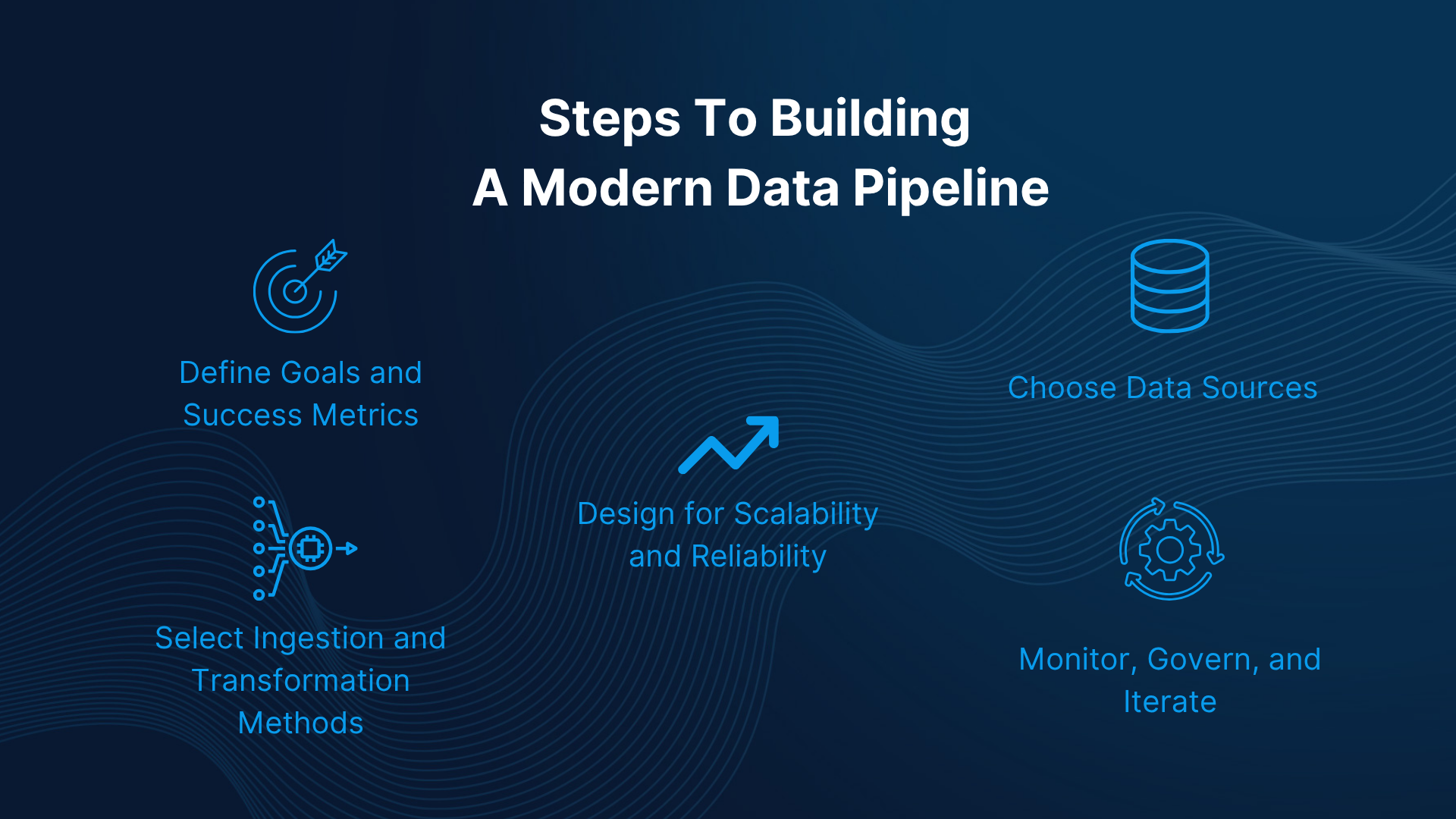

How to Build a Data Pipeline

Designing a robust data pipeline is about more than just connecting tools; it requires a strategic approach that starts with clear objectives and ends with a scalable, reliable infrastructure. Building a modern pipeline that delivers real value—not just data movement—involves a series of deliberate steps.

Define Goals and Success Metrics

Start with the business need. What problem are you trying to solve? Are you aiming to power real-time analytics, enable an operational sync between two systems, or meet a new compliance requirement? Your goals will directly shape every subsequent decision, from which data sources you select to how you process and deliver the final output.

Choose Data Sources

Next, identify the necessary data sources. You’ll need to consider the data’s format (structured, unstructured), its frequency (batch, streaming), and its compatibility with existing systems. This is where a platform with a wide array of connectors becomes invaluable. For example, a common goal is to feed real-time operational data into a cloud warehouse, which would require a pipeline that uses CDC to capture changes from a source like Oracle and deliver them to a target like Snowflake.

Select Ingestion and Transformation Methods

How will you get data out of your sources and what will you do with it? Compare different ingestion options like API polling, file-based transfers, or log-based CDC. Your choice will depend on your latency requirements and the source system’s capabilities. Similarly, decide whether you need to perform transformations in-flight (streaming ETL) to enrich or filter data, or if you will load it raw and transform it in the destination (ELT).
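Polling-based ingestion is often implemented with a high-water mark: each run fetches only rows whose `updated_at` value is newer than the last one seen. The Python sketch below illustrates the idea with a hypothetical in-memory table. It also shows a limitation that makes log-based CDC attractive: a polling query cannot see rows that were hard-deleted, and it observes only the latest state of a row, not every intermediate change between polls.

```python
from typing import List, Tuple

# Hypothetical row shape: (id, payload, updated_at as epoch seconds).
Row = Tuple[int, str, int]


def poll_incremental(rows: List[Row], watermark: int) -> Tuple[List[Row], int]:
    """Return rows modified after `watermark`, oldest first, plus the new
    watermark to persist for the next poll."""
    # Note: a strict ">" can skip rows that share the watermark timestamp but
    # become visible after this poll; real jobs often re-read a small overlap.
    changed = sorted((r for r in rows if r[2] > watermark), key=lambda r: r[2])
    new_watermark = changed[-1][2] if changed else watermark
    return changed, new_watermark


table: List[Row] = [(1, "a", 100), (2, "b", 105)]
first_batch, mark = poll_incremental(table, watermark=0)
table.append((3, "c", 110))
table.remove((1, "a", 100))  # a hard delete: no later poll will report it
second_batch, mark = poll_incremental(table, watermark=mark)
```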

Design for Scalability and Reliability

A pipeline that works for a thousand events a day may fail at a million. Design your architecture with scalability and reliability in mind from day one. This means planning for failover, ensuring high throughput and low latency, and building robust error-handling and recovery mechanisms. Your pipeline must be resilient enough to handle unexpected spikes in data volume and recover gracefully from failures.
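Retrying transient failures with exponential backoff, and routing records that keep failing to a dead-letter queue, are two widely used error-handling patterns. This Python sketch combines them. It assumes the hypothetical `send` callable raises `ConnectionError` for transient problems, and the attempt count and delays are illustrative rather than recommended values.

```python
import random
import time
from typing import Callable, List


def deliver_with_retry(
    records: List[dict],
    send: Callable[[dict], None],
    max_attempts: int = 4,
    base_delay: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> List[dict]:
    """Send each record, retrying transient failures with exponential backoff
    plus jitter. Records that still fail are returned as a dead-letter list
    for later inspection instead of halting the whole pipeline."""
    dead_letter: List[dict] = []
    for record in records:
        for attempt in range(1, max_attempts + 1):
            try:
                send(record)
                break  # delivered; move on to the next record
            except ConnectionError:
                if attempt == max_attempts:
                    dead_letter.append(record)
                else:
                    delay = base_delay * 2 ** (attempt - 1)  # 0.1, 0.2, 0.4, ...
                    sleep(delay + random.uniform(0, delay))  # jitter spreads retries
    return dead_letter
```

Passing `sleep` as a parameter keeps the function testable: a test can substitute a no-op instead of actually waiting.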

Monitor, Govern, and Iterate

A data pipeline is not a “set it and forget it” project. Implement comprehensive monitoring and observability from the very beginning. You need real-time visibility into the health of your pipeline through logs, alerts, and data lineage tracking. This allows you to proactively address issues, govern data access, and continuously iterate on your design to improve performance and meet new business requirements.
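Observability starts with a few basic signals. The following Python sketch tracks end-to-end lag (processing time minus event time) and the error rate, and records an alert message when either crosses a threshold. The `PipelineMonitor` class and its default thresholds are hypothetical; in practice these metrics would be exported to a monitoring system rather than kept in a list.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PipelineMonitor:
    """Collects per-record health signals and threshold-based alert messages."""
    max_lag_seconds: float = 5.0
    max_error_rate: float = 0.01
    processed: int = 0
    errors: int = 0
    alerts: List[str] = field(default_factory=list)

    def record(self, event_time: float, processed_time: float, ok: bool) -> None:
        """Register one processed record and check its end-to-end lag."""
        self.processed += 1
        if not ok:
            self.errors += 1
        lag = processed_time - event_time
        if lag > self.max_lag_seconds:
            self.alerts.append(f"lag {lag:.1f}s exceeds {self.max_lag_seconds}s")

    def error_rate(self) -> float:
        return self.errors / self.processed if self.processed else 0.0

    def check(self) -> None:
        """Periodic check, e.g. once per minute, on aggregate error rate."""
        rate = self.error_rate()
        if rate > self.max_error_rate:
            self.alerts.append(f"error rate {rate:.1%} exceeds {self.max_error_rate:.1%}")
```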

Common Challenges in Data Pipelines

Even the best-designed pipelines can encounter difficulties. Understanding the common pitfalls can help you build more resilient systems and choose the right tools to overcome them.

Latency and Data Freshness

Modern businesses demand real-time insights, but batch pipelines deliver stale data. A long delay between an event occurring and its data becoming available for analysis is one of the most common pipeline challenges. Striim solves this with log-based CDC, enabling continuous, sub-second data synchronization that keeps downstream analytics and applications perfectly current.

Poor Data Quality and Schema Drift

Poor data quality can corrupt analytics, break applications, and erode trust. A related challenge is schema drift, where changes in the source data structure (like a new column) cause downstream processes to fail. Striim addresses this head-on with in-pipeline data validation and schema evolution capabilities, which automatically detect and propagate source schema changes to the target, ensuring pipeline resilience.
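Handling the most common form of drift, a new column appearing in the source, can be sketched as a comparison between each incoming record and the known target schema. This example is not how Striim implements schema evolution; the `detect_drift` function and its Python-to-SQL type mapping are hypothetical, and it ignores dropped columns and type changes, which need their own policies.

```python
from typing import Dict, List

# Hypothetical mapping from Python value types to target column types.
SQL_TYPES = {bool: "BOOLEAN", int: "BIGINT", float: "DOUBLE", str: "TEXT"}


def detect_drift(table: str, known: Dict[str, str], record: dict) -> List[str]:
    """Compare an incoming record with the known target schema and return
    ALTER TABLE statements for any new columns. Updates `known` in place.

    Assumes column names are trusted identifiers; real code should quote them.
    """
    ddl: List[str] = []
    for column, value in record.items():
        if column not in known:
            sql_type = SQL_TYPES.get(type(value), "TEXT")  # fall back to TEXT
            known[column] = sql_type
            ddl.append(f"ALTER TABLE {table} ADD COLUMN {column} {sql_type}")
    return ddl


schema = {"id": "BIGINT", "email": "TEXT"}
statements = detect_drift("customers", schema, {"id": 1, "email": "a@x.com", "loyalty_tier": "gold"})
```

Once a new column has been recorded, later records that carry it produce no further DDL, so the check is cheap to run on every record.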

Pipeline Complexity and Tool Sprawl

Many data teams are forced to stitch together a complex web of single-purpose tools for ingestion, transformation, and monitoring. This “tool sprawl” increases complexity, raises costs, and makes pipelines brittle and hard to manage. Striim unifies the entire pipeline into a single, integrated platform, reducing operational burden and simplifying the data stack.

Monitoring, Observability, and Alerting

When a pipeline fails, how quickly will you know? Without real-time visibility, troubleshooting becomes a painful, reactive exercise. Modern pipelines require built-in observability. Striim provides comprehensive health dashboards, detailed logs, and proactive alerting, giving teams the tools they need to monitor performance and recover from errors quickly.

Governance and Compliance

Meeting regulations like GDPR and HIPAA requires strict control over who can access data and how it’s handled. This is challenging in complex pipelines where data moves across multiple systems. Striim helps enforce governance with features to mask sensitive data in-flight, create detailed audit trails, and manage access controls, ensuring compliance is built into your data operations.
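One common way to mask data in flight is keyed pseudonymization: replace each sensitive value with an HMAC so the original cannot be read, while equal inputs still produce equal tokens that can be joined on. The sketch below uses Python’s standard `hmac` and `hashlib` modules and appends an audit entry for each masked field. The field list is hypothetical, and a real deployment would load the key from a secrets manager.

```python
import hashlib
import hmac
from datetime import datetime, timezone
from typing import Dict, List

SENSITIVE_FIELDS = {"ssn", "email"}  # hypothetical masking policy


def mask_record(record: dict, key: bytes, audit_log: List[Dict[str, str]]) -> dict:
    """Return a copy of `record` with sensitive values replaced by keyed
    HMAC-SHA256 tokens, appending one audit entry per masked field."""
    masked = dict(record)
    for name in sorted(record.keys() & SENSITIVE_FIELDS):
        digest = hmac.new(key, str(record[name]).encode("utf-8"), hashlib.sha256)
        # Truncated for readability; keep the full digest if collisions matter.
        masked[name] = digest.hexdigest()[:16]
        audit_log.append({
            "field": name,
            "action": "masked",
            "at": datetime.now(timezone.utc).isoformat(),
        })
    return masked
```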

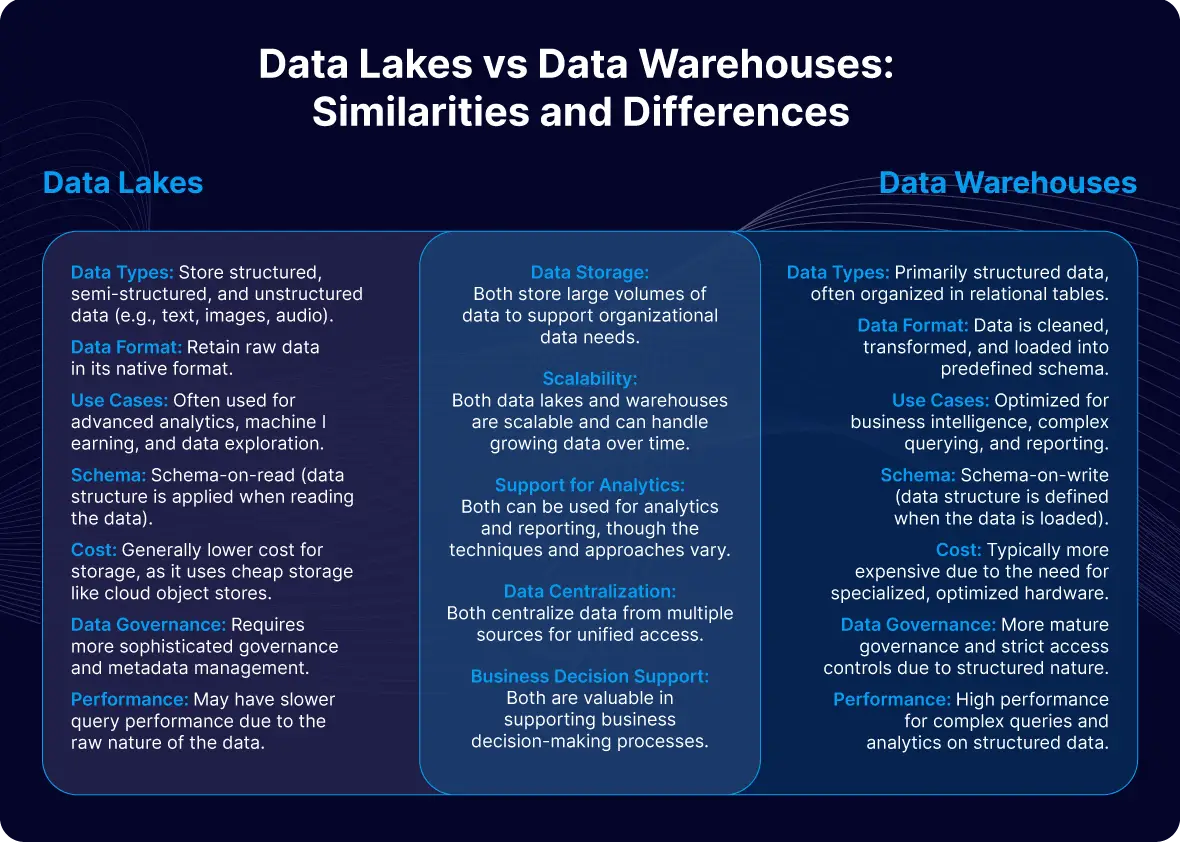

Data Lakes vs. Data Warehouses for Data Pipelines

Choosing where you store data is just as important as deciding how it gets there. The storage destination—typically a data lake or a data warehouse—will shape your pipeline’s design, cost, and capabilities. Understanding the differences is key to building an effective data architecture.

Differences in Storage Format and Schema

The fundamental difference lies in how they handle data structure. A data warehouse stores data in a highly structured, predefined format (schema-on-write). Data is cleaned and modeled before being loaded, making it optimized for fast, reliable business intelligence and reporting.

A data lake, by contrast, is a vast repository that stores raw data in its native format, structured or unstructured (schema-on-read). The structure is applied when the data is pulled for analysis, offering immense flexibility for data science, machine learning, and exploratory analytics where the questions aren’t yet known.
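The contrast between schema-on-write and schema-on-read fits in a short Python sketch. The warehouse path validates every record against a fixed, hypothetical schema before storing it; the lake path stores raw JSON untouched and applies structure only when a query reads it. Lists stand in for the real storage systems.

```python
import json
from typing import List

WAREHOUSE_SCHEMA = {"order_id": int, "amount": float}  # hypothetical table definition


def write_to_warehouse(table: List[dict], record: dict) -> None:
    """Schema-on-write: reject records that do not match the schema up front."""
    if set(record) != set(WAREHOUSE_SCHEMA):
        raise ValueError(f"columns {sorted(record)} do not match schema")
    for column, expected in WAREHOUSE_SCHEMA.items():
        if not isinstance(record[column], expected):
            raise TypeError(f"{column} must be {expected.__name__}")
    table.append(record)


def write_to_lake(lake: List[str], raw: str) -> None:
    """Schema-on-read: store the raw payload exactly as received."""
    lake.append(raw)


def read_amounts(lake: List[str]) -> List[float]:
    """Apply structure at query time, skipping payloads without an amount."""
    amounts: List[float] = []
    for raw in lake:
        doc = json.loads(raw)
        if "amount" in doc:
            amounts.append(float(doc["amount"]))
    return amounts
```

The warehouse rejects a malformed record immediately, while the lake accepts anything and pushes that decision to whoever queries it later.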

Choosing the Right Architecture for Your Pipeline

It’s not necessarily an either/or choice; many modern architectures use both.

- Use a data warehouse when your pipeline’s primary goal is to power standardized BI dashboards and reports with consistent, high-quality data.

- Use a data lake when you need to store massive volumes of diverse data for future, undefined use cases, or to train machine learning models that require access to raw, unprocessed information.

A unified platform like Striim supports this hybrid reality. You can build a single data pipeline that delivers raw, real-time data to a data lake for archival and exploration, while simultaneously delivering structured, transformed data to a data warehouse to power critical business analytics.
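The hybrid pattern amounts to fanning one stream out to two destinations. In this Python sketch, lists stand in for a data lake and a data warehouse, and `transform` is any function that returns a shaped record or `None` to exclude it; all of these names are hypothetical and do not reflect Striim’s API.

```python
from typing import Callable, List, Optional


def fan_out(
    events: List[dict],
    lake: List[dict],
    warehouse: List[dict],
    transform: Callable[[dict], Optional[dict]],
) -> None:
    """Deliver every raw event to the lake and the transformed version,
    if any, to the warehouse in a single pass over the stream."""
    for event in events:
        lake.append(dict(event))  # raw copy for archival and exploration
        shaped = transform(event)
        if shaped is not None:
            warehouse.append(shaped)  # clean, modeled record for BI


def to_order(event: dict) -> Optional[dict]:
    """Hypothetical transform: keep only events with an amount, typed as float."""
    if "amount" not in event:
        return None
    return {"id": event["id"], "amount": float(event["amount"])}
```

Reading the stream once and writing to both targets keeps the lake and the warehouse consistent with each other, since they are fed from the same events.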

Choosing Tools and Tech to Power Your Data Pipelines

The data ecosystem is crowded. Every tool claims to be “real-time” or “modern,” but few offer true end-to-end data management capabilities. Navigating this landscape requires understanding the different categories of tools and where they fit.

Popular Open-Source and Cloud-Native Tools

The modern data stack is filled with powerful, specialized tools. Apache Kafka is the de facto standard for streaming data pipelines, but it requires significant expertise to manage. Airflow is a popular choice for orchestrating complex batch workflows. Fivetran excels at simple, batch-based data ingestion (ELT), and dbt has become the go-to for performing transformations inside the data warehouse. While each is strong in its niche, they often need to be stitched together, creating the tool sprawl and complexity discussed earlier.

Real-Time CDC and Stream Processing

This is where Striim occupies a unique position. It is not just another workflow tool or a simple data mover; it is a unified, real-time integration platform. By combining enterprise-grade, log-based Change Data Capture (CDC) for ingestion, a powerful SQL-based stream processing engine for in-flight transformation, and seamless delivery to dozens of targets, Striim replaces the need for multiple disparate tools. It provides a single, cohesive solution for building, managing, and monitoring real-time data pipelines from end to end.

Why Choose Striim for Your Data Pipelines?

Striim delivers real-time data through Change Data Capture (CDC), ensuring sub-second latency from source to target. But it’s about more than just speed. It’s a complete, unified platform designed to solve the most complex data integration challenges. Global enterprises trust Striim to power their mission-critical data pipelines because of its:

- Built-in, SQL-based Stream Processing: Filter, transform, and enrich data in-flight using a familiar SQL-based language.

- Low-Code/No-Code Flow Designer: Accelerate development with a drag-and-drop UI and automated data pipelines, while still offering extensibility for complex scenarios.

- Multi-Cloud Delivery: Seamlessly move data between on-premises systems and any major cloud platform.

- Enterprise-Grade Reliability: Ensure data integrity with built-in failover, recovery, and exactly-once processing guarantees.

Ready to stop wrestling with brittle pipelines and start building real-time data solutions? Book a demo with our team or start your free trial today to discover Striim for yourself.