As enterprises look to adopt AI and deliver better user experiences, traditional batch ETL has become a bottleneck. In a market that demands instant personalization, fraud detection in milliseconds, and up-to-the-second operational intelligence, “next-day data” is no longer enough.

Here is the reality: the problem is structural. Batch processing creates a waiting game. By the time your data is extracted, processed, and loaded, the moment has passed. You are looking at history, not what is happening right now. This gap stops you from reacting when it actually counts. Plus, legacy pipelines are brittle. A single schema change can break the flow, and without deep observability, you are often flying blind.

This guide explores the solution: Change Data Capture (CDC). We will cover what CDC is, the mechanics of how it works, and how to implement a strategy that drives trusted, unified data for your enterprise applications.

What is Change Data Capture (CDC)?

Change Data Capture (CDC) is a data integration process that identifies and tracks changes to data in a source database and delivers them in real time to downstream systems.

CDC solves the “freshness” problem in modern data ecosystems. It eliminates the latency inherent in batch extraction by moving data continuously as transactions occur. This technology is not just a utility. It is foundational to real-time architectures. CDC enables streaming data pipelines, zero-downtime cloud migrations, and the continuous hydration of data lakes and lakehouses. It ensures your analytics and AI models are powered by reality, not a snapshot from yesterday.

How Does Change Data Capture Work?

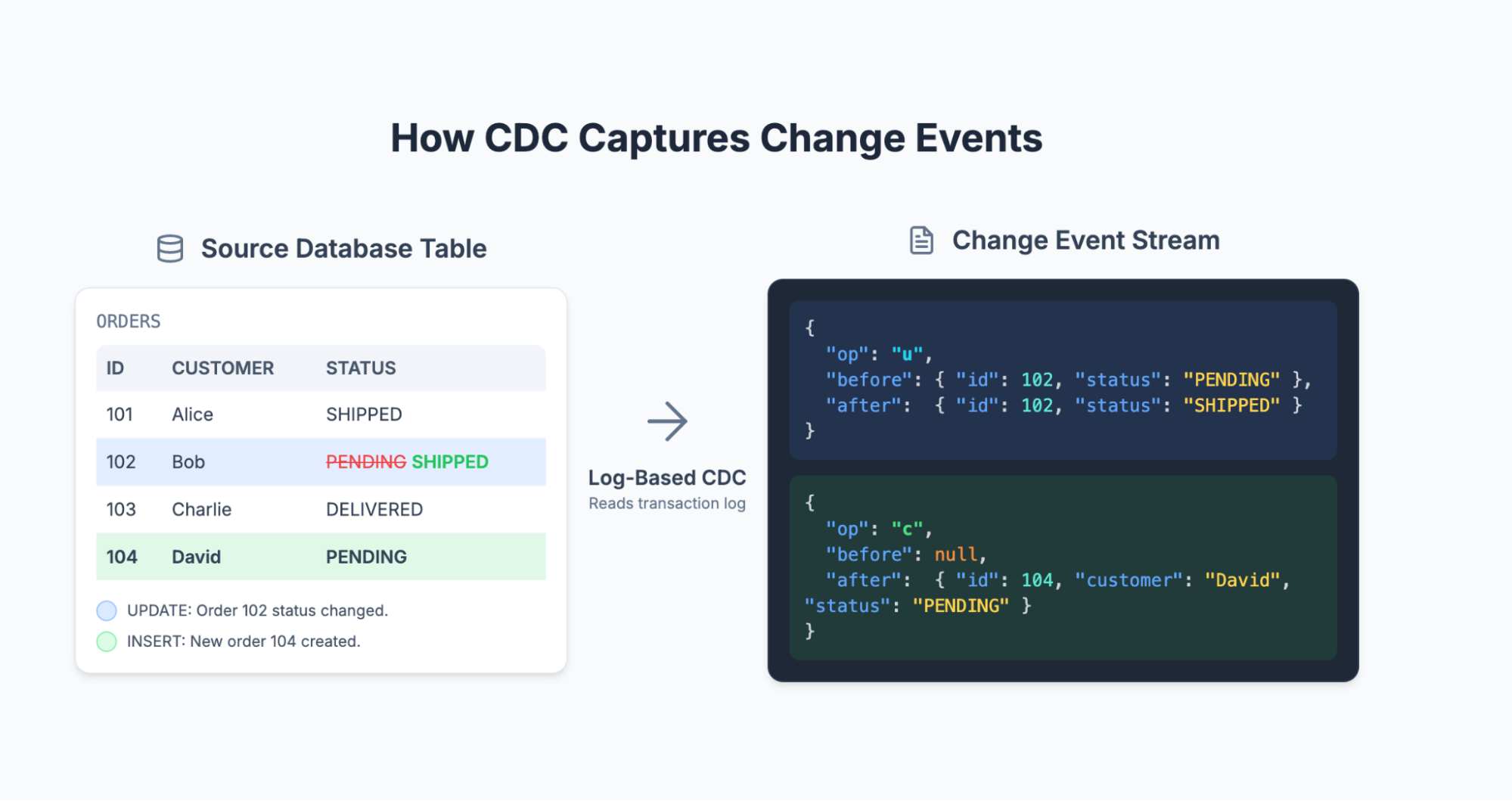

At its core, CDC works by continuously monitoring a source database for changes, capturing those changes in real time, and delivering them to downstream systems or platforms. While the concept is straightforward, the implementation can be technically complex depending on the method used.

How Data Changes Are Identified and Captured

Databases track every insert, update, and delete operation. CDC techniques leverage these internal tracking mechanisms to detect events as they occur. The three primary methods for detection include:

- Querying tables for timestamp changes.

- Using database triggers that fire on specific actions.

- Reading directly from the transaction log.

Each mechanism comes with distinct trade-offs regarding latency and system load. We will explore these in detail in the next section.

What Happens After Change Capture?

Capturing the change is only the first step. Once an event is identified, it flows from the source to a target system. Common real-time destinations include cloud data warehouses like Snowflake and BigQuery, event streaming platforms like Kafka, or data lakehouses.

In modern CDC architectures, changes are not simply moved from point A to point B. They are processed in-stream. This allows teams to filter out irrelevant data, enrich events with context, or transform structures before delivery. This ensures that the destination receives high-quality, consumption-ready data rather than a raw dump of database logs.

Types of CDC Methods

Change Data Capture is a broad approach with multiple implementation strategies. The method you choose dictates the performance impact on your source systems and the operational complexity of your pipeline.

Query-Based CDC

Also known as polling, this method involves repeatedly querying a source table to identify rows with a new timestamp or incremented ID.

While simple to set up, query-based CDC is inefficient. It places a constant, repetitive load on the source database. Critically, it usually fails to capture DELETE operations because the row is no longer present to be queried. This method is rarely suitable for mission-critical or high-volume environments.

Trigger-Based CDC

This method relies on database triggers to capture changes. For each table, specific triggers for INSERT, UPDATE, and DELETE operations write change events to a separate “shadow” or history table.

The primary drawback is performance overhead. Triggers execute with every transaction, adding computational load directly to the database and slowing down the application. Furthermore, managing triggers creates tight coupling between your application and your data infrastructure, making maintenance difficult as schemas evolve.

Log-Based CDC

Log-based CDC is the standard for enterprise data integration. This technique reads changes directly from the database’s native transaction log, such as the redo log in Oracle or the transaction log in SQL Server.

Because the database already writes to this log for recovery purposes, log-based CDC is non-intrusive. It “tails” the file without executing queries against production tables. This results in minimal impact on the source system while providing a complete, ordered record of all changes. This scalability and reliability are why platforms like Striim are built on a streaming-native, log-based CDC architecture.

Key Use Cases for Change Data Capture

CDC in the right circumstances can serve as the engine behind your most critical workloads. From real-time analytics and intelligent apps to seamless cloud modernization, CDC puts your data to work.

Real-Time Analytics and Reporting

Stale data leads to reactive management. CDC feeds dashboards and KPIs with the most up-to-date information available. This allows business users to monitor operations and spot trends as they happen. Striim supports this by ensuring low-latency delivery to high-performance analytics platforms like Snowflake and BigQuery.

Cloud Migration and Hybrid Sync

CDC is crucial for zero-downtime cloud migrations. It allows you to replicate data from on-premises legacy systems to cloud targets without interrupting live operations. Even after migration, many enterprises maintain a hybrid footprint. Striim’s hybrid-ready architecture supports ongoing synchronization between on-prem databases and cloud environments.

Data Lakehouse Hydration

Modern lakehouses require fresh data to be effective. CDC is the standard method to “hydrate” platforms like Databricks and Snowflake with operational data. This approach decouples storage from compute, lowers costs, and improves scalability. It ensures data scientists work with current transactional data rather than aged extracts.

Operational Intelligence

Low-latency data enables intelligent applications to trigger automated actions. For example, streaming transaction data can feed an AI model for real-time fraud detection. Striim powers these use cases by processing data in-flight, moving beyond simple BI reporting to drive automated, intelligent business outcomes.

Benefits of Using CDC

CDC’s potential is about more than a better way to move data. Fresher, faster, more reliable data offers a paradigm shift in how data can unlock critical use cases and strategic advantages.

Timeliness: Fresh Data, Faster

CDC removes the delay between a business event and the ability to act on it. In industries like retail and finance, this speed is a competitive advantage. It allows businesses to shift from reactive analysis of yesterday’s problems to proactive management of today’s opportunities.

Efficiency: Reduced Load on Source Systems

Extracting large datasets in bulk cripples source systems. Log-based CDC is lighter. By reading the transaction logs, it avoids heavy queries on production tables. This ensures your operational applications remain performant even during peak data replication periods.

Reliability: Reduced Risk of Data Loss

CDC ensures consistency between source and target systems. Because it captures every transaction from the log, it offers a complete audit trail of data changes. Advanced CDC platforms include exactly-once delivery guarantees and automated error handling to prevent data mismatch or loss during transmission.

Scalability: Built for Modern Architectures

Modern data environments are distributed and high-volume. CDC is designed to handle this scale. It streams data continuously rather than in massive spikes, allowing downstream systems to ingest changes at a steady, manageable rate. This architecture supports high-throughput environments without requiring massive windows of downtime for batch processing.

Best Practices for CDC Success

Implementing CDC requires a strategy to handle challenges like schema drift, pipeline observability, and data quality. Teams often struggle when they treat real-time pipelines like batch jobs. To build for scale, focus on these core practices.

Design for Change

Source schemas will change. Columns will be added, and data types will be modified. If your pipeline is brittle, these changes will break it. Use a CDC solution that integrates with a schema registry or has built-in drift detection. Striim, for example, can automatically propagate DDL changes to the target or alert administrators before a pipeline fails.

Build for Scale

Avoid point-to-point connections that create a spaghetti architecture. Instead, adopt event-driven patterns. Use message queues like Kafka as a buffer or leverage a platform that can fan out data streams to multiple targets simultaneously. Striim’s “stream anywhere” architecture supports this, allowing you to feed a data lake, a warehouse, and an operational app from a single change stream.

Embrace the “Read Once, Stream Anywhere” Pattern

Reading from the source database is the most expensive part of the process. Do it once. Capture the change stream a single time and then route that data to multiple destinations. This reduces the load on your source systems, minimizes failure points, and maximizes the reuse of your data assets.

Monitor and Validate Continuously

Observability is non-negotiable for real-time pipelines. Implement automated validation to compare source and target row counts or checksums. Ensure you have dashboards that track latency and throughput. Striim provides built-in monitoring and alerting to identify anomalies before they impact the business.

Ensure Governance and Data Quality

Real-time data must be secure. Ensure your CDC pipeline supports encryption in transit and at rest. Implement role-based access control (RBAC) to govern who can view or modify the data streams. Tie your CDC strategy to your enterprise governance policies to ensure compliance with regulations like GDPR and HIPAA.

Choosing the Right CDC Tool

The market is crowded with CDC options. They generally fall into four categories: open-source frameworks, ELT tools with CDC add-ons, cloud-native migration tools, and unified streaming platforms like Striim. When evaluating tools, look beyond the marketing. Focus on latency, breadth of supported sources and targets, schema drift handling, and total cost of ownership.

Striim vs. Debezium

Debezium is a popular open-source framework for CDC. It is developer-centric and requires significant engineering effort to configure, scale, and manage. It lacks a native UI and built-in support. Striim is an enterprise-grade platform that offers the same log-based capture but wraps it in a unified interface with management, monitoring, and support included.

Striim vs. Fivetran

Fivetran is excellent for automated, batch-style ELT pipelines into data warehouses. However, its architecture is not designed for true real-time streaming or complex in-flight processing. Striim is built for sub-second latency and high-volume streaming, making it the better choice for operational intelligence and mission-critical workloads.

Striim vs. AWS DMS

AWS Database Migration Service (DMS) is a utility designed primarily to move data into AWS. While useful for simple migrations, it often lacks deep observability and transformation capabilities. Striim offers a neutral, multi-cloud approach with full pipeline visibility and advanced data processing, regardless of where your data lives.

Why Leading Enterprises Choose Striim for Change Data Capture

Striim is the unified real-time data integration platform for the modern enterprise. It goes beyond basic change capture by combining log-based CDC, in-stream processing, and delivery to any target in a single solution.

Designed for hybrid and multi-cloud environments, Striim handles the complexity of legacy on-prem systems and modern cloud platforms with equal ease. With built-in validation, high availability, and end-to-end governance, Striim ensures your data is not just fast, but trusted.

Ready to modernize your data architecture? Book a demo or get started for free today.