If your dashboards are broken, your analytics keep timing out, and your data engineers wind up spending more time fixing broken data pipelines than building new features, you’re not alone.

You’re likely dealing with more data, more sources, and more real-time business demands than ever before. In the face of that demand, getting pipeline architecture right has become urgent.

Optimal pipeline architecture can unlock a data team’s ability to detect anomalies, deliver excellent customer experiences, and optimize operations in the moment. It relies on a continuous, real-time flow of reliable data. On the flip side, slow, unreliable, or costly data pipelines are no longer just technical challenges for data engineers. They directly translate to missed business opportunities and increased risk.

This guide demystifies modern data pipeline architecture. We’ll break down the core components, explore common architectural patterns, and walk through the use cases that demand a new approach.

By the end, you’ll have a clear framework for designing and building the resilient, scalable, and cost-efficient data pipelines your business needs to thrive—and understand how modern tools like Striim are purpose-built to simplify and accelerate the entire process.

What is Data Pipeline Architecture?

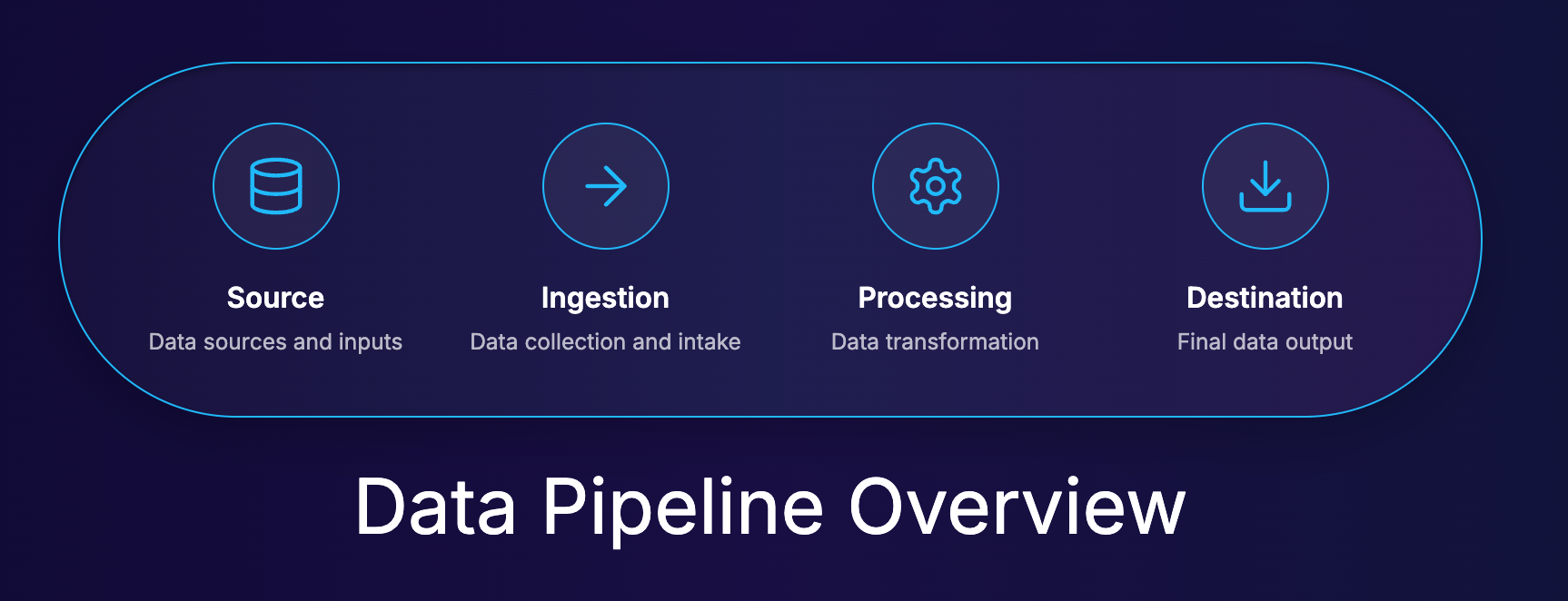

At its core, data pipeline architecture refers to the design and structure of how data is collected, moved, transformed, and delivered from various sources to a final destination. Think of it as the digital circulatory system for your organization’s data.

But a modern pipeline is much more than a simple conveyor belt for data. It’s about moving data with speed, reliability, and intelligence. The critical distinction today is the evolution from slow, periodic batch processing (think nightly ETL jobs) to dynamic, streaming architectures that handle data in near real time. This shift is fundamental. Where batch ETL answers the question, “What happened yesterday?”, streaming pipelines answer, “What is happening right now?” This enables businesses to be proactive rather than reactive, which is a key competitive advantage and a necessity in the age of AI.

This evolution sets the stage for a deeper conversation about the building blocks and patterns that define a robust, future-proof data pipeline architecture.

Core Components of a Data Pipeline Architecture

Every data pipeline—whether batch, stream processing, or hybrid—is constructed from the same set of fundamental building blocks. Understanding these components is the first step toward designing, troubleshooting, and scaling your infrastructure for effective data management.

Data Ingestion

This is the starting point where the pipeline collects raw data from its data sources. These sources can be incredibly diverse, ranging from structured databases (like Oracle or PostgreSQL), SaaS applications (like Salesforce), and event streams (like the open source Apache Kafka) to IoT sensors and log files. The key challenge is to capture data reliably and efficiently, often in real time and without impacting the performance of the source systems.

Processing/Transformation

Once ingested, raw data is rarely in the perfect format for downstream analysis or applications. The processing workflow is where data is cleaned, normalized, enriched, aggregated, and transformed for its intended use. Data transformation could involve filtering out irrelevant fields, joining data from multiple sources, converting data types, or running complex business logic. In modern streaming pipelines, this transformation happens in-flight to ensure a continuous flow of data.
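To make in-flight transformation concrete, here is a minimal sketch in Python. It assumes a hypothetical feed of order events (the field names `order_id`, `amount`, and `country` are illustrative, not taken from any specific system) and uses a generator so each record is cleaned and passed along as it arrives rather than waiting for a full batch.

```python
from typing import Iterable, Iterator

def transform(events: Iterable[dict]) -> Iterator[dict]:
    """Clean, filter, and normalize records one at a time as they flow through."""
    for event in events:
        # Filter: drop records that are missing a required field.
        if event.get("amount") is None:
            continue
        yield {
            "order_id": event["order_id"],
            # Convert types: amounts arrive as strings in this hypothetical feed.
            "amount": float(event["amount"]),
            # Normalize: consistent casing makes downstream joins reliable.
            "country": event.get("country", "").strip().upper(),
        }

raw = [
    {"order_id": 1, "amount": "19.99", "country": " us ", "debug": "x"},
    {"order_id": 2, "amount": None, "country": "de"},
]
cleaned = list(transform(raw))
```

Because `transform` is a generator, the same function works whether `events` is a small list or an unbounded stream; irrelevant fields such as `debug` are simply never copied to the output.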

Data Storage/Destinations

After processing, the data is delivered to its destination. This could be a cloud data warehouse like Snowflake or BigQuery for analytics, a data lakehouse platform like Databricks for AI or machine learning modeling, a relational database for operational use, or another messaging system for further downstream processing. The choice of destination depends entirely on the use case and the types of data involved.

Orchestration and Monitoring

A pipeline isn’t a “set it and forget it” system. Orchestration is the management layer that schedules, coordinates, and manages the data flows. It ensures that tasks run in the correct order and handles dependencies and error recovery. Monitoring provides visibility into the pipeline’s health, tracking metrics like data volume, latency, and error rates to ensure the system is performing as expected.
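As a rough illustration of what an orchestration layer does, the sketch below runs tasks in dependency order, retries transient failures, and records two basic health metrics. The task names, the retry policy, and the `run_pipeline` helper are all assumptions made for this example; production orchestrators add scheduling, persistence, and alerting on top of the same idea.

```python
from graphlib import TopologicalSorter

def run_pipeline(tasks, dependencies, max_retries=2):
    """Run tasks in dependency order, retrying transient failures."""
    order = list(TopologicalSorter(dependencies).static_order())
    metrics = {"succeeded": 0, "retries": 0}
    for name in order:
        for attempt in range(max_retries + 1):
            try:
                tasks[name]()
                metrics["succeeded"] += 1
                break
            except RuntimeError:
                if attempt == max_retries:
                    raise  # out of retries: surface the failure
                metrics["retries"] += 1
    return order, metrics

# Hypothetical task graph: each task maps to the set of tasks it depends on.
dependencies = {
    "ingest": set(),
    "transform": {"ingest"},
    "load": {"transform"},
    "report": {"load"},
}

calls = {"transform": 0}

def flaky_transform():
    # Fails once, then succeeds, to exercise the retry path.
    calls["transform"] += 1
    if calls["transform"] == 1:
        raise RuntimeError("transient failure")

tasks = {
    "ingest": lambda: None,
    "transform": flaky_transform,
    "load": lambda: None,
    "report": lambda: None,
}
order, metrics = run_pipeline(tasks, dependencies)
```

Here the retry count doubles as a monitoring signal: a steadily rising number of retries is often the first visible symptom of an unhealthy source or destination.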

Data Governance & Security

This component encompasses the policies and procedures that ensure data is handled securely, accurately, and in compliance with regulations like GDPR or CCPA. It involves managing access controls, masking and encrypting data in transit and at rest, tracking data lineage, and ensuring strong data quality. In modern data architecture, these rules are embedded directly into the pipeline itself.
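One way to picture governance rules embedded in the pipeline is a masking step that runs on every record before delivery. The sketch below is an assumption-laden illustration: the `SENSITIVE_FIELDS` policy and the field names are hypothetical, and an unsalted hash is shown only for simplicity. A real deployment would more likely use keyed hashing or tokenization.

```python
import hashlib

SENSITIVE_FIELDS = {"email", "ssn"}  # hypothetical masking policy

def mask_record(record: dict) -> dict:
    """Return a copy of the record with sensitive fields replaced by a short digest."""
    masked = {}
    for key, value in record.items():
        if key in SENSITIVE_FIELDS:
            digest = hashlib.sha256(str(value).encode("utf-8")).hexdigest()
            masked[key] = digest[:12]  # deterministic, so joins on the field still work
        else:
            masked[key] = value
    return masked

customer = {"id": 7, "name": "Ada", "email": "ada@example.com"}
safe = mask_record(customer)
```

Because the digest is deterministic, two records with the same email still match downstream, while the raw value never leaves the pipeline.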

Common Data Pipeline Architecture Patterns

While the components are the building blocks, architectural patterns are the blueprints. Choosing the right pattern is critical and depends entirely on your specific requirements for latency, scalability, data volume, complexity, and cost. Here are some of the most common pipeline blueprints used today.

Lambda Architecture

A popular but complex pattern, Lambda architecture attempts to provide a balance between real-time speed and batch-processing reliability. It does this by running parallel data flows: a “hot path” (speed layer) for real-time streaming data and a “cold path” (batch layer) for comprehensive, historical batch processing. The results are then merged in a serving layer.

- Best for: Use cases that need both low-latency, real-time views and highly accurate, comprehensive historical reporting.

- Challenge: It introduces significant complexity, requiring teams to maintain two separate codebases and processing systems, which can be costly and difficult to manage.
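The three Lambda layers can be sketched in a few lines. This toy example counts page views; the function names and event shape are assumptions for illustration. Note that the counting logic appears twice, once per path, which is exactly the duplicated-codebase cost described above.

```python
from collections import Counter

def batch_view(historical_events):
    """Cold path: recompute totals from the complete historical dataset."""
    return Counter(e["page"] for e in historical_events)

def speed_view(recent_events):
    """Hot path: incrementally count events that arrived since the last batch run."""
    view = Counter()
    for e in recent_events:
        view[e["page"]] += 1
    return view

def serve(batch, speed):
    """Serving layer: merge both views to answer a query."""
    return batch + speed

historical = [{"page": "home"}, {"page": "home"}, {"page": "pricing"}]
recent = [{"page": "home"}, {"page": "docs"}]
merged = serve(batch_view(historical), speed_view(recent))
```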

Kappa Architecture

Kappa architecture emerged as a simpler alternative to Lambda. It eliminates the batch layer entirely and handles all processing—both real-time and historical—through a single streaming pipeline. Historical analysis is achieved by reprocessing the stream from the beginning.

- Best for: Scenarios where most data processing can be handled in real time and the logic doesn’t require a separate batch system. It’s ideal for event-driven systems.

- Challenge: Reprocessing large historical datasets can be computationally expensive and slow, making it less suitable for use cases requiring frequent, large-scale historical analysis.
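A small sketch shows the Kappa idea: one processing function serves both live updates and historical rebuilds, with the rebuild done by replaying the log from offset zero. The in-memory list standing in for a durable event log and the `process` helper are assumptions for this example.

```python
def process(log, offset=0, state=None):
    """Single code path: fold events from `offset` onward into the running state."""
    state = dict(state or {})
    for event in log[offset:]:
        account = event["account"]
        state[account] = state.get(account, 0) + event["delta"]
    return state, len(log)

log = [
    {"account": "a", "delta": 100},
    {"account": "b", "delta": 50},
    {"account": "a", "delta": -30},
]

# Live processing: consume what is there, then pick up new events from the saved offset.
live_state, offset = process(log)
log.append({"account": "b", "delta": 25})
live_state, offset = process(log, offset, live_state)

# Historical rebuild: replay the whole log through the same function.
rebuilt, _ = process(log)
```

The rebuild and the live state agree because they share one implementation. The cost is that a rebuild touches every event ever written, which is the reprocessing expense noted in the challenge above.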

Event-Driven Architectures

This pattern decouples data producers from data consumers using an event-based model. Systems communicate by producing and consuming events (e.g., “customer_created,” “order_placed”) via a central messaging platform like Kafka. Each microservice can process these events independently, creating a highly scalable and resilient system.

- Best for: Complex, distributed systems where agility and scalability are paramount. It’s the foundation for many modern cloud-native applications.

- Challenge: Can lead to complex data consistency and management challenges across dozens or even hundreds of independent services.
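The decoupling at the heart of this pattern can be sketched with a tiny in-process event bus. This is a simplified stand-in, not how a broker like Kafka works internally: a real platform is durable and asynchronous and lets consumers read at their own pace. The `EventBus` class and the handlers are hypothetical.

```python
from collections import defaultdict

class EventBus:
    """Minimal publish/subscribe hub: producers never reference consumers directly."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._subscribers[event_type].append(handler)

    def publish(self, event_type, payload):
        # Deliver to every handler registered for this event type.
        for handler in self._subscribers.get(event_type, []):
            handler(payload)

bus = EventBus()
emails_sent = []
inventory = {"sku-1": 5}

def send_confirmation(order):
    emails_sent.append(order["customer"])

def reserve_stock(order):
    inventory[order["sku"]] -= order["qty"]

# Two independent services react to the same event.
bus.subscribe("order_placed", send_confirmation)
bus.subscribe("order_placed", reserve_stock)
bus.publish("order_placed", {"customer": "ada", "sku": "sku-1", "qty": 2})
```

Adding a third consumer, say for analytics, requires no change to the producer, which is where the agility of the pattern comes from.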

Hybrid and CDC-First Architectures

This pragmatic approach acknowledges that most enterprises live in a hybrid world, with data in both legacy on-premises systems and modern cloud platforms. A Change Data Capture (CDC)-first architecture focuses on efficiently capturing granular changes (inserts, updates, deletes) from source databases in real time. This data can then feed both streaming analytics applications and batch-based data warehouses simultaneously.

- Best for: Organizations modernizing their infrastructure, migrating to the cloud, or needing to sync data between operational and analytical systems with minimal latency and no downtime.

- Challenge: Requires specialized tools that can handle low-impact CDC from a wide variety of database sources.
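To show what consuming a change stream looks like, the sketch below applies inserts, updates, and deletes to an in-memory replica. The change-event shape (`op`, `key`, `row`) is an assumption for this example; actual CDC tools each define their own event format.

```python
def apply_change(replica: dict, change: dict) -> None:
    """Apply one captured change to a replica keyed by primary key."""
    op, key = change["op"], change["key"]
    if op in ("insert", "update"):
        # Merge so that partial updates keep the columns they did not touch.
        replica[key] = {**replica.get(key, {}), **change["row"]}
    elif op == "delete":
        replica.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op}")

changes = [
    {"op": "insert", "key": 1, "row": {"name": "Ada", "tier": "free"}},
    {"op": "update", "key": 1, "row": {"tier": "pro"}},
    {"op": "insert", "key": 2, "row": {"name": "Lin", "tier": "free"}},
    {"op": "delete", "key": 2},
]

replica = {}
for change in changes:
    apply_change(replica, change)
```

Applying changes in the order they were committed is what keeps the replica consistent with the source, so ordering guarantees are worth checking in whatever tool captures the changes.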

Use Cases that Demand a Modern Data Pipeline Architecture

Architectural theory is important, but its true value is proven in real-world application. A modern data pipeline isn’t a technical nice-to-have; it’s a strategic enabler. Here are six use cases where a low-latency, streaming architecture proves essential.

Real-Time Fraud Detection

When it comes to detecting and preventing fraud, every second counts. Batch-based systems that analyze transactions hours after they occur are often too slow to prevent losses. A modern, streaming pipeline architecture with Change Data Capture (CDC) is ideal, allowing organizations to intercept and analyze transaction data the moment it’s created.

- With Striim: Businesses can achieve sub-second latency, enabling continuous fraud monitoring models to block suspicious activity before it impacts customers or revenue.
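As a simplified picture of analyzing transactions the moment they arrive, here is a sketch of a velocity rule that flags a card when too many transactions land inside a sliding time window. The thresholds and the `VelocityRule` class are hypothetical, and this is a generic illustration rather than a description of any product's fraud models.

```python
from collections import defaultdict, deque

class VelocityRule:
    """Flag a card that exceeds `max_txns` transactions within `window_seconds`."""

    def __init__(self, max_txns=3, window_seconds=60):
        self.max_txns = max_txns
        self.window_seconds = window_seconds
        self._recent = defaultdict(deque)  # card_id -> timestamps inside the window

    def is_suspicious(self, card_id, timestamp):
        window = self._recent[card_id]
        window.append(timestamp)
        # Evict timestamps that have fallen out of the sliding window.
        while window and timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) > self.max_txns

rule = VelocityRule(max_txns=3, window_seconds=60)
results = [rule.is_suspicious("card-1", t) for t in (0, 10, 20, 30, 200)]
```

The fourth transaction in 30 seconds trips the rule, while the one at 200 seconds does not, because the earlier activity has aged out of the window. A batch job would only surface the same pattern hours later.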

Cloud Migration and Modernization

Migrating from legacy, on-premises databases to the cloud is a high-stakes project. A CDC-first pipeline architecture allows for zero-downtime migrations by keeping on-prem and cloud systems continuously in sync during the transition. This phased approach de-risks the process and ensures business continuity.

- With Striim: Companies can seamlessly replicate data from legacy systems to cloud targets, supporting phased migrations across complex hybrid environments without interrupting critical operations.

Personalized Customer Experiences

Today’s consumers expect experiences that not only respond to their behaviors, but predict them. Whether it’s an e-commerce site offering a relevant promotion or a media app suggesting the next video, personalization and predictive experiences demand fresh data. Real-time pipelines deliver a continuous stream of user interaction data to marketing and analytics platforms, powering in-the-moment decisions.

- With Striim: Organizations can rapidly deliver enriched customer data to platforms like Snowflake, Databricks, or Kafka, enabling dynamic user segmentation and immediate, personalized engagement.

Edge-to-Cloud IoT Analytics

From factory floors to smart grids, IoT devices generate a relentless stream of data. A scalable, noise-tolerant pipeline is essential for ingesting this high-frequency data, filtering it at the source (the “edge”), and delivering only the most valuable information to cloud analytics platforms.

- With Striim: Teams can deploy lightweight edge processing to filter and aggregate IoT data locally, reducing network traffic and ensuring that cloud destinations receive clean, relevant data for real-time monitoring and analysis.
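Edge filtering and aggregation can be sketched in a few lines. The example below assumes temperature readings with a plausible sensor range of -40 to 125 degrees; the range, batch size, and `summarize_at_edge` helper are illustrative choices, not values from any particular device.

```python
from statistics import mean

def summarize_at_edge(readings, low=-40.0, high=125.0, batch_size=3):
    """Drop out-of-range noise, then ship one compact summary per batch."""
    valid = [r for r in readings if low <= r <= high]
    summaries = []
    for start in range(0, len(valid), batch_size):
        chunk = valid[start:start + batch_size]
        summaries.append({
            "count": len(chunk),
            "avg": round(mean(chunk), 2),
            "max": max(chunk),
        })
    return summaries

readings = [20.0, 21.0, 999.0, 22.0, 23.0, -273.0, 24.0]
summaries = summarize_at_edge(readings)
```

Seven raw readings become two summary records, and the two impossible values never leave the device, which is the network saving the edge approach is after.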

Operational Dashboards and Alerts

Business leaders and operations teams can’t afford to make decisions based on stale data. When dashboards lag by hours or even just minutes, those insights are already history. Streaming pipelines reduce this data lag from hours to seconds, ensuring that operational dashboards, KPI reports, and automated alerts reflect the true, current state of the business.

- With Striim: By delivering data with sub-second latency, Striim ensures that operational intelligence platforms are always up-to-date, closing the gap between event and insight.

AI-Powered Automation and Generative AI

Whether you’re building a predictive model to forecast inventory or an AI application to power a customer service chatbot, the quality and timeliness of your data are paramount. For LLMs, architectures like Retrieval-Augmented Generation (RAG) depend on feeding the model real-time, contextual data from your enterprise systems. A streaming data pipeline keeps that context current, so the AI is far less likely to give stale or irrelevant answers.

- With Striim: You can feed your AI models and vector databases with a continuous stream of fresh, transformed data from across your business in real time, ensuring your AI applications are always operating with the most accurate and up-to-date context.

Best Practices for Building a Future-Proof Data Pipeline Architecture

Building a robust data pipeline requires a forward-looking mindset. You’re not just building for today; you’re building for months or years from now, when your use cases, data volumes, and the decisions that rely on your pipelines will have evolved. Adopting the following best practices will help you avoid endless rebuild projects and design a smarter, more sustainable data architecture.

Align Architecture to Business SLAs and Latency Goals

Never build in a vacuum. The most important question to ask is: “What business outcome does this pipeline drive, and what are its latency requirements?” The answer will determine your architecture. A pipeline for real-time fraud detection has sub-second requirements, while one for weekly reporting does not. Aligning your technical design with stakeholders and business Service Level Agreements (SLAs) ensures you don’t over-engineer a solution or, worse, under-deliver on critical needs.
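A latency SLA only helps if you measure against it. The sketch below computes a nearest-rank percentile over observed end-to-end latencies and compares it with a target. The sample numbers, the 95th-percentile choice, and the `sla_report` helper are assumptions for illustration.

```python
import math

def sla_report(latencies_ms, sla_ms, percentile=0.95):
    """Compare a nearest-rank latency percentile against the agreed SLA."""
    ordered = sorted(latencies_ms)
    rank = max(1, math.ceil(percentile * len(ordered)))
    observed = ordered[rank - 1]
    return {"observed_ms": observed, "meets_sla": observed <= sla_ms}

latencies = [120, 80, 95, 300, 110, 90, 105, 85, 100, 1500]

fraud_check = sla_report(latencies, sla_ms=1_000)          # sub-second target
weekly_report = sla_report(latencies, sla_ms=3_600_000)    # one-hour target
```

The same measurements fail the sub-second target and comfortably pass the hourly one, which is why the SLA, not the technology, should drive how much streaming machinery you build.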

Embrace Schema Evolution and Change Data

Change is the only constant. Source systems will be updated, fields will be added, and data types will be altered. A future-proof architecture anticipates this. Use tools and patterns (like CDC) that are built to handle constant change and to propagate schema changes downstream without breaking the pipeline. This builds resilience, enhances data integration, and dramatically reduces long-term maintenance overhead.
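One simple way to tolerate additive schema changes is to treat unknown fields as new columns instead of errors. The sketch below illustrates the idea with hypothetical field names and an `evolve` helper; it covers added fields only, since type changes and renames need more deliberate handling.

```python
def evolve(record: dict, known_columns: list) -> tuple:
    """Project a record onto the known schema, widening the schema for new fields."""
    new_columns = [key for key in record if key not in known_columns]
    known_columns.extend(new_columns)  # propagate the change instead of failing
    row = {column: record.get(column) for column in known_columns}
    return row, new_columns

schema = ["id", "name"]
row1, added1 = evolve({"id": 1, "name": "Ada"}, schema)
row2, added2 = evolve({"id": 2, "name": "Lin", "loyalty_tier": "gold"}, schema)
row3, added3 = evolve({"id": 3}, schema)
```

The second record widens the schema, and the third, which arrives from a producer that has not been updated, is padded with `None` rather than rejected. Returning the list of added columns gives the pipeline a hook to alter the destination table or notify an owner.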

Reduce Tool Sprawl by Consolidating the Stack

Many organizations suffer from “tool sprawl”—a complex, brittle collection of disparate point solutions for ingestion, transformation, and delivery. This increases cost, complexity, and points of failure. Seek to consolidate your stack with a unified platform that can handle multiple functions within a single, coherent framework. This simplifies development, monitoring, and data governance.

Prioritize Observability, Data Governance, and Security

Observability, governance, and security are not afterthoughts; they should be core design principles. Build pipelines with observability in mind from day one, ensuring you have clear visibility into data lineage, performance metrics, and error logs. Embed security and governance rules directly into your data flows to ensure compliance and protect sensitive data without creating bottlenecks.

Avoid Overengineering and Focus on Use Case Fit

It can be tempting to build complex, all-encompassing data architecture from the start. A more effective approach is to start with the specific use case and choose the simplest architecture that meets its needs. A Kappa architecture might be perfect for one project, while a simple batch ETL process is sufficient for another. Focus on delivering value quickly and let the architecture evolve as business requirements grow.

Power Your Data Pipeline Architecture with Striim

Designing a modern data pipeline requires the right strategy, the right patterns, and the right platform. Striim is purpose-built to solve the challenges of real-time data, providing a unified, scalable platform that simplifies the entire data pipeline lifecycle. By consolidating the stack, Striim helps you reduce complexity, lower costs, and accelerate time to insight.

With Striim, you can:

- Ingest data in real time from dozens of sources, including databases via low-impact CDC, cloud applications, and streaming platforms.

- Process and transform data in-flight using a familiar SQL-based language to clean, enrich, and reshape data as it moves.

- Deliver data with sub-second latency to leading cloud data warehouses, data lakes, and messaging systems.

- Build resilient, scalable pipelines on an enterprise-grade platform designed for mission-critical workloads.

Ready to stop fixing broken pipelines and start building for the future? Book a demo with our team or start your free trial today.