Last week, we hosted a live webinar, Cloud Adoption: How Streaming Integration Minimizes Risks. In just 35 minutes, we discussed how to eliminate database downtime and minimize other risks of cloud migration and ongoing integration for hybrid cloud architecture. The session also included a live demo of Striim’s solution.

Our first speaker, Steve Wilkes, opened the presentation by discussing the importance of cloud adoption for today’s pandemic-impacted, fragile business environment. He continued with the common risks of cloud data migration and how streaming data integration with low-impact change data capture minimizes both downtime and risks. Our second presenter, Edward Bell, gave us a live demonstration of Striim for zero downtime data migration. In this blog post, you can find my short recap of the key areas of the presentation. This summary certainly cannot do justice to the comprehensive discussion we had at the webinar. That’s why I highly recommend you watch the full webinar on-demand to access details on the solution architecture, its comparison to the batch ETL approach, customer examples, the live demonstration, and the interactive Q&A session.

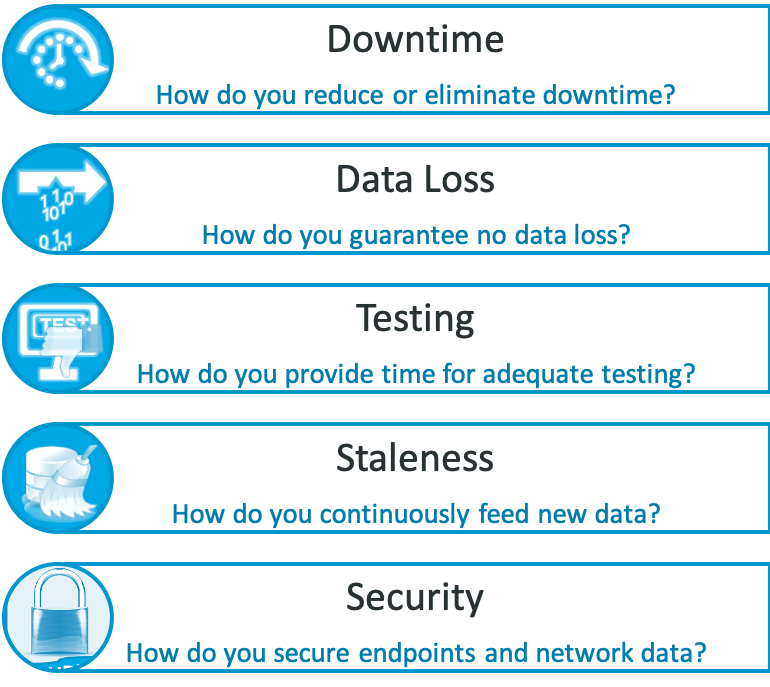

Cloud adoption brings multiple challenges and risks that prevent many businesses from modernizing their business-critical systems.

Limited cloud adoption and modernization reduce the ability to optimize business operations. The challenges and risks include downtime and business disruption, as well as data loss during the migration, neither of which is acceptable for critical business systems. The risk list, however, is longer than these two. Failures caused by switching over to the cloud without adequate testing, working with stale data in the cloud, and data security and privacy are also among the key concerns.

Steve emphasized that “rushing the testing of the new environment to reduce the downtime, if you cannot continually feed data, can also lead to failures down the line or problems with the application.” Later, he added, “Beyond the migration, how do you continually feed the system? Especially in integration use cases where you are maintaining the data where it was and also delivering somewhere else, you need to continuously refresh the data to prevent staleness.”

Each of the risks mentioned above is preventable with the right approach to data movement between the legacy and new cloud systems.

Streaming data integration plays a critical role in successful cloud adoption with minimized risks.

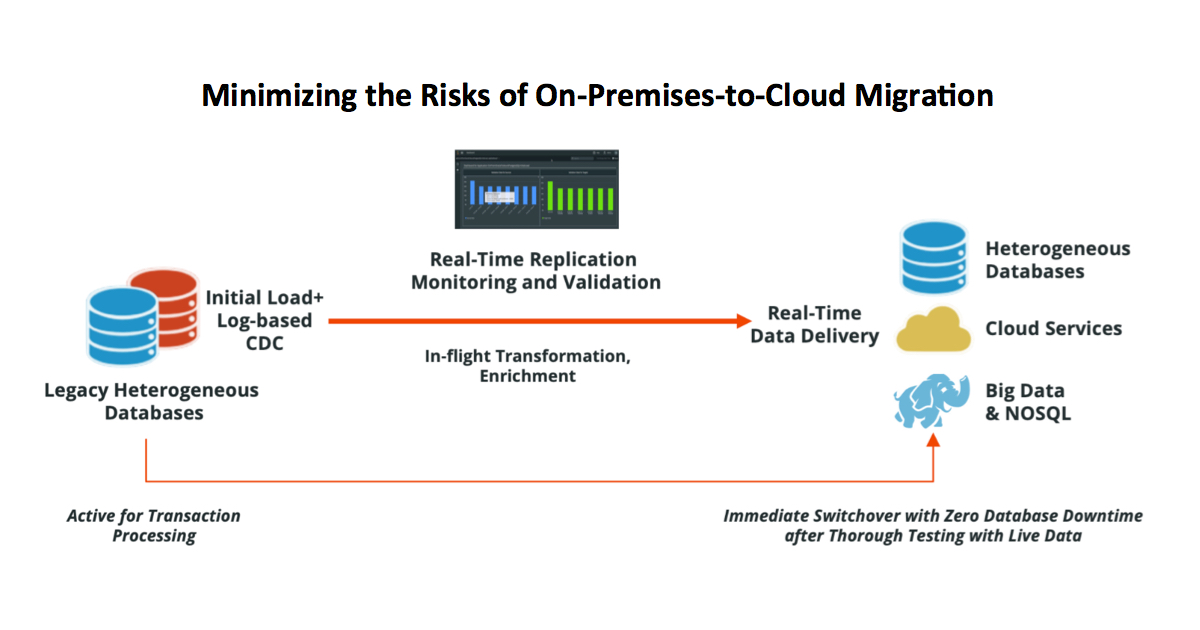

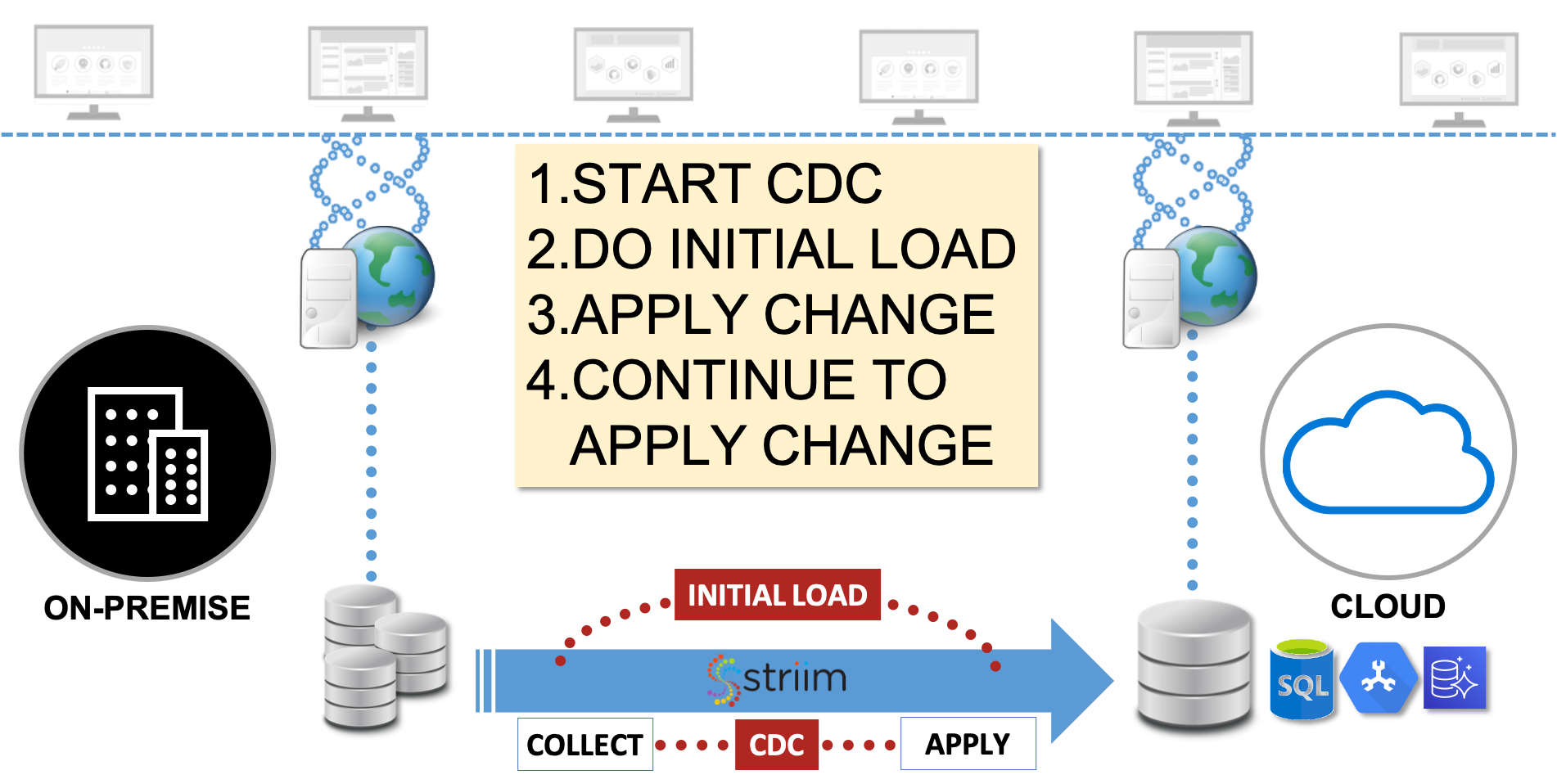

A reliable, secure, and scalable streaming data integration architecture with low-impact change data capture enables zero database downtime and zero data loss during data migration. Because the source system is not interrupted, you can test the new cloud system for as long as you need before the switchover. You also have the option to fail back to the legacy system after switchover by reversing the data flow and keeping the old system up to date with the cloud system until you are fully confident that the new environment is stable.

Striim’s cloud data migration solution uses this modern approach. During the bulk load, Striim’s change data capture (CDC) component collects the source database changes in real time. As soon as the initial load is complete, Striim applies the changes to the target environment to keep the legacy and cloud databases consistent. With built-in exactly-once processing (E1P), Striim can avoid both data loss and duplicates. You can use Striim’s real-time dashboards to monitor the data flow and various detailed performance metrics.
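To make the sequence above concrete, here is a minimal Python sketch of the general pattern: an initial load followed by a catch-up phase that applies the changes captured in the meantime. This is not Striim’s API or implementation. The `Change` record, the `position` field, and the `apply_changes` helper are all hypothetical names chosen for illustration, and the in-memory dictionaries stand in for real source and target databases.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Change:
    """One captured source change (hypothetical record layout)."""
    position: int               # increasing position in the change log (assumed)
    op: str                     # "insert", "update", or "delete"
    key: int
    row: Optional[dict] = None  # new row contents; None for deletes


def apply_changes(target: dict, changes: list, last_applied: int) -> int:
    """Apply changes in log order and return the new checkpoint.

    Changes at or below the checkpoint are skipped, so replaying the
    same batch after a restart neither loses nor duplicates work.
    """
    for change in sorted(changes, key=lambda c: c.position):
        if change.position <= last_applied:
            continue
        if change.op == "delete":
            target.pop(change.key, None)
        else:
            target[change.key] = change.row
        last_applied = change.position
    return last_applied


# State of the source when the bulk load started.
source_snapshot = {1: {"name": "Ann"}, 2: {"name": "Bob"}}

# Changes captured from the source while the bulk load was running.
captured = [
    Change(1, "update", 2, {"name": "Robert"}),
    Change(2, "insert", 3, {"name": "Cy"}),
    Change(3, "delete", 1),
]

target = dict(source_snapshot)                             # 1. initial load
checkpoint = apply_changes(target, captured, 0)            # 2. catch up
checkpoint = apply_changes(target, captured, checkpoint)   # 3. replay after a simulated restart
```

The checkpoint is what makes the replay in step 3 a no-op. A real pipeline would also need to persist that checkpoint together with the target writes, which this sketch leaves out.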

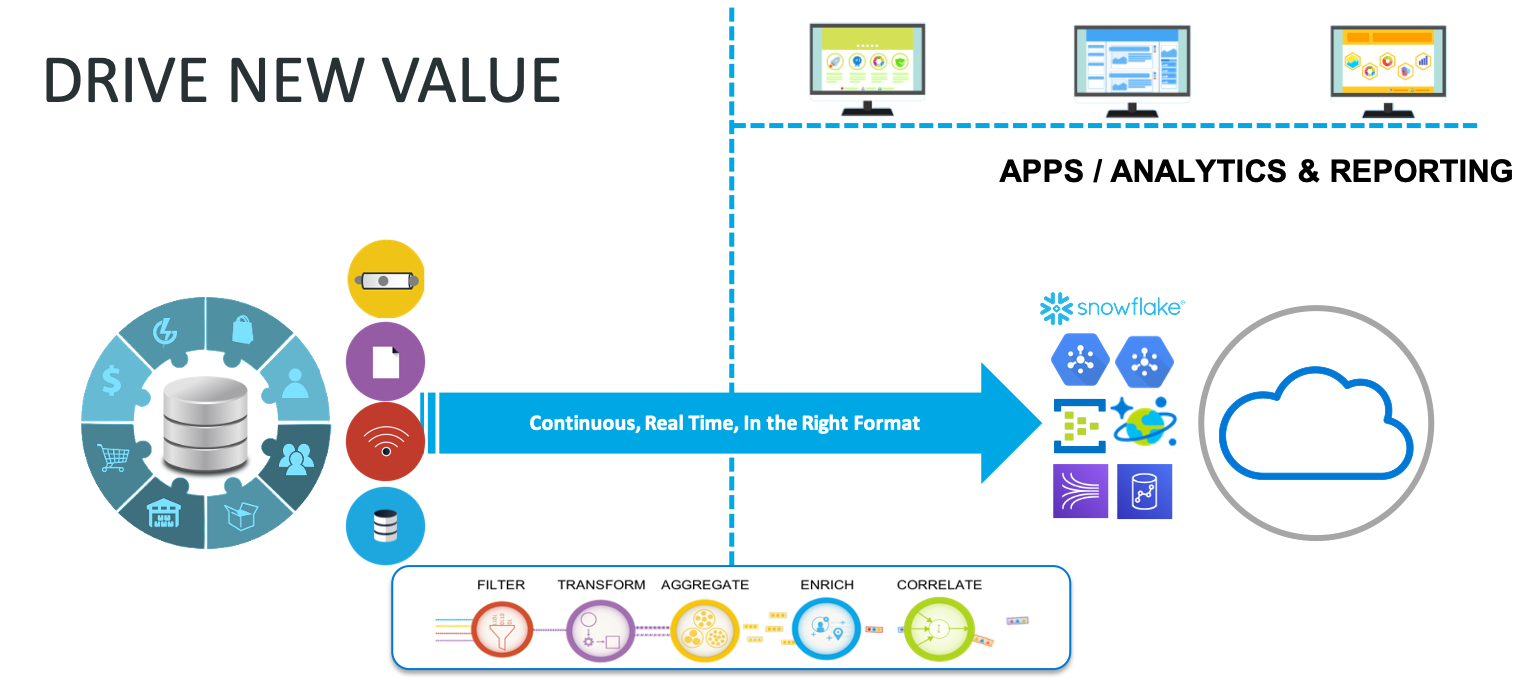

Continuous, streaming data integration for hybrid cloud architecture liberates your data for modernization and business transformation.

Cloud adoption and streaming integration are not limited to the lifting and shifting of your systems to the cloud. Ongoing integration post-migration is a crucial part of planning your cloud adoption. You cannot restrict it to database sources and database targets in the cloud, either. Your data lives in various systems and needs to be shared with different endpoints, such as your storage, data lake, or messaging systems in the cloud environment. Without enabling comprehensive and timely data flow from your enterprise systems to the cloud, what you can achieve in the cloud will be very limited.

“It is all about liberating your data,” Steve added in this part of the presentation. “Making it useful for the purpose you need it for. Continuous delivery in the correct format from a variety of sources relies on being able to filter that data, transform it, and possibly aggregate, join and enrich before you deliver to where needed. All of these can be done in Striim with a SQL-based language.”
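As a rough illustration of the filter, enrich, and aggregate steps Steve describes, the sketch below chains them over a small list of events in plain Python. It does not use Striim or its SQL-based language. The event fields (`customer_id`, `amount`), the lookup table, and the function names are assumptions made up for this example.

```python
def filter_events(events, min_amount):
    """Keep only events at or above a minimum amount."""
    for event in events:
        if event["amount"] >= min_amount:
            yield event


def enrich(events, regions):
    """Join each event with a lookup table to add the customer's region."""
    for event in events:
        yield {**event, "region": regions.get(event["customer_id"], "unknown")}


def total_by_region(events):
    """Aggregate event amounts per region."""
    totals = {}
    for event in events:
        totals[event["region"]] = totals.get(event["region"], 0) + event["amount"]
    return totals


# Hypothetical order events and reference data.
orders = [
    {"customer_id": 1, "amount": 120},
    {"customer_id": 2, "amount": 5},
    {"customer_id": 1, "amount": 40},
    {"customer_id": 3, "amount": 75},
]
regions = {1: "EMEA", 2: "APAC"}

totals = total_by_region(enrich(filter_events(orders, 10), regions))
print(totals)  # {'EMEA': 160, 'unknown': 75}
```

Because each stage is a generator, events flow through one at a time rather than in a batch, which is loosely analogous to how a streaming pipeline processes data before delivery.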

A key point both Edward and Steve made is that Striim is very flexible. You can read from multiple sources and deliver to multiple targets. True data liberation and modernization of your data infrastructure need that flexibility.

Striim also provides deployment flexibility. In fact, one of the questions in the Q&A part asked about deployment options and pricing. Unfortunately, we could not answer all the questions we received during the session. The short answer is: Striim can be deployed in the cloud, on-premises, or both via a hybrid topology. It is priced based on the CPUs of the servers where the Striim platform is installed, so you don’t need to worry about the sizes of your source and target systems.

This short webinar on cloud adoption covers much more than I could fit here. I invite you to watch it on-demand at your convenience. If you would like to get a customized demo for cloud adoption or other streaming data integration use cases, please feel free to reach out.