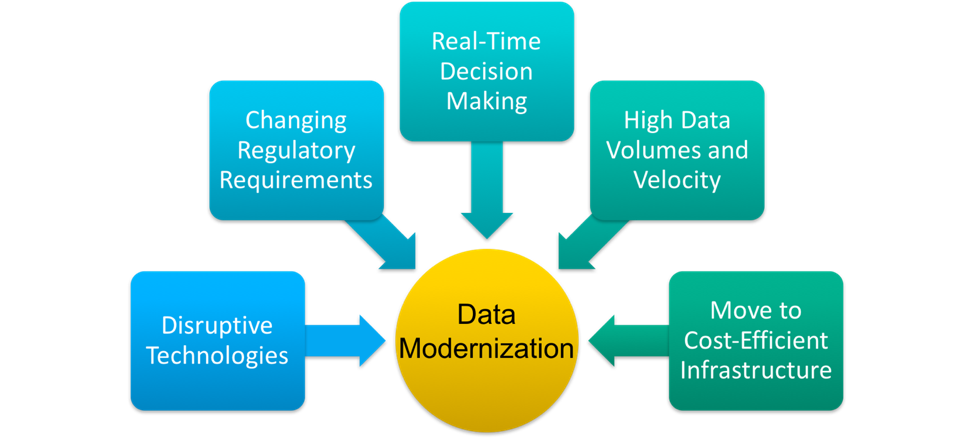

Today, we’re happy to share that Datanami published an article written by Striim’s very own CTO, Steve Wilkes! The piece discusses Data Modernization: the enterprise challenges pushing companies toward a modern data architecture, how we got here, and how companies can achieve data modernization on a realistic timeline.

According to Steve, companies need to take many forces into consideration when choosing the most appropriate technologies, architectures and processes. These enterprise challenges include disruptive technologies, changing regulatory requirements, high data volumes and velocity, and the need for real-time decision making. Two further considerations are:

- The Speed of Enterprise Technology – Technological advances have dramatically shortened enterprise technology cycles, and the limitations of legacy systems are pushing businesses to adopt modern technologies.

- Cost and Competition – Cost is always a factor when updating enterprise technology, but leaders must weigh it against the need to invest in innovation to stay competitive in their market; if competitors or new players adopt new technology first, they risk losing market share.

Steve went on to discuss the paradigm shift in data management. From the 1980s through the 2000s, standard industry practice was ETL and batch capture; over the last decade or so, we have gained not only the opportunity but also the need to deal with data in real time.

In the earlier era, storage was cheap and perceived as almost infinite, while memory and CPU were very expensive. Aside from specific industries that needed to analyze data in real time, processing and analyzing data the moment it was born was seen as out of reach. So although batch capture was an artificial construct imposed by the technological limitations of the time, it became the industry standard for processing and analyzing data.

Today, by contrast, memory and CPU prices have become much more manageable, enabling companies to consider a real-time data architecture. Real-time processing is also on its way to becoming an actual business requirement: since the advent of IoT, we’ve discovered that storage is, in fact, not unlimited, and by 2025 we will not be able to physically store all the data being generated. If we can’t store all the data, the logical evolution of data management is to deal with it in real time and store only the most critical information.
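As a rough illustration of "deal with it in real time and store only the most critical information", here is a minimal Python sketch. It assumes a hypothetical stream of IoT sensor readings and a simple threshold rule for what counts as critical; the `Reading`, `RunningStats` and `process_stream` names are invented for this example and are not part of any Striim product. Every reading updates running per-sensor statistics in memory, but only readings at or above the threshold are passed on for storage.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator


@dataclass
class Reading:
    sensor_id: str  # hypothetical IoT device identifier
    value: float


@dataclass
class RunningStats:
    """In-memory summary kept per sensor instead of storing every raw reading."""
    count: int = 0
    total: float = 0.0

    @property
    def mean(self) -> float:
        return self.total / self.count if self.count else 0.0


def process_stream(
    readings: Iterable[Reading],
    threshold: float,  # assumed rule for what is "critical" enough to store
    stats: Dict[str, RunningStats],
) -> Iterator[Reading]:
    """Handle each reading as it arrives; yield only the ones worth persisting."""
    for r in readings:
        # Every reading contributes to the in-memory summary...
        s = stats.setdefault(r.sensor_id, RunningStats())
        s.count += 1
        s.total += r.value
        # ...but only critical readings continue on to storage.
        if r.value >= threshold:
            yield r
```

Because `process_stream` is a generator, it works the same way whether `readings` is a finite list or an unbounded feed: each event is examined once, in memory, and the raw data that falls below the threshold is never written anywhere.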

This is why companies need to begin looking at a ‘streaming first’ architecture.

Real-time, in-memory stream processing of all data can now be a reality and should be part of any Data Modernization plan. One of the great things about a ‘streaming first’ architecture is that it doesn’t have to happen overnight, and legacy systems don’t need to be ripped and replaced.

The first step is to collect data continuously and in real time by applying Change Data Capture (CDC) to legacy databases. This enables companies to take full advantage of a modern data infrastructure that can meet both the current and future business and technology needs of the enterprise.
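To make the CDC idea concrete, here is a minimal Python sketch that simulates it in memory. Real CDC tools typically read changes from the source database's transaction log; here that log is just a hypothetical Python list of `ChangeEvent` records, and `CdcReader` and `apply_changes` are illustrative names, not Striim's API. The reader remembers the last log position it has seen, so each poll returns only new inserts, updates and deletes, which are then applied to a downstream copy of the data.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class ChangeEvent:
    lsn: int                       # position in the (simulated) transaction log
    op: str                        # "insert", "update" or "delete"
    key: int                       # primary key of the affected row
    row: Optional[Dict[str, Any]]  # new row image; None for deletes


class CdcReader:
    """Tracks the last log position read, so each poll returns only new changes."""

    def __init__(self) -> None:
        self.last_lsn = 0

    def poll(self, log: List[ChangeEvent]) -> List[ChangeEvent]:
        new = [e for e in log if e.lsn > self.last_lsn]
        if new:
            self.last_lsn = max(e.lsn for e in new)
        return new


def apply_changes(target: Dict[int, Dict[str, Any]], events: List[ChangeEvent]) -> None:
    """Replay captured changes onto a downstream copy of the table."""
    for e in events:
        if e.op == "delete":
            target.pop(e.key, None)
        else:
            # Inserts and updates both upsert the latest row image.
            assert e.row is not None, "insert/update events must carry a row"
            target[e.key] = dict(e.row)
```

A typical loop would call `reader.poll(log)` repeatedly and feed each batch to `apply_changes`. The source table is never queried in bulk or modified, which is what lets a streaming-first pipeline sit alongside a legacy system instead of replacing it.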

Once real-time data pipelines are in place, they open the door to solutions for a number of industry problems, such as synchronizing data across hybrid environments, incorporating edge processing and analytics to help scale with growing data volumes, and streamlining the integration of newer technologies into your infrastructure, to name just a few.

Learn more about the importance of Data Modernization and adopting a ‘streaming first’ architecture by reading Steve’s article on Datanami, “Embracing the Future – The Push for Data Modernization Today.”